From this thread you will learn about 12 key #OSINT services for gathering information about a website

Cyber Detective • @cyb_detective

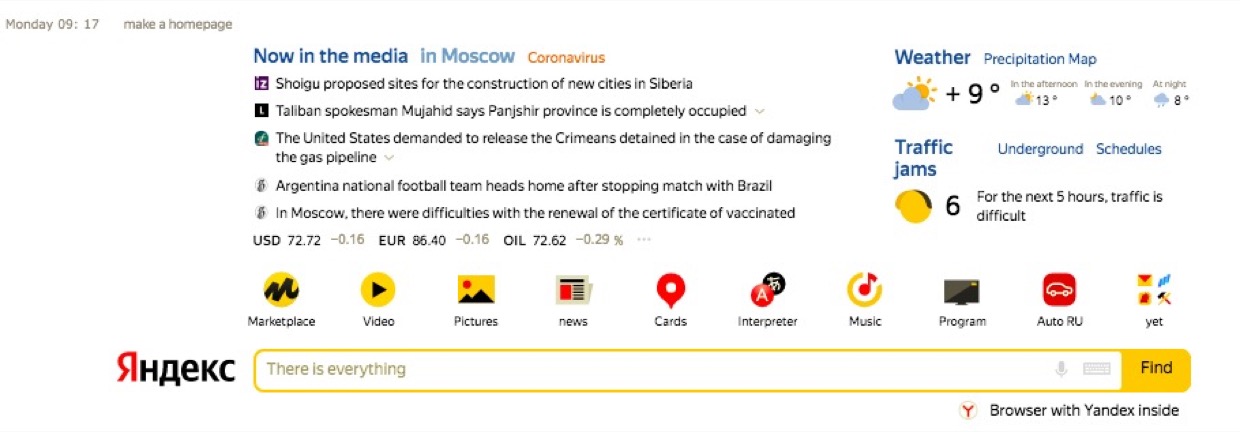

I'll demonstrate them using the most famous Russian search engine, "yandex.ru", and its subdomains. [#1]

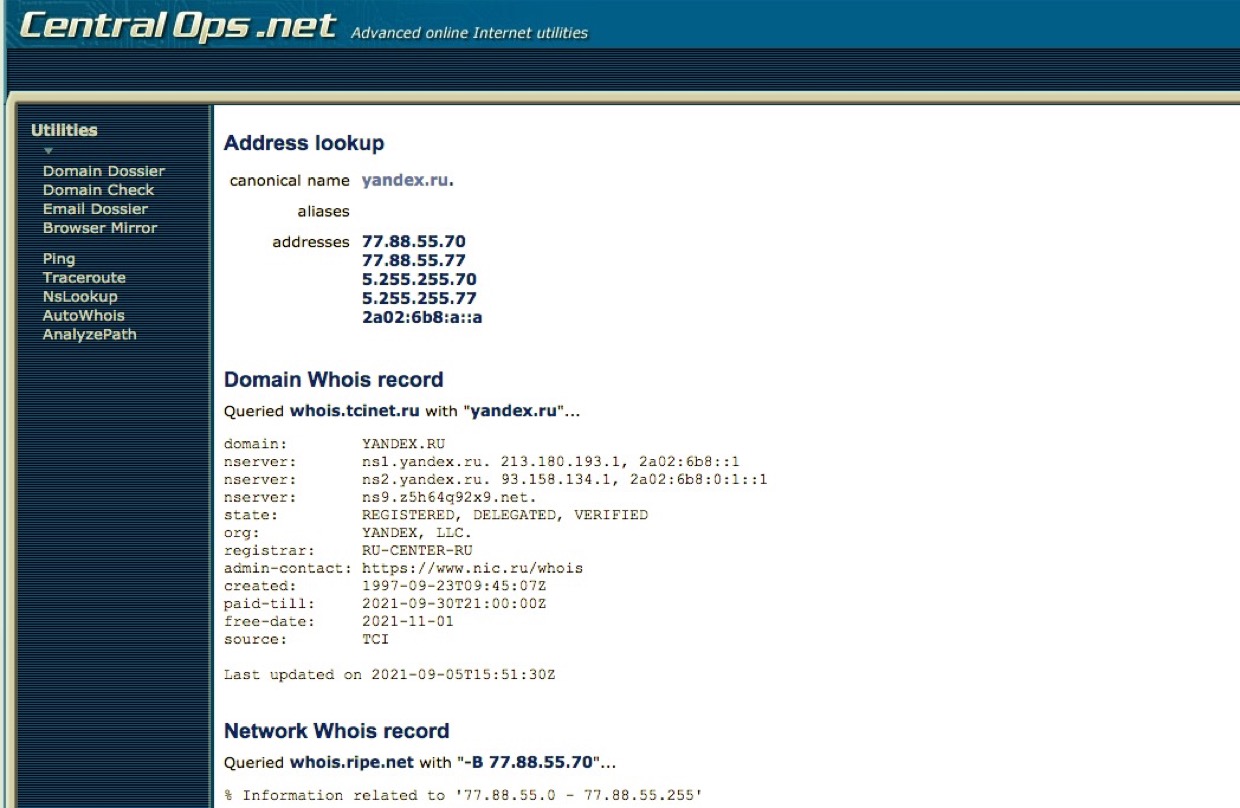

Step 1

Collect basic information about the domain:

IP address lookup, WHOIS records, DNS records, ping, traceroute, nslookup.

centralops.net/ [#2]

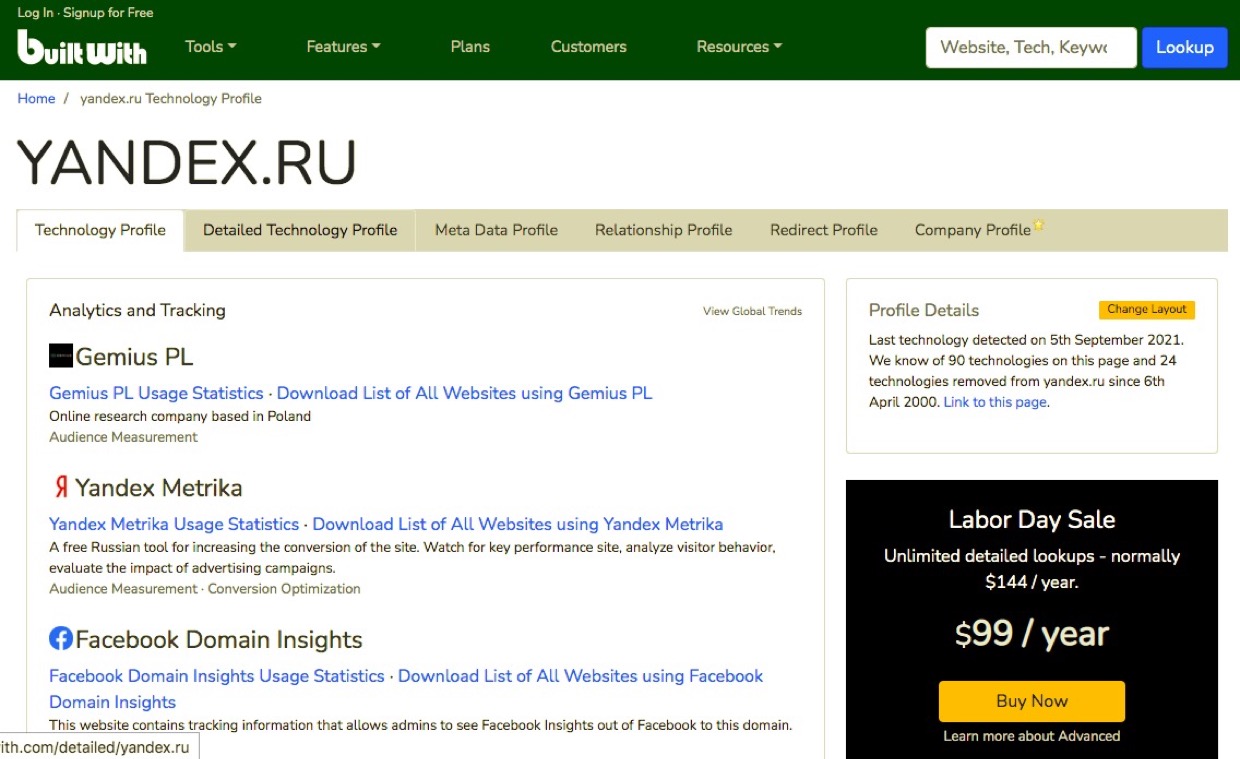
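centralops.net runs these checks in the browser. If you want to script two of them (IP address lookup and WHOIS), here is a minimal stdlib-only Python sketch. The function names are hypothetical, and starting at whois.iana.org and then following its `refer:` line to the registry's WHOIS server is an assumed workflow, not how centralops.net works internally.

```python
import socket


def resolve_ips(domain: str) -> list[str]:
    """IP address lookup: return the IPv4 addresses the domain resolves to."""
    _, _, addresses = socket.gethostbyname_ex(domain)
    return sorted(set(addresses))


def whois_query(domain: str, server: str = "whois.iana.org", timeout: float = 10.0) -> str:
    """Send a raw WHOIS query (TCP port 43, RFC 3912) and return the reply text."""
    with socket.create_connection((server, 43), timeout=timeout) as sock:
        sock.sendall(domain.encode("idna") + b"\r\n")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection when it has answered
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_whois(text: str) -> dict[str, list[str]]:
    """Collect 'key: value' lines; repeated keys (e.g. several nserver lines) are kept."""
    fields: dict[str, list[str]] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("%", "#")) or ":" not in line:
            continue  # skip blank lines, comments and free-text lines
        key, _, value = line.partition(":")
        if value.strip():
            fields.setdefault(key.strip().lower(), []).append(value.strip())
    return fields


# Hypothetical usage (needs network access):
#   print(resolve_ips("yandex.ru"))
#   iana = parse_whois(whois_query("yandex.ru"))
#   registry = iana.get("refer", [None])[0]  # assumption: IANA names the TLD's WHOIS server here
#   if registry:
#       print(parse_whois(whois_query("yandex.ru", server=registry)))
```

Keeping repeated keys as lists matters because registries usually return several `nserver` lines for one domain.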

Step 2

Find out which technologies were used to build the site: frameworks, #javascript libraries, analytics and tracking tools, widgets, payment systems, content delivery networks, etc.

builtwith.com/ [#3]

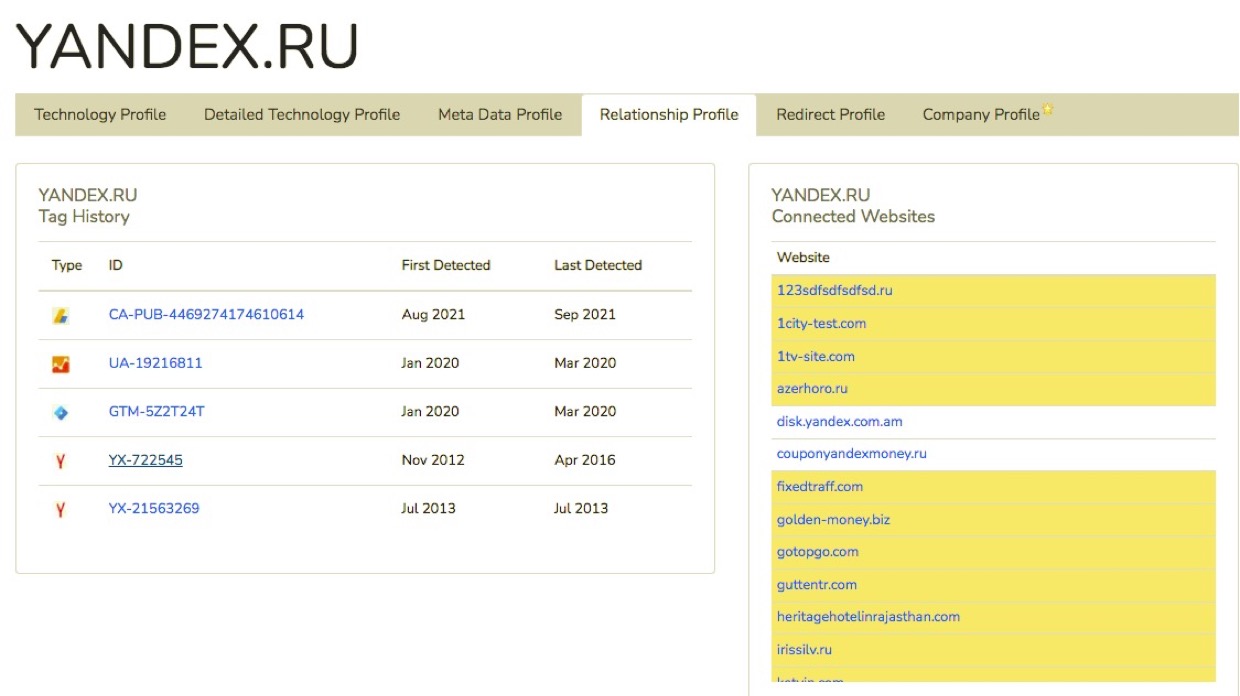
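BuiltWith matches a page against a large database of technology fingerprints. The core idea can be sketched in a few lines of Python: search the page source for known signatures. The signature list below is a tiny, hypothetical example, not BuiltWith's rules, so expect both misses and false positives.

```python
import re
from urllib.request import Request, urlopen

# Hypothetical, deliberately tiny signature list (name -> regex over the HTML source).
SIGNATURES: dict[str, str] = {
    "jQuery": r"jquery(?:\.min)?\.js",
    "WordPress": r"/wp-content/|/wp-includes/",
    "Google Analytics / gtag.js": r"googletagmanager\.com/gtag/js|google-analytics\.com/analytics\.js",
    "Yandex.Metrika": r"mc\.yandex\.ru/metrika",
    "Cloudflare cdnjs": r"cdnjs\.cloudflare\.com",
}


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page's HTML (network access required)."""
    request = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def detect_technologies(html: str) -> list[str]:
    """Return the names of all signatures whose pattern occurs in the page source."""
    return sorted(
        name for name, pattern in SIGNATURES.items()
        if re.search(pattern, html, re.IGNORECASE)
    )


# Hypothetical usage: print(detect_technologies(fetch_html("https://yandex.ru/")))
```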

Step 3

Get a list of sites belonging to the same owner (sites that share the same Yandex.Metrika and Google Analytics counter IDs, as well as other common identifiers).

builtwith.com/relationships/

Find sites with the same Facebook App ID.

analyzeid.com/ [#4]

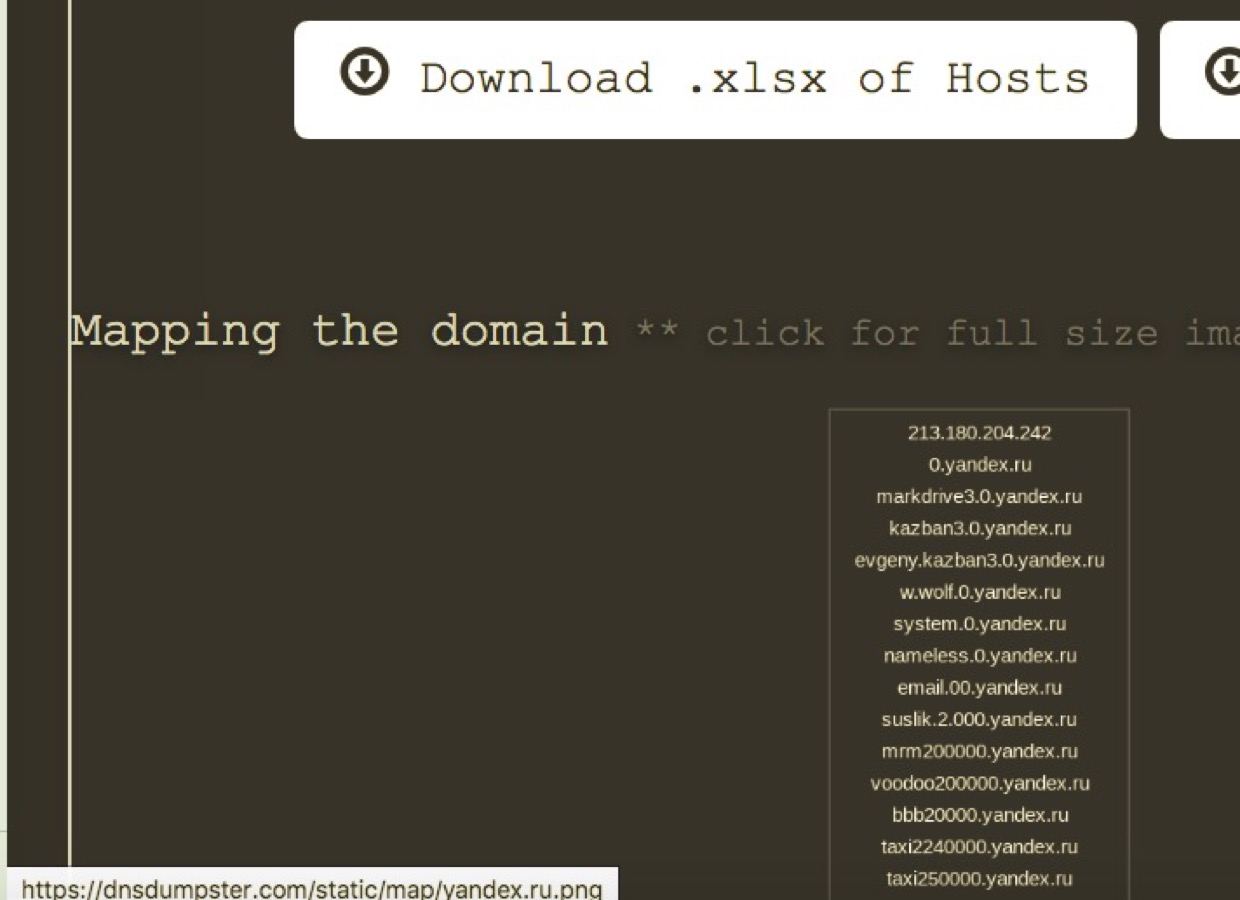
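Both services work by matching shared identifiers across many sites. The first half of that, pulling the identifiers out of one page, can be sketched with regular expressions. The patterns are hypothetical approximations of common embed snippets (`UA-…` / `G-…` for Google Analytics, `ym(<id>, "init"` or `yaCounter<id>` for Yandex.Metrika, `fb:app_id` or `appId:` for Facebook); real pages vary.

```python
import re

# Approximate, hypothetical patterns for common tracking-code snippets.
PATTERNS = {
    "google_analytics_ua": re.compile(r"\bUA-\d{4,10}-\d{1,4}\b"),
    "google_analytics_ga4": re.compile(r"\bG-[A-Z0-9]{6,12}\b"),
    "yandex_metrika": re.compile(r"""ym\(\s*(\d{5,10})\s*,\s*["']init["']|yaCounter(\d{5,10})"""),
    "facebook_app_id": re.compile(r"""fb:app_id["']\s+content=["'](\d{5,20})|appId\s*:\s*["'](\d{5,20})"""),
}


def extract_ids(html: str) -> dict[str, list[str]]:
    """Return the unique identifiers found for each pattern (empty patterns are omitted)."""
    found: dict[str, list[str]] = {}
    for name, pattern in PATTERNS.items():
        values = set()
        for match in pattern.finditer(html):
            groups = [g for g in match.groups() if g]
            # Use the captured number if the pattern has groups, else the whole match.
            values.add(groups[0] if groups else match.group(0))
        if values:
            found[name] = sorted(values)
    return found
```

The extracted IDs are what you would then look up in builtwith.com/relationships/ or analyzeid.com.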

Step 4

Map subdomains.

dnsdumpster.com/#domainmap [#5]

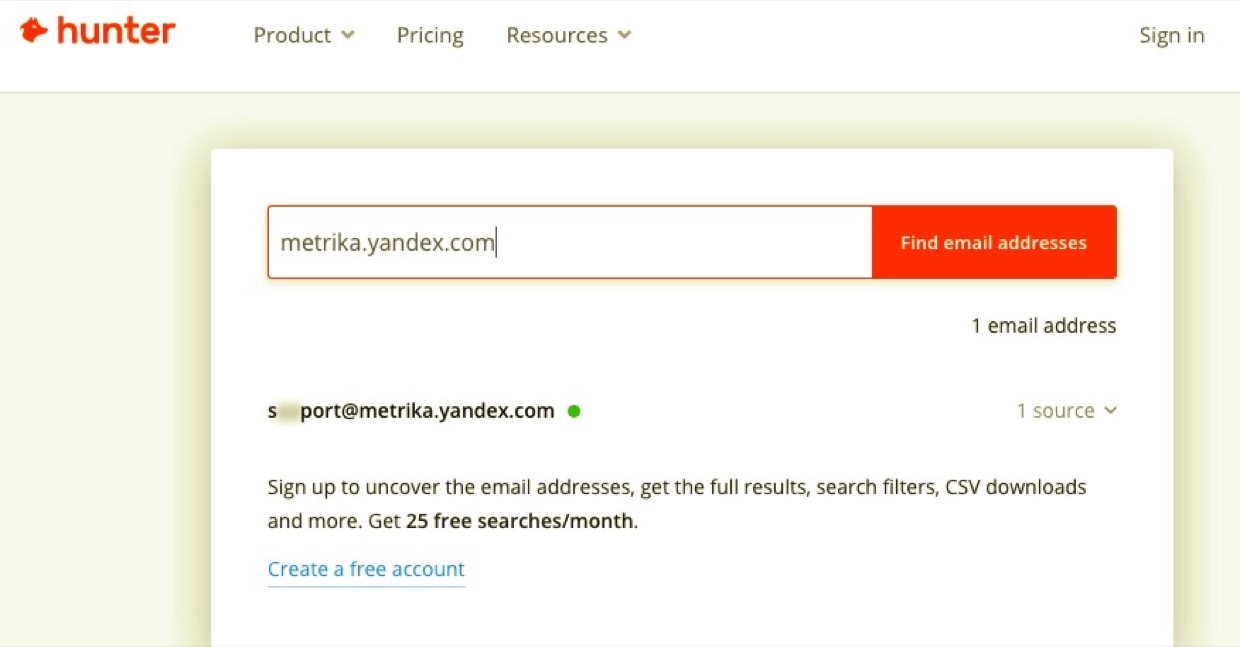
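DNSdumpster builds its map from passively collected data. A different, complementary technique is wordlist brute force: guess common labels and keep the ones that resolve in DNS. A minimal Python sketch; the function name and wordlist are hypothetical, and the resolver is a parameter so it can be swapped out.

```python
import socket
from typing import Callable, Iterable


def enumerate_subdomains(
    domain: str,
    words: Iterable[str],
    resolve: Callable[[str], str] = socket.gethostbyname,
) -> dict[str, str]:
    """Try '<word>.<domain>' for every candidate word and keep the names that resolve."""
    found: dict[str, str] = {}
    for word in words:
        label = word.strip().lower()
        if not label:
            continue  # ignore empty lines in the wordlist
        host = f"{label}.{domain}"
        try:
            found[host] = resolve(host)
        except OSError:  # socket.gaierror (name not found) is a subclass of OSError
            continue
    return found


# Hypothetical usage with a tiny illustrative wordlist (needs network access):
#   print(enumerate_subdomains("yandex.ru", ["mail", "market", "maps", "api"]))
```

If the domain uses wildcard DNS (every name resolves), every guess looks like a hit, so compare against the answer for a random label before trusting the results.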

Step 5

Look for email addresses associated with the domain or its subdomains.

hunter.io/search/

or

snov.io/email-finder [#6]

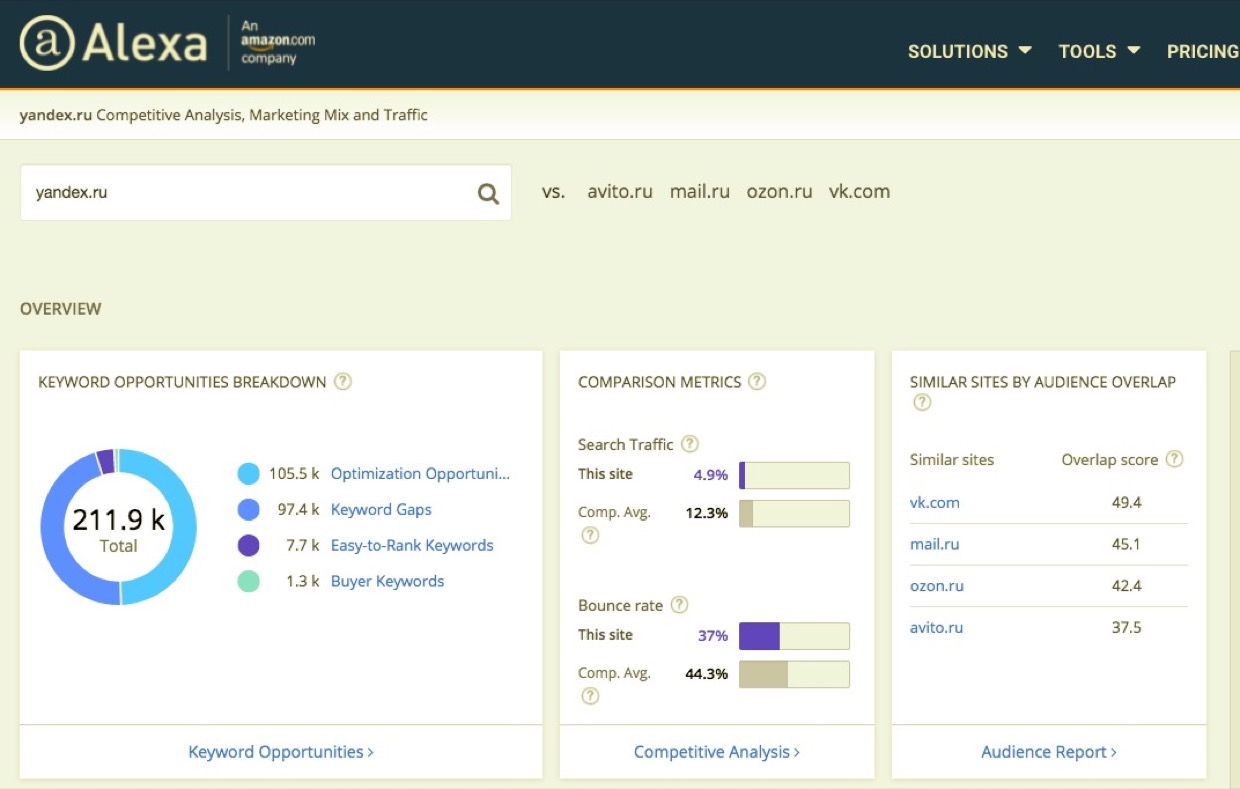
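hunter.io and snov.io search their own indexes. If you already have text to sift (saved pages, archived copies), a small filter can keep only the addresses that belong to the target domain or its subdomains. A minimal Python sketch; the regex is a simplified, hypothetical email pattern.

```python
import re

# Simplified email pattern; group 1 captures the host part after "@".
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@([A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+)")


def emails_for_domain(text: str, domain: str) -> list[str]:
    """Return unique, lower-cased emails whose host is the domain or one of its subdomains."""
    domain = domain.lower()
    found = set()
    for match in EMAIL_RE.finditer(text):
        host = match.group(1).lower()
        # "mail.example.com" counts for "example.com", but "notexample.com" does not.
        if host == domain or host.endswith("." + domain):
            found.add(match.group(0).lower())
    return sorted(found)
```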

Step 6

Collect data on search engine rankings and approximate traffic.

alexa.com/siteinfo/ (retired by Amazon in May 2022)

similarweb.com/ [#7]

Step 7

Download documents (PDF, docx, xlsx, pptx) from the site and analyze their metadata. This can reveal the names of the organization's employees, their usernames in internal systems, and their email addresses.

github.com/laramies/metagoofil [#8]

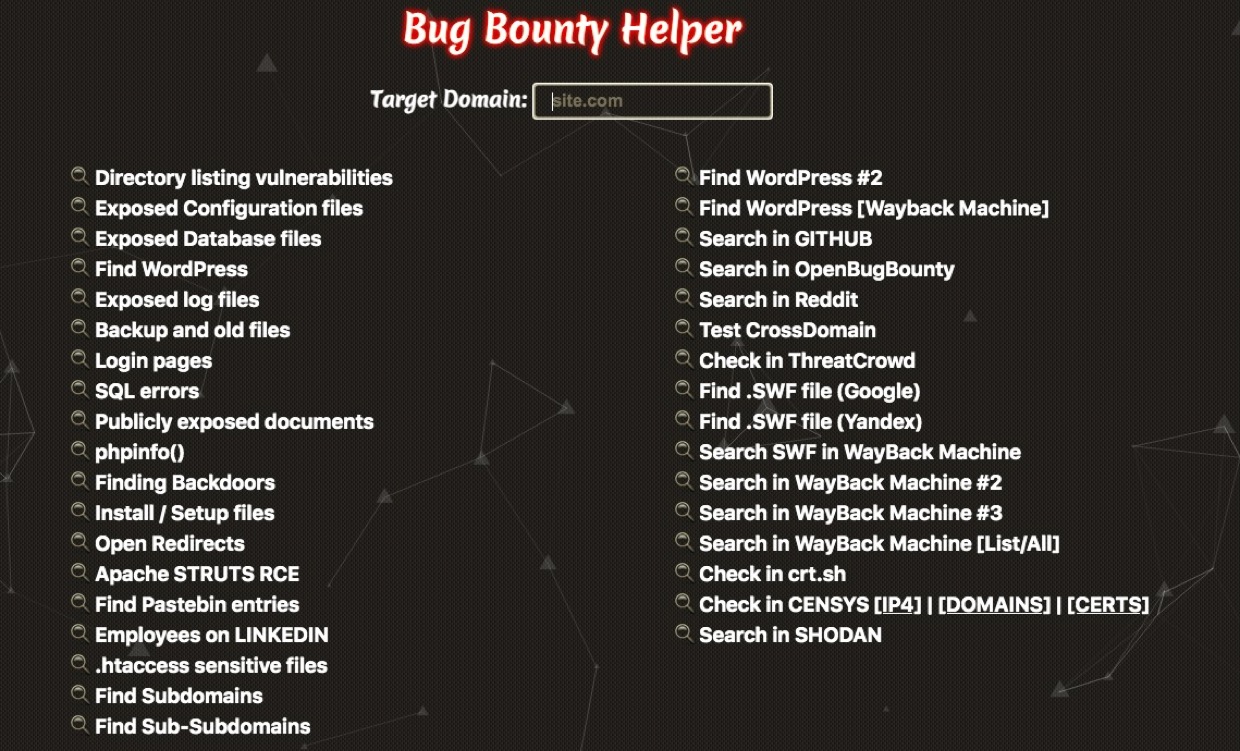
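Metagoofil handles both finding and downloading the documents. The metadata part is easy to illustrate for Office Open XML files (docx, xlsx, pptx): they are ZIP archives whose author fields usually live in `docProps/core.xml`. A minimal Python sketch, assuming that conventional path; it is not Metagoofil's implementation and does not cover PDF.

```python
import xml.etree.ElementTree as ET
import zipfile

# XML namespaces used in docProps/core.xml of Office Open XML files.
NS = {
    "cp": "http://schemas.openxmlformats.org/package/2006/metadata/core-properties",
    "dc": "http://purl.org/dc/elements/1.1/",
}
FIELDS = {"creator": "dc:creator", "last_modified_by": "cp:lastModifiedBy", "title": "dc:title"}


def parse_core_xml(xml_bytes: bytes) -> dict[str, str]:
    """Extract author-related fields from a core.xml document; missing fields are skipped."""
    root = ET.fromstring(xml_bytes)
    result = {}
    for key, tag in FIELDS.items():
        element = root.find(tag, NS)
        if element is not None and element.text:
            result[key] = element.text.strip()
    return result


def office_metadata(path: str) -> dict[str, str]:
    """Open a docx/xlsx/pptx file (a ZIP archive) and read its core properties."""
    with zipfile.ZipFile(path) as archive:
        return parse_core_xml(archive.read("docProps/core.xml"))


# Hypothetical usage: print(office_metadata("downloaded/report.docx"))
```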

Step 8

Use Google Dorks to look for database dumps, office documents, log files, and potentially vulnerable pages.

dorks.faisalahmed.me [#9]

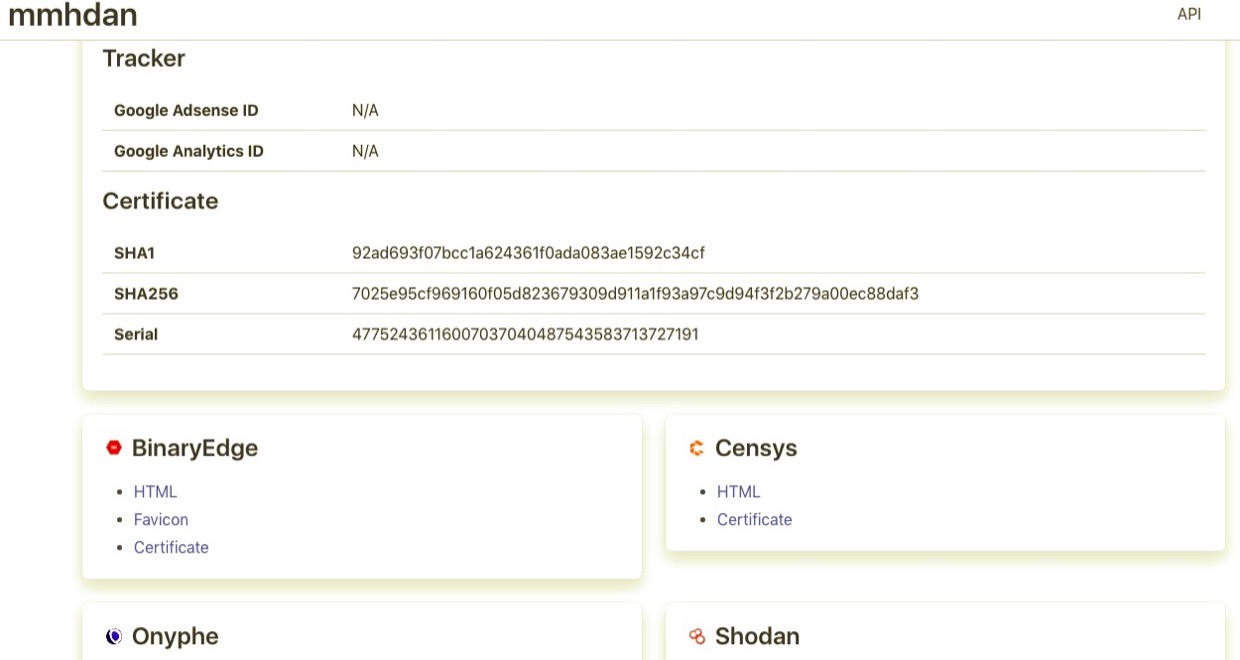
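Dork generators like this one fill a domain into ready-made query templates. A minimal Python sketch of that idea; the four templates are a hypothetical starter set built from standard Google operators (`site:`, `ext:`, `inurl:`, `|` for OR), not the generator's own list.

```python
from urllib.parse import quote_plus

# Hypothetical starter templates; {d} is replaced with the target domain.
DORK_TEMPLATES = [
    "site:{d} ext:sql | ext:dbf | ext:mdb",                        # database dumps
    "site:{d} ext:doc | ext:docx | ext:xls | ext:xlsx | ext:pdf",  # office documents
    "site:{d} ext:log",                                            # log files
    "site:{d} inurl:admin | inurl:login",                          # potentially sensitive pages
]


def build_dorks(domain: str) -> list[str]:
    """Fill the domain into every template."""
    return [template.format(d=domain) for template in DORK_TEMPLATES]


def google_search_url(query: str) -> str:
    """Turn a dork into a Google search URL you can open in a browser."""
    return "https://www.google.com/search?q=" + quote_plus(query)


# Hypothetical usage:
#   for dork in build_dorks("yandex.ru"):
#       print(google_search_url(dork))
```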

Step 9

Calculate a website fingerprint to search for it in Shodan, Censys, BinaryEdge, Onyphe, and other "hacker" search engines.

mmhdan.herokuapp.com [#10]
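One widely used website fingerprint is the favicon hash that Shodan indexes under the `http.favicon.hash:` filter: MurmurHash3 (32-bit, signed) of the base64-encoded favicon bytes, with the base64 text split into 76-character lines. Whether mmhdan computes exactly this value is an assumption. Below is a stdlib-only Python sketch with a pure-Python MurmurHash3, so no third-party package is needed; the function names are hypothetical.

```python
import base64


def murmur3_32(data: bytes, seed: int = 0) -> int:
    """MurmurHash3 (x86, 32-bit), returned as a signed int like the mmh3 package."""
    c1, c2, mask = 0xCC9E2D51, 0x1B873593, 0xFFFFFFFF
    h = seed & mask
    length = len(data)
    nblocks = length // 4

    def mix(k: int) -> int:
        k = (k * c1) & mask
        k = ((k << 15) | (k >> 17)) & mask  # rotate left by 15
        return (k * c2) & mask

    for i in range(nblocks):  # body: 4-byte little-endian blocks
        h ^= mix(int.from_bytes(data[4 * i:4 * i + 4], "little"))
        h = ((h << 13) | (h >> 19)) & mask  # rotate left by 13
        h = (h * 5 + 0xE6546B64) & mask

    tail = data[4 * nblocks:]  # the remaining 0 to 3 bytes
    if tail:
        h ^= mix(int.from_bytes(tail, "little"))

    h ^= length  # finalization mix (fmix32)
    h ^= h >> 16
    h = (h * 0x85EBCA6B) & mask
    h ^= h >> 13
    h = (h * 0xC2B2AE35) & mask
    h ^= h >> 16
    return h - (1 << 32) if h & 0x80000000 else h


def favicon_hash(favicon: bytes) -> int:
    """Hash the base64 text (76-char lines plus trailing newline, as base64.encodebytes emits)."""
    return murmur3_32(base64.encodebytes(favicon))


def shodan_query(favicon: bytes) -> str:
    """Build a Shodan search filter for sites serving the same favicon."""
    return f"http.favicon.hash:{favicon_hash(favicon)}"


# Hypothetical usage: download e.g. https://yandex.ru/favicon.ico as bytes, then
#   print(shodan_query(favicon_bytes))
```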

Step 10

Look for old versions of the site in web archives and search engine caches (this can sometimes reveal the owners' addresses and contact details that have since been removed from the site).

cipher387.github.io/quickcacheandar… [#11]
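For scripted checks, the Internet Archive also offers a Wayback Machine Availability API (`https://archive.org/wayback/available?url=…&timestamp=…`) that returns the snapshot closest to a given date. This is a separate, swapped-in technique, not what the tool above uses. A minimal Python sketch that builds the request URL and parses the JSON reply; the function names are hypothetical.

```python
import json
from typing import Optional
from urllib.parse import urlencode


def availability_url(target: str, timestamp: str = "") -> str:
    """Build an Availability API request; timestamp is YYYYMMDD[hhmmss] (optional)."""
    params = {"url": target}
    if timestamp:
        params["timestamp"] = timestamp
    return "https://archive.org/wayback/available?" + urlencode(params)


def closest_snapshot(response_text: str) -> Optional[str]:
    """Return the URL of the closest archived snapshot, or None if there is none."""
    data = json.loads(response_text)
    closest = data.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest["url"]
    return None


# Hypothetical usage (needs network access):
#   from urllib.request import urlopen
#   with urlopen(availability_url("yandex.ru", "20100101")) as resp:
#       print(closest_snapshot(resp.read().decode("utf-8")))
```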

Step 11

Partially automate the search for important data in the archives. Download archived copies of pages from web.archive.org with Waybackpack.

github.com/jsvine/waybackpack

Then search the downloaded copies for phone numbers, emails, and nicknames using Grep for OSINT.

github.com/cipher387/grep_for_osint [#12]

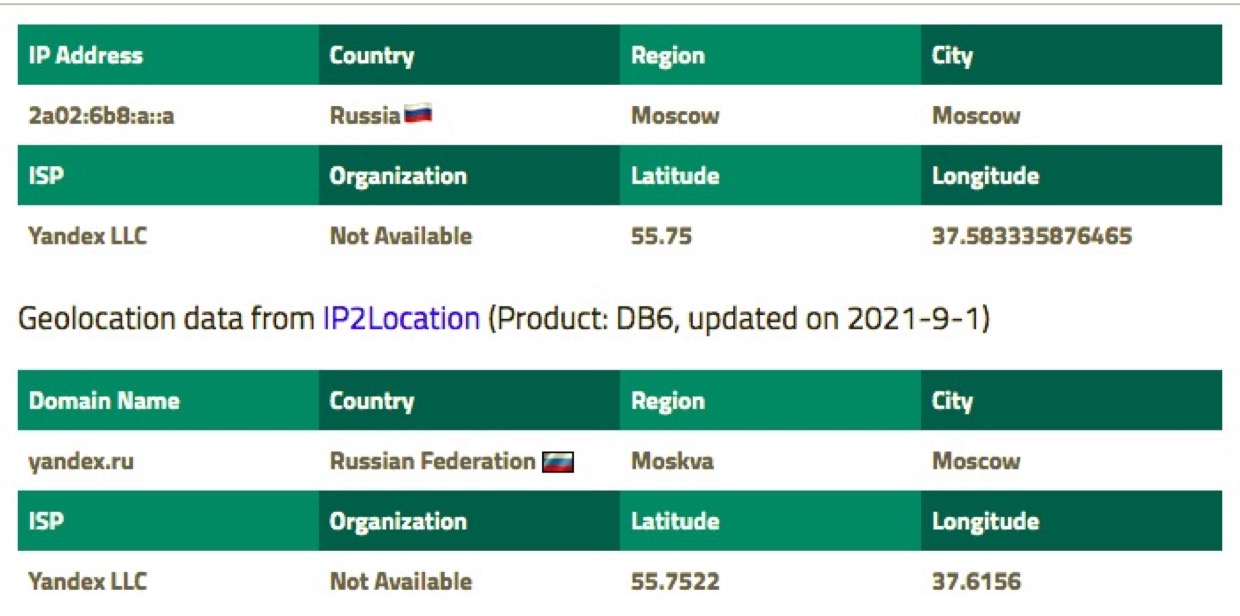
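Grep for OSINT runs grep patterns over the downloaded files. A rough Python stand-in for the same idea is sketched below; the three regexes are loose, hypothetical patterns (not the project's own), so expect false positives such as dates matching the phone pattern.

```python
import re
from pathlib import Path

# Loose, hypothetical patterns; review every hit by hand.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+"),
    "phone": re.compile(r"\+?\d[\d\s().-]{8,}\d"),
    "nickname": re.compile(r"(?<![\w@])@[A-Za-z0-9_]{3,30}\b"),  # @handle not inside an email
}


def scan_text(text: str) -> dict[str, set[str]]:
    """Return the unique matches of every pattern in one piece of text."""
    return {name: set(pattern.findall(text)) for name, pattern in PATTERNS.items()}


def scan_directory(root: str) -> dict[str, set[str]]:
    """Scan every file under root (e.g. a Waybackpack output folder) and merge the hits."""
    totals: dict[str, set[str]] = {name: set() for name in PATTERNS}
    for path in Path(root).rglob("*"):
        if path.is_file():
            text = path.read_text(encoding="utf-8", errors="replace")
            for name, hits in scan_text(text).items():
                totals[name] |= hits
    return totals


# Hypothetical usage: print(scan_directory("yandex-archive"))
```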

Step 12

Find out the approximate geographical location of the site's server.

iplocation.net/ip-lookup

(There is a separate 12-step thread about gathering information about a place)

twitter.com/cyb_detective/status/14… [#13]

This short thread is over.

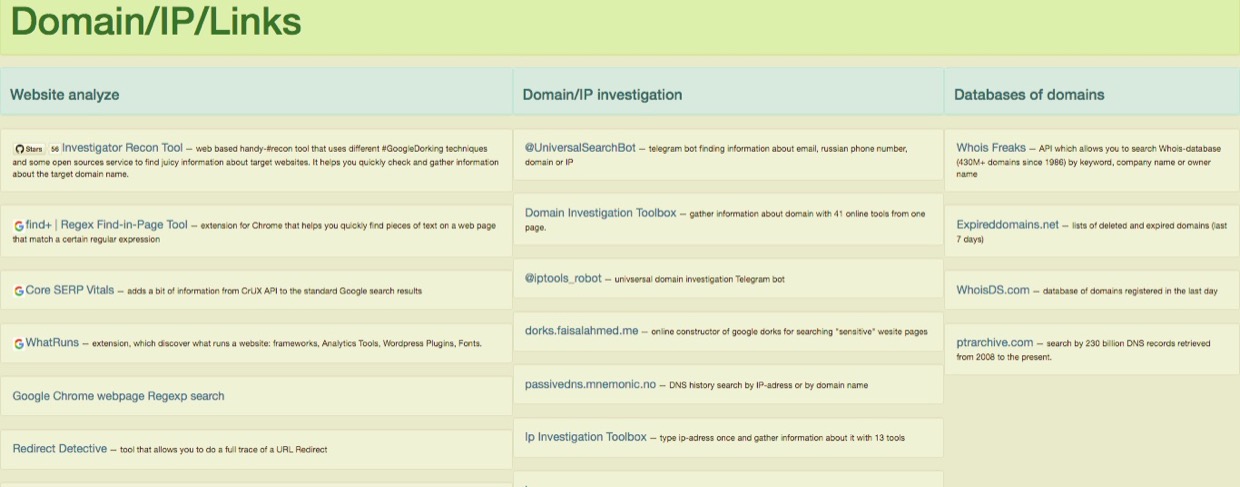

But there are many more tools for gathering information about domains. My OSINT collection already includes more than 60 of them:

cipher387.github.io/osint_stuff_too…

Follow @cyb_detective to learn about new tools every day. [#14]