Why computers suck and how learning from OpenBSD can make them marginally less horrible

Arthur Rasmusson (Co-Founder, Arc Compute) & Louis Castricato (Computer Scientist, University of Waterloo)

The following document is an attempt to consolidate a number of threads from separate discussions around the 'net that I have been having on the subject of operating system development models and OpenBSD. I will break this document up into several sections, each of which handles a separate pillar of my thinking, insofar as I've formed some semi-articulate thoughts that I can share.

On where operating system development went wrong:

To begin, it makes good sense to focus on Microsoft's Windows operating system. In Redmond a large number of engineers are focused on trying to make the Windows operating system function effectively for the needs of its market of private businesses and government agencies. These organizations typically have large workforces who never want application-breaking changes introduced to the operating system. Frequently, corporations running Windows for their workforce have paid outside firms sizeable sums of money to develop custom software that is "mission critical" to the company's core business. Companies who make such investments often view the money they've paid for the development of this software in the same way they would view an investment in any other asset - which is to say that they expect it to continue functioning for years. Worse yet, these outside firms often won't exist 10 years after the initial development of the software has been completed, and they often do not offer source code to their customers; hence, their customers end up stuck with old builds. Even in cases where the customer does have source code access, enterprise software developers, who are incentivized only to complete their scope of work, may have no incentive to write code in a manner that would help future maintainers; they may not even document the code at all.

Without the ability to modernize an application's codebase, introducing any API deprecations into an operating system can be catastrophic for enterprise customers who find themselves in this situation; as a result of these pressures, Microsoft has made a conscious decision to avoid altering its operating system's functionality in a manner that would introduce Application Binary Interface (ABI) incompatibilities.

Hence, Windows can be conceptualized as a fundamentally enterprise-oriented system with an enterprise-oriented development model. Changes are introduced carefully and often must be layered on top of existing functionality, so as not to disrupt or otherwise alter the expected behaviour of existing APIs used by applications previously compiled for the Win32/Win64 platform. Backward compatibility is certainly desirable, given that many programs compiled to machine code will not realistically be updated to match a moving spec; however, it comes at a significant cost. Architectural improvements are much more difficult to introduce when the Application Binary Interface (ABI) is static, and deprecating old code segments to fix bugs, improve performance, or introduce binary-incompatible features becomes impossible.
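To make the cost of an ABI change concrete, here is a minimal sketch in Python. It is an illustration only: the record layouts and the names `LAYOUT_V1`, `LAYOUT_V2`, `old_app_build_record`, and `new_library_read` are hypothetical and are not taken from Windows. An "old application" builds a record using the layout it was compiled against, and a "new library" that has since inserted a field tries to read the same bytes.

```python
import struct

# Hypothetical version 1 layout: two little-endian 32-bit unsigned ints (width, height).
LAYOUT_V1 = struct.Struct("<II")
# Hypothetical version 2 layout: a new 32-bit 'flags' field inserted at the front.
LAYOUT_V2 = struct.Struct("<III")


def old_app_build_record(width, height):
    """An application compiled against v1 emits exactly 8 bytes."""
    return LAYOUT_V1.pack(width, height)


def new_library_read(record):
    """A v2 library expects 12 bytes and rejects anything else."""
    if len(record) != LAYOUT_V2.size:
        raise ValueError(
            f"ABI mismatch: got {len(record)} bytes, expected {LAYOUT_V2.size}"
        )
    flags, width, height = LAYOUT_V2.unpack(record)
    return {"flags": flags, "width": width, "height": height}


def new_library_read_unchecked(record):
    """Without a size check the read 'succeeds', but every field is shifted."""
    padded = record.ljust(LAYOUT_V2.size, b"\x00")
    flags, width, height = LAYOUT_V2.unpack(padded)
    return {"flags": flags, "width": width, "height": height}
```

With a record built by `old_app_build_record(640, 480)`, the checked reader raises an error, while the unchecked reader reports the width as the flags and the height as the width. Either the old binary stops working or it misbehaves silently, which is why a vendor committed to old binaries cannot simply insert the field.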

*Edit [December 15th 2019]: The most common thread I have seen in response to this article has been to point out the value of backward compatibility for purposes other than those that fit within what is often defined as "enterprise software". Based on the emphasis this article places on the concept of the "enterprise development model" I feel that it makes good sense to insert a section meant to clarify the intended scope of the concept as it applies to my thinking on this subject.

Infogalactic: Application Binary Interface

infogalactic.com/info/Application_binary_interface

In some threads discussing this article the point has been raised that a number of types of programs, such as closed source games, are not "enterprise software". To clarify: when we refer to the "enterprise development model" we are not referring strictly to "enterprise software". Throughout this article the term "enterprise development model" refers to any model of software development that lacks ongoing developer support, in the form of the source code maintenance needed to keep a program working on a system whose user land APIs and/or ABI change between releases.

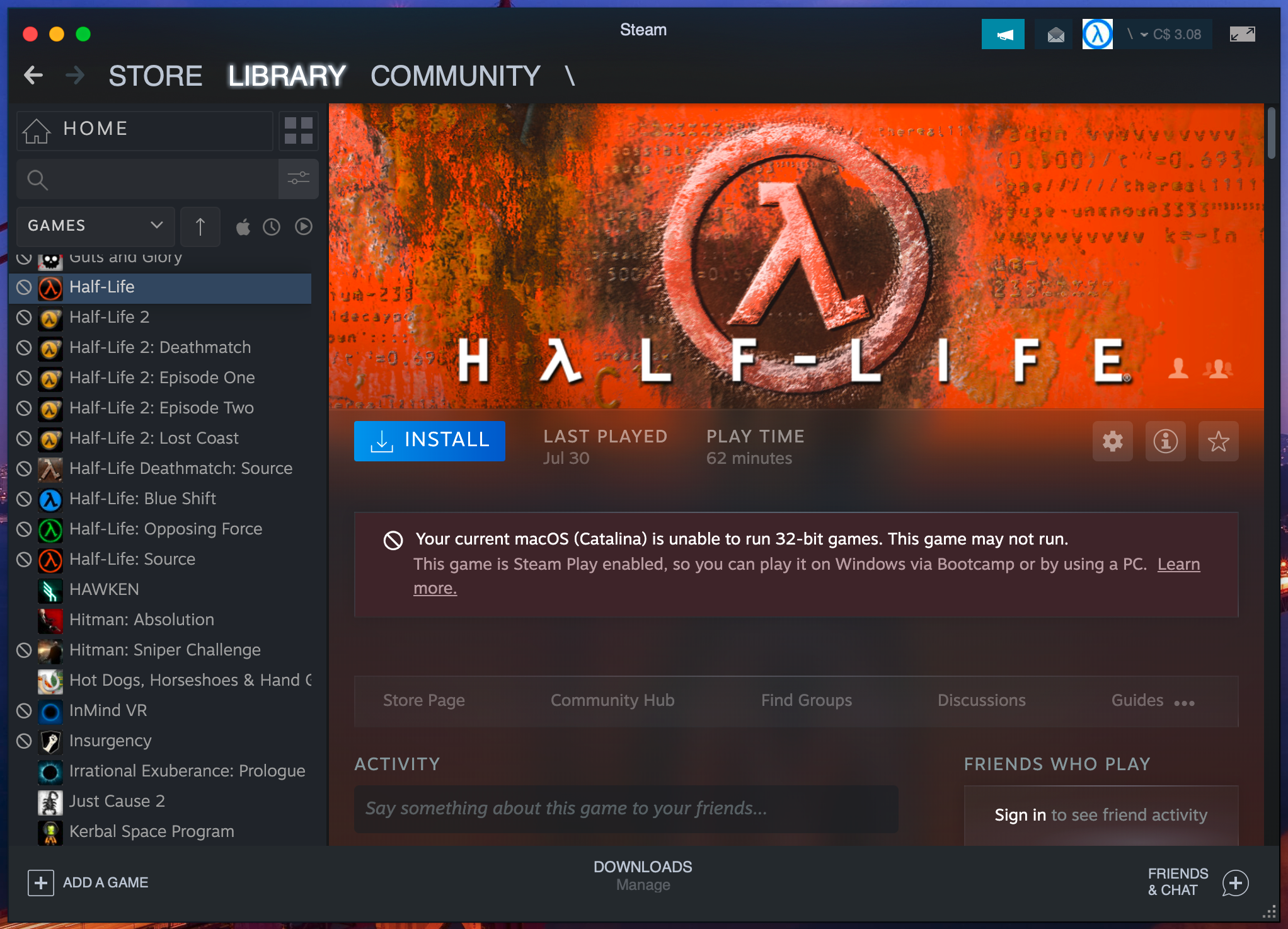

This would include, as has been pointed out in several threads discussing this article, games. A convenient illustration of this is the recent removal of support for 32-bit binaries in macOS Catalina, which left 32-bit-only games unable to run.

For the purposes of this article the enterprise development model will also be used to describe development of enterprise oriented operating systems which tailor themselves to preserving ABI backward compatibility as well as continuing to offer access to deprecated APIs upon which existing builds of software depend. I will further expand upon this point specifically in the following section.

The enterprise development model is the cancer killing the modern operating system.

To introduce this section, I'll share an anecdote from one of my older friends, a friend who had the pleasure of working on and consuming much of the history of early computer systems.

Prior to the release of Windows 98, Microsoft reflected on their previous major release with specific attention paid to the unwillingness of some customers to upgrade from MS-DOS to Windows 95. It turns out a large subset of the users who were able to upgrade but were otherwise unwilling had opted to remain on MS-DOS because many of the programs which had run on MS-DOS were incompatible with Windows 95.

Having observed this trend, then-Microsoft employees Michael Abrash (project lead on the Windows NT kernel and NT graphics subsystem, co-wrote Quake) and Gabe Newell (who later left Microsoft to found Valve) led a project at Microsoft to port the immensely popular DOS game "Doom" from MS-DOS to Windows 95. Microsoft viewed this strategy of selectively porting highly popular content to Windows 95 as key to increasing the rate of adoption for the system.

Beyond encouraging MS-DOS customers to upgrade their PCs to Windows 95 by letting them continue playing Doom (widely considered a PC killer app), Doom95 was also a strategic pitch to developers to adopt Microsoft's DirectX and Win32 APIs - APIs that would become entrenched.

Invidious: Bill Gates Doom95 presentation

invidio.us/KN0K58EfJSg

This way of thinking (which would ensure that sales of new versions of Windows would not be lost to existing copies of ABI-incompatible user land software) became ingrained both in the minds of the engineers working at Microsoft and in the software they wrote. Backwards compatibility became such a priority that, prior to the release of Windows 98, Microsoft's engineers travelled from their offices to local retailers to purchase whatever off-the-shelf software they could find. Their purpose was to implement separate, often entirely unique, mitigations per program that would manipulate their new operating system so that the software-facing ABI would present itself as compatible to this existing software. For example, Sim City apparently made use of a flag in a particular region of memory which was available to it under MS-DOS, but under Windows 98 the same address and flag signaled the operating system to shut down.
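The per-program mitigations described above can be pictured as a lookup table keyed by program. The following is a minimal sketch in Python and not Microsoft's implementation: the program name `oldgame.exe`, the `QUIRKS` table, and both handler functions are hypothetical, loosely modelled on the flag anecdote.

```python
# Hypothetical sketch of per-application compatibility quirks.
# None of these names come from Windows; they only illustrate the idea.

def default_write_flag(state, value):
    """New OS behaviour: writing a non-zero flag requests shutdown."""
    if value:
        state["shutdown_requested"] = True


def legacy_write_flag(state, value):
    """Quirk for an old program: store the flag privately, never shut down."""
    state["legacy_flag"] = value


# Table mapping an executable name to the behaviour it was written against.
QUIRKS = {
    "oldgame.exe": legacy_write_flag,
}


def write_flag(program, state, value):
    """Dispatch to a program-specific quirk if one exists, else the default."""
    handler = QUIRKS.get(program.lower(), default_write_flag)
    handler(state, value)
    return state
```

Every entry in such a table is code that has to be carried, tested, and kept working in each later release, which is the maintenance burden this article is concerned with.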

Since then, a major priority of the company has been to prevent major breaking changes between releases rather than introduce changes that would later require mitigations.

But what about Linux?

While not to the extreme of Windows, where Microsoft built software-specific patches, Linux has the same meta-problem. It tries to preserve backward compatibility with prior versions of the kernel's Application Binary Interface so that builds of software compiled for an old version of the Linux kernel will continue to run.

In the case of Linux, it is easy to see why the core maintainers have kept this priority essentially at the top of their list. While the Linux Foundation is not directly funded by the sales of its software (as it is free open source software licensed under the GPL) it is paid by a group of Foundation members.

Among the listed benefits provided to corporate members are a variety of opportunities for large companies who can afford the higher tiers to directly convey their goals for the project and lobby using their annually renewing $500,000 (USD) membership as a means to secure prioritized consideration.

Companies such as Oracle depend on a stable ABI from version to version of the Linux kernel in order to ensure that the enterprise software products (in the case of Oracle, database servers) they've sold to governments and many of the world's Fortune 1000 companies will not stop functioning on the next update to the kernel.

How much better could things actually be if we abandoned the enterprise development model?

Next I will compare this enterprise development approach with non-enterprise development - projects such as OpenBSD, which do not hesitate to introduce ABI breaking changes to improve the codebase.

One of the most commonly cited pillars of the project's philosophy has long been its emphasis on clean, functional code. Any code which makes it into OpenBSD is subject to ongoing aggressive audits for deprecated or otherwise unmaintained code in order to reduce cruft and attack surface. Additionally, the project creator, Theo de Raadt, and his team of core developers engage in ongoing development of proactive mitigations for various attack classes, many of which are directly adopted by multi-platform userland applications as well as by other operating systems (Windows, Linux, and the other BSDs). Frequently, introducing new features (not just deprecating old ones) introduces new incompatibilities with previously functional binaries compiled for OpenBSD.

To prevent the sort of kernel memory bloat that has plagued so many other operating systems for years, the project enforces a hard ceiling on the number of lines of code that can ever be in ring 0 at a given time. Current estimates put the defect density of the Linux kernel at around 1 bug per 10,000 lines of code. Think of this in the context of the scope creep seen in the Linux kernel (which, if I recall correctly, is currently at around 26,000,000 lines of code) as well as the Windows NT kernel (500,000,000 lines of code). You quickly begin to understand how adding more and more functionality to the most privileged components of the operating system, without first removing old components, adds up to the drastic difference between these systems in the number of zero day exploits caught in the wild.
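Taking the figures quoted above at face value (they are rough estimates, not measurements), the expected number of latent bugs is simply the line count divided by 10,000. A small Python sketch of that arithmetic follows; the function name `expected_bugs` is illustrative.

```python
def expected_bugs(lines_of_code, lines_per_bug=10_000):
    """Estimate latent bugs assuming one bug per `lines_per_bug` lines."""
    return lines_of_code // lines_per_bug


# Line counts as quoted in the text above (rough, unverified figures).
linux_kernel = expected_bugs(26_000_000)
windows_nt = expected_bugs(500_000_000)

print(linux_kernel)  # 2600
print(windows_nt)    # 50000
```

Under the same assumed defect density, a codebase small enough to audit line by line would carry proportionally fewer latent bugs, which is the argument for capping the amount of privileged code.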

CVE Details: OpenBSD CVEs

cvedetails.com/vendor/97/Openbsd.html

CVE Details: Windows 10 CVEs

cvedetails.com/product/32238/Microsoft-Windows-10.html

CVE Details: Linux kernel & Debian CVEs

cvedetails.com/vendor/33/Linux.html

cvedetails.com/product/36/Debian-Debian-Linux.html

While many developers consider this continual movement of the system, and the requirement it places on them, to be onerous, the OpenBSD project considers a lack of documentation on any function of the system to be a bug. Since every change is documented, you have no excuse for not updating aside from incompetence. For the user this ultimately means you have a solid system which runs in a few places, where everything is exactly as simple as it should be and your security is ensured to the maximum extent possible.

As nice as backward compatibility is from a user convenience perspective, when it comes as a result of a static kernel Application Binary Interface the trade-off is essentially indistinguishable from increasing time-preference (in other words, declining concern for the future in comparison to the present). This can be seen in the continuous layering of hacky fixes, sloppily 'bolted on' feature additions (built in such a way that new additions don't conflict with existing APIs), and unremovable remnants of abandoned code segments left in place purely to ensure that applications continue to run. These issues not only cause their own problems but, in aggregate, cause a huge creep in the number of lines of code in privileged memory. Exploitability does not grow linearly with code size; it grows much faster (arguably exponentially), because the more code there is to exploit, the more malicious functionality can be chained together and made more harmful.

This also comes at the cost of reducing the opportunity to transition the system from an entrenched way of doing things to a newly improved set of conventions. Microsoft's most recent effort to introduce improved conventions, the Universal Windows Platform (UWP) binary format offered as an alternative to Win32/Win64, was almost completely rejected by developers, in part because it was optional and existing Win64 binaries would continue to function (although there were other reasons for its rejection as well).

Apple abandoned the enterprise development model long ago. What has this meant for Apple's Darwin/XNU (MacOS/iOS) systems across time?

The (non-enterprise) development model used by Apple for Darwin/XNU is essentially to prioritize modernizing APIs and deprecating old ones. If your application depends on an API which is old then you’re out of luck. As a developer living in userland, you are expected to keep pace with the kernel’s Application Binary Interface.

Darwin/XNU is based on a novel approach to kernel architecture shared only with DragonFly BSD - a hybrid / modular microkernel+monolithic kernel. The advantages of this approach are the increased extensibility of the kernel's functions and the ability to recover dynamically from software faults at runtime that would cause a monolithic kernel to crash (micro-services can crash and restart at OS runtime, whereas monolithic kernel modules inherently lack this ability).
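The crash-and-restart property described above can be sketched, by analogy only, in a few lines of Python. This is not kernel code and does not reflect how XNU is implemented: `ServiceCrash`, `flaky_service`, `run_monolithic`, and `run_supervised` are hypothetical names, and ordinary exceptions stand in for software faults.

```python
class ServiceCrash(Exception):
    """Raised by a hypothetical service to simulate a software fault."""


def flaky_service(attempt):
    """Fails on its first run, succeeds after a restart."""
    if attempt == 0:
        raise ServiceCrash("driver fault")
    return "ok"


def run_monolithic(service):
    """Monolithic style: a fault in any component propagates and halts everything."""
    return service(0)


def run_supervised(service, max_restarts=3):
    """Microkernel style: a supervisor restarts the failed service in isolation."""
    for attempt in range(max_restarts + 1):
        try:
            return service(attempt), attempt
        except ServiceCrash:
            continue  # restart the service; the rest of the system keeps running
    raise RuntimeError("service failed after all restarts")
```

Calling `run_monolithic(flaky_service)` lets the fault escape to the caller, while `run_supervised(flaky_service)` returns a result after one restart.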

Unknown to most people, Mac OS's use of the Darwin/XNU kernel (X is Not Unix) began with Mac OS X and is actually the byproduct of Apple's acquisition of NeXT Computer, which ran NeXTSTEP. Developers on the system are expected to modernize their applications and are actively put on notice when application-facing functionality will soon be deprecated. Years of this approach to operating system development, which actively breaks ABIs and deprecates user land APIs in favour of new and better ones, have allowed Apple to make leaps in the graphical shell, user land functionality, and the underlying kernel while its competitors have stayed stuck in the mud.

To understand the differences we've observed in the direction and speed of these operating systems' evolution across time, it helps to examine the market niches filled by the respective systems and how those niches result in a differing set of engineering considerations. Market pressures felt while building consumer operating systems differ substantially from those felt by companies building enterprise-oriented operating systems. For enterprise systems to remain successful in the marketplace they must support software produced under the enterprise development model, whereas a consumer system can remain successful while supporting only limited backward compatibility. These differing market pressures have an immediate impact on engineering considerations, and across time those considerations often result in entirely different technical trade-offs in the revisions seen throughout an operating system's lifecycle. The OS development paradigm of supporting backward compatibility imposes a severe tax on architectural innovation. Conversely, the market for consumer operating systems with limited backward compatibility offers an opportunity to continually overturn existing paradigms and accelerate the discovery of new ones.

Also unknown to most people is the fact that Darwin/XNU is actually open source:

github.com/apple/darwin-xnu

There is even an entire BSD distro based on MacOS's open source components:

github.com/PureDarwin/PureDarwin/wiki

Pictured: "Mac OS X Internals" by Amit Singh

In the case of NeXT Computer, kernel developers were hired from the FreeBSD project and given the mandate to build the system architecture they would build if they weren't constrained by version compatibility. What they ended up building was a hybrid monolithic-microkernel architecture. It balances the performance of ring-0-only monolithic kernels against benefits that had normally been seen only in slow microkernel architectures, which were constrained in latency and memory throughput by the cache reloading required when switching between privilege levels to execute the functions of their ring 1/ring 2 micro-services. This new approach was no more secure than a typical ring-0-only monolithic kernel, and far less secure than a multi-ring full microkernel. But unlike earlier speed-focused systems, which abandoned the microkernel concept entirely because of the limitations imposed by a multi-privilege-ring design, Darwin/XNU obtained the speed of a ring-0-only architecture while keeping the aforementioned benefits of a microkernel: the various functions are placed outside the primary kernel process in smaller micro-services, which themselves run in ring 0 alongside the primary hybrid kernel process.

Systems can be made radically different and substantially better across time when developers are not locked into the paradigm of an existing ABI. Without this limitation, changes can be made to the architecture of the underlying operating system unconstrained by the concern that you may anger a customer by breaking their custom enterprise software based on a decade-old build, or lose a corporate sponsor by forcing them to expend capital to update server-side software for your new kernel ABI.

Closing thoughts.

Computer system design reflects the business that a company is in. It isn't the case that after years of development Microsoft has ended up with a bad operating system because people at Microsoft are idiots; rather, it is the case that they're in the enterprise software business.

It isn't the case that Linux has not adopted the architectural advancements seen in Darwin/XNU because its developers aren't smart enough to implement those changes. The reason they have not adopted these changes is that they are in the business of ensuring that the people who pay them aren't made unhappy by a massive change to the kernel's architecture that necessitates a non-trivial expenditure of time and capital to modernize software products running on current ABIs just to keep them running on future ABIs.

Conversely, OpenBSD is able to break whatever functionality it wants because the team is simply in the business of building a simple and secure Unix system.

Apple is able to break whatever functionality they want because they are fundamentally not motivated by the fear that their improvements to the OS might result in someone's desktop Win32 application from 14 years ago refusing to start.

Computers are becoming less secure, and only a few systems are continually innovating in both the APIs they offer developers and the architecture of the underlying system itself. That's the way things are and it's not likely to change as long as two factors continue unchanged:

1) Linus Torvalds continues receiving his Linux Foundation salary paid for by the massive cheques its member organizations cut him in exchange for influence over the kernel's development.

2) Large corporations and government agencies who have made sizeable investments into custom enterprise applications necessary for their workforce continue to expect this software to run on any Windows system they purchase during the next 10-20 years.

Given that neither of these realities seems poised to change in the immediate future, our best hope of renewed innovation in software architecture is to embrace the companies and projects which are willing to introduce breaking changes into the kernel and corelibs when it means improving the system. Otherwise, efforts that use containers, lightweight virtualization, and binary wrappers to give companies reasonable backward compatibility for the applications that have become entrenched in their organizations will be the only way to break away from the stagnation of the current paradigm of enterprise operating system development. I may post in the future regarding my thoughts on how we could make better use of Docker-like app containerization with virtualization technologies such as those in the now discontinued QEMU/KVM-light project (by the Intel Open Source Technologies Center) to run legacy applications with near native performance and perfect forward compatibility without stagnating the development of the host operating system.

If you'd like to work with us or you have any specific comments, recommendations, or questions pertaining to any of the above material our contact information is as follows:

Arthur Rasmusson can be reached at:

t.me/dopefish on Telegram

twitter.com/arcvrarthur on Twitter

and by email at arthur@arccompute.com

&

Louis Castricato can be reached at:

t.me/matrixcs on Telegram

twitter.com/lcastricato on Twitter

and by email at lcastric@edu.uwaterloo.ca