How Graphics Processing Units Are Poised to Power the Next Wave of AI

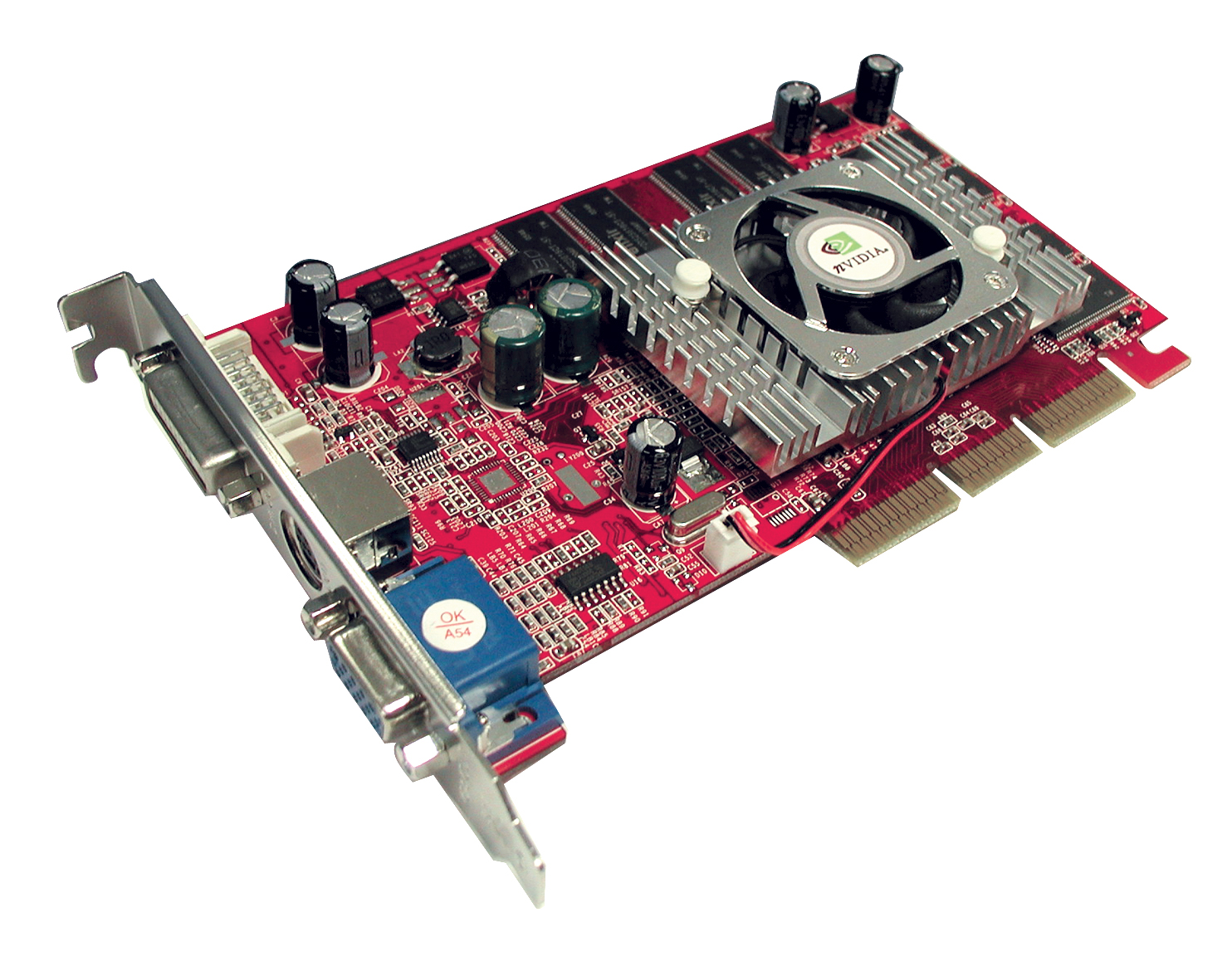

The field of artificial intelligence is changing rapidly, and at the core of this transformation lies the powerful technology of graphics cards. Originally designed to render stunning visuals in video games and multimedia applications, GPUs have become key enablers for complex machine learning algorithms and data processing tasks. Looking ahead, advances in GPU architecture and features hold great promise for driving the next generation of AI solutions, from natural language processing to computer vision.

As AI demands grow, so does the need for more powerful and efficient hardware. Graphics cards are becoming more specialized, with innovations that optimize parallel processing and accelerate deep learning workflows. Upcoming trends in GPU design will not only improve performance but also increase energy efficiency, making powerful AI more accessible to researchers and developers. Thanks to these advances, we are on the brink of a new era in which AI can reach unprecedented levels of complexity and utility, changing industries and daily life alike.

Advancements in GPU Tech

GPU technology is evolving quickly, propelled by the rising demands of artificial intelligence and machine learning applications. Innovations such as ray tracing and AI-driven enhancements are not merely boosting graphics quality but are also improving processing power. This shift from traditional raster graphics to sophisticated rendering techniques underscores the need for graphics processing units that can handle complex algorithms, making them essential for both gaming and professional workloads.

Another noteworthy advance in GPU technology is dedicated hardware for AI tasks. Manufacturers are building specialized processing units into their graphics cards specifically to accelerate machine learning. This specialization enables faster matrix calculations, which are fundamental to AI model training and inference, allowing more sophisticated AI applications to run efficiently on mainstream hardware.
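To see why matrix throughput matters so much, the sketch below counts the floating-point operations in a naive matrix multiplication. It assumes the common convention that each multiply-add counts as two FLOPs; the function names `matmul` and `matmul_flops` are illustrative and not taken from any library. Real GPU kernels tile and parallelize this loop, but the operation count is the same.

```python
def matmul(a, b):
    """Multiply matrices a (m x k) and b (k x n) given as nested lists.

    Returns the product and the number of floating-point operations performed,
    counting each multiply-add as two FLOPs.
    """
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    out = [[0.0] * n for _ in range(m)]
    flops = 0
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply and one add
                flops += 2
            out[i][j] = acc
    return out, flops


def matmul_flops(m, k, n):
    """Closed-form FLOP count for an (m x k) @ (k x n) product."""
    return 2 * m * k * n


# A hypothetical dense layer: batch of 64 inputs, 4096 features in and out.
print(matmul_flops(64, 4096, 4096))  # 2147483648, about 2.1 GFLOP per forward pass
```

Because the count grows with the product of all three dimensions, doubling a layer's input and output width quadruples the work, which is why hardware that speeds up matrix math pays off so directly for AI.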

Moreover, the trend toward multi-chip modules and higher memory bandwidth is laying the groundwork for remarkable performance. By using high-speed memory and interconnect technologies, future graphics cards will be able to process larger datasets and deliver higher throughput. This is especially important for tasks that require real-time data processing, such as self-driving cars and live video analysis, ensuring that GPUs will continue to play a crucial role in advancing artificial intelligence.

Performance Metrics for AI Systems

As AI applications become more sophisticated, measuring GPU performance has become a key area of interest for engineers. Conventional graphics metrics, such as frame rates and image quality, are inadequate for assessing GPUs in the context of artificial intelligence. Instead, metrics such as TOPS (trillions of operations per second) and FLOPS (floating-point operations per second) have gained importance, because they reflect a graphics card's ability to process large volumes of data and perform arithmetic quickly. These metrics help assess how well a GPU can handle machine learning workloads, which rely heavily on large matrix multiplications and data manipulation.
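A GPU's theoretical peak FLOPS can be estimated from its specifications with simple arithmetic: number of parallel arithmetic units × clock rate × operations per unit per cycle. The sketch below assumes a fused multiply-add counts as two FLOPs and uses made-up hardware numbers; real workloads reach only a fraction of peak, so the `utilization` factor is an assumption that would have to be measured.

```python
def peak_tflops(num_units, clock_ghz, flops_per_unit_per_cycle=2):
    """Theoretical peak throughput in TFLOPS.

    flops_per_unit_per_cycle=2 assumes one fused multiply-add per unit per cycle.
    """
    return num_units * clock_ghz * 1e9 * flops_per_unit_per_cycle / 1e12


def estimated_seconds(total_flops, peak, utilization=0.5):
    """Rough runtime for a workload, given peak TFLOPS and an assumed utilization."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return total_flops / (peak * 1e12 * utilization)


# Hypothetical GPU: 10,000 arithmetic units at 1.5 GHz.
peak = peak_tflops(10_000, 1.5)
print(peak)                           # 30.0
print(estimated_seconds(3e13, peak))  # 2.0 (30 TFLOP of work at 50% utilization)
```

The same formula, with integer operations in place of floating-point ones, gives a TOPS figure.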

Another important metric is memory bandwidth. AI workloads often require fast access to huge datasets held in memory. Higher memory bandwidth lets graphics cards move data more quickly, reducing the bottlenecks that lengthen training times for machine learning models. Together with memory capacity, which determines how much data can be stored and processed at once, bandwidth is crucial to the performance of deep learning workloads.
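One standard way to reason about when bandwidth, rather than raw compute, limits a workload is the roofline model (a common performance model, not one the text above names). Attainable throughput is the smaller of the chip's peak compute and its memory bandwidth multiplied by the workload's arithmetic intensity (FLOPs per byte moved). This sketch assumes float32 data, a hypothetical GPU with 30 TFLOPS and 1 TB/s, and that each operand is read or written from memory exactly once.

```python
def arithmetic_intensity(flops, bytes_moved):
    """FLOPs performed per byte transferred to or from memory."""
    return flops / bytes_moved


def attainable_tflops(peak_tflops, bandwidth_tb_s, intensity):
    """Roofline bound: TB/s times FLOP/byte gives TFLOP/s, capped at peak compute."""
    return min(peak_tflops, bandwidth_tb_s * intensity)


PEAK, BW = 30.0, 1.0  # hypothetical GPU: 30 TFLOPS, 1 TB/s

# Element-wise float32 vector add: 1 FLOP per element, 12 bytes (two reads, one write).
vec_add = arithmetic_intensity(1, 12)
# 4096 x 4096 float32 matmul: 2n^3 FLOPs, three n x n matrices moved once.
n = 4096
mm = arithmetic_intensity(2 * n**3, 3 * n * n * 4)

print(attainable_tflops(PEAK, BW, vec_add))  # ~0.083: memory-bound
print(attainable_tflops(PEAK, BW, mm))       # 30.0: compute-bound
```

Under these assumptions the vector add can use less than 0.3% of the chip's compute, so for bandwidth-bound steps like this one, faster memory helps far more than additional cores.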

Finally, a graphics card's architecture plays a key role in its efficiency for AI. Modern GPUs contain thousands of cores designed for parallel execution, which suits the highly parallel computations at the heart of AI algorithms. Architectures that add cores tailored specifically to deep learning can dramatically improve speed, shortening both training and inference times. By weighing these criteria, developers can choose a GPU that meets the demanding requirements of next-generation AI applications.

Future Directions in GPU Development

GPU development is poised to transform computational performance across many fields, notably artificial intelligence and machine learning. Manufacturers are increasing the core count of their graphics cards so they can handle parallel processing more efficiently. Higher core counts allow faster computation in workloads such as neural network training, making advanced AI applications more accessible to engineers and researchers.
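How much a higher core count helps depends on how much of a workload actually runs in parallel. Amdahl's law, a standard model not mentioned in the text above, captures this: with a parallel fraction p spread over N cores, the speedup is 1 / ((1 - p) + p / N). The parallel fractions below are assumptions chosen for illustration, not measurements of any real training job.

```python
def amdahl_speedup(parallel_fraction, num_cores):
    """Ideal speedup over one core when only parallel_fraction of the work parallelizes."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if num_cores < 1:
        raise ValueError("num_cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / num_cores)


# Illustrative (assumed) parallel fractions and core counts.
for p in (0.95, 0.999):
    for cores in (1_000, 10_000):
        print(f"p={p}, cores={cores}: {amdahl_speedup(p, cores):.1f}x")
```

With 95% parallel work, going from 1,000 to 10,000 cores barely moves the speedup, which stays just under the 20x ceiling set by the 5% serial part, whereas a 99.9% parallel workload keeps benefiting from the extra cores.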

Another notable trend is the integration of specialized hardware into GPUs. Technologies such as tensor cores and ray tracing cores are becoming standard in recent graphics cards: tensor cores accelerate AI workloads, while ray tracing cores deliver lifelike rendering. As GPUs become more efficient at both gaming and AI workloads, we can expect growing demand for hybrid architectures that switch smoothly between graphics and AI work.

Finally, advances in power efficiency and thermal management will play a vital role in the next phase of GPU development. As GPUs become more powerful, managing heat output and power consumption will be essential. Improvements in cooling technologies and materials, along with architectural refinements, will help produce more sustainable graphics cards. This trend will benefit not only gamers and digital content creators but also the data centers that rely on high-performance GPUs for machine learning and AI research, pushing the technology industry forward.