Softmax activation function neural networks for pattern

========================

Deeplearning4j supports custom activation functions. A linear activation function applies a linear transformation to the weighted sum of a neuron's inputs. Derivatives are available for the common neural network activation functions; the derivative of a sigmoid function, for instance, can be expressed via the function itself. In MatConvNet (Convolutional Neural Networks for MATLAB, by Andrea Vedaldi, Karel Lenc and Ankush Gupta), as elsewhere, the softmax activation function produces probability values as exp(inputs) / sum(exp(inputs)). For the softmax activation function we have $p(y = i \mid z) = e^{z_i} / \sum_j e^{z_j}$. Finally, a large part of Mario's thesis on unsupervised learning in artificial neural networks has been published and is available open access: self-modeling in Hopfield neural networks with a continuous activation function. A question addressed to McCaffrey: "I have a couple of questions about the implementation of softmax activation functions in the output layer of neural networks." See also: Time Series Classification from Scratch with Deep Neural Networks, and the softmax function in "How to implement a neural network, intermezzo 2". The values of the output nodes are calculated using a different activation function called softmax. Recall the update for an ordinary hidden-layer neural network with a sigmoid activation function. Convolutional neural networks from scratch: now let's take a look at convolutional neural networks (CNNs), the models people really use for classifying images. Among the activation functions used in ANNs, softmax is a smooth activation function. nnet: ops related to neural networks. Among the inputs to the activation function, the bias unit corresponds to the intercept term. Sigmoid takes a real value as input and outputs another value between 0 and 1. Further titles: ImageNet Classification with Deep Convolutional Neural Networks; computational networks and the activation function of a node.
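The exp(inputs) / sum(exp(inputs)) rule and the sigmoid derivative mentioned above can be sketched in a few lines of plain Python. This is a minimal illustration, not code from any of the libraries named here; the function names `softmax`, `sigmoid` and `sigmoid_derivative` are hypothetical, and subtracting the maximum input is a common stabilisation trick that is assumed rather than taken from the text.

```python
import math

def softmax(inputs):
    """Return exp(x_i) / sum_j exp(x_j) for a list of real-valued inputs."""
    # Subtracting the maximum does not change the result (it cancels in the
    # ratio) but keeps exp() from overflowing on large inputs.
    shift = max(inputs)
    exps = [math.exp(x - shift) for x in inputs]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(x):
    """Map a real value to the open interval (0, 1)."""
    # Plain form; very large negative x would overflow exp(-x).
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_derivative(x):
    """Derivative expressed via the function itself: s * (1 - s)."""
    s = sigmoid(x)
    return s * (1.0 - s)

probs = softmax([1.0, 2.0, 3.0])
```

Here `probs` is a list of three positive values that sum to 1, with the largest input receiving the largest probability.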

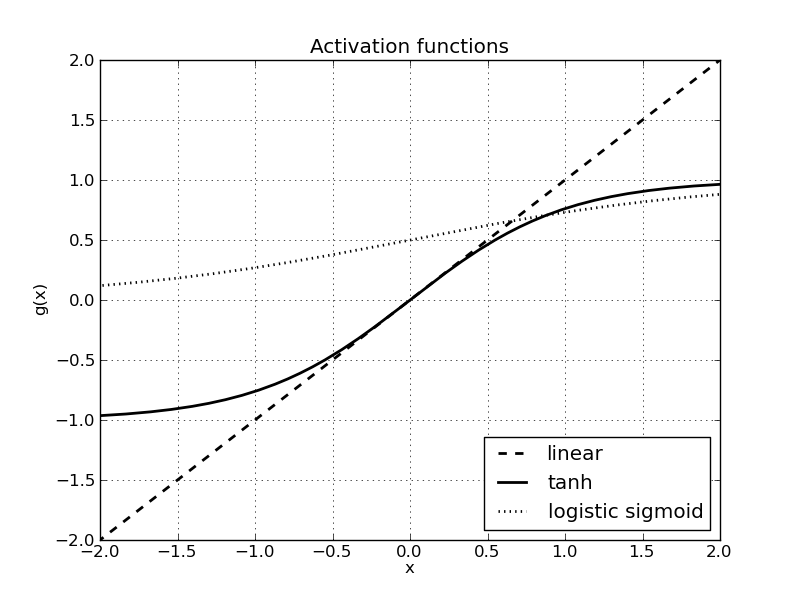

Other topics that come up alongside softmax include the vanilla LSTM network and semi-supervised classification with variational autoencoders and deep neural networks. We also need to pick an activation function for our hidden layer. When it comes to classification, we use log loss applied to the softmax activation at the output of the network. The softmax activation function in a neural network layer transforms the vectorized input data into probabilities in the output data. The Gaussian RBF is another example of an activation function, next to the softmax function and the sigmoid function. Artificial neural networks are also used in management. To use the softmax function in neural networks, we need to compute its derivative. Here we summarize several commonly used activation functions, such as sigmoid, tanh, ReLU, softmax and so forth, as well as their merits and drawbacks. See also the guide to sequence tagging with neural networks.
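As a rough sketch of log loss applied to a softmax output, the snippet below computes the loss for one example and its gradient with respect to the pre-softmax inputs. The names `log_loss` and `log_loss_grad` and the use of an integer class index as the target are assumptions made for illustration. The gradient uses the standard identity that, for this loss, the derivative with respect to input i is the predicted probability minus 1 for the target class and the predicted probability otherwise.

```python
import math

def softmax(z):
    """Numerically stabilised softmax over a list of real values."""
    shift = max(z)
    exps = [math.exp(v - shift) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def log_loss(z, target):
    """Negative log-probability of class index `target` under softmax(z)."""
    return -math.log(softmax(z)[target])

def log_loss_grad(z, target):
    """Gradient of log_loss with respect to z: softmax(z) minus one-hot(target)."""
    p = softmax(z)
    return [p_i - (1.0 if i == target else 0.0) for i, p_i in enumerate(p)]

z = [0.5, -1.0, 2.0]   # hypothetical pre-softmax outputs of a network
loss = log_loss(z, target=2)
grad = log_loss_grad(z, target=2)
```

The gradient entries sum to zero, and only the entry for the target class is negative, so a gradient step raises the target's input and lowers the others.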

The regular softmax function converts a normalized embedding into probabilities, and the training speed for models with softmax output layers quickly decreases as the vocabulary size grows. A clean implementation of feed-forward neural networks helps here; in this post we will implement a simple 3-layer neural network from scratch. The purpose of the softmax activation function is to make the outputs sum to 1. The derivative of the softmax activation function is $\partial p_i / \partial z_j = p_i (\delta_{ij} - p_j)$, where $p_i = e^{z_i} / \sum_k e^{z_k}$. See also: neural network activation functions.
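The derivative of softmax is a full matrix, because every output depends on every input. A minimal sketch, assuming the standard result that the entry for output i and input j equals p_i * (1 - p_i) when i = j and -p_i * p_j otherwise; the helper name `softmax_jacobian` is hypothetical.

```python
import math

def softmax(z):
    """Numerically stabilised softmax over a list of real values."""
    shift = max(z)
    exps = [math.exp(v - shift) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_jacobian(z):
    """Matrix J with J[i][j] = d softmax(z)[i] / d z[j]."""
    p = softmax(z)
    n = len(p)
    return [
        [p[i] * ((1.0 if i == j else 0.0) - p[j]) for j in range(n)]
        for i in range(n)
    ]

jac = softmax_jacobian([0.0, 0.0])
```

Each row of the matrix sums to zero, which reflects the fact that the outputs always sum to 1: raising one probability must lower the others by the same total amount.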