Private Mobile 1.3

PyTorch continues to gain momentum because of its focus on meeting the needs of researchers, its streamlined workflow for production use, and most of all because of the enthusiastic support it has received from the AI community. PyTorch citations in papers on arXiv grew 194 percent in the first half of 2019 alone, as noted by O’Reilly, and the number of contributors to the platform has grown more than 50 percent over the last year, to nearly 1,200. Facebook, Microsoft, Uber, and other organizations across industries are increasingly using it as the foundation for their most important machine learning (ML) research and production workloads.

We are now advancing the platform further with the release of PyTorch 1.3, which includes experimental support for features such as seamless model deployment to mobile devices, model quantization for better performance at inference time, and front-end improvements, like the ability to name tensors and create clearer code with less need for inline comments. We’re also launching a number of additional tools and libraries to support model interpretability and bringing multimodal research to production.

Additionally, we’ve collaborated with Google and Salesforce to add broad support for Cloud Tensor Processing Units, providing a significantly accelerated option for training large-scale deep neural networks. Alibaba Cloud also joins Amazon Web Services, Microsoft Azure, and Google Cloud as supported cloud platforms for PyTorch users. You can get started now at pytorch.org.

The 1.3 release of PyTorch brings significant new features, including experimental support for mobile device deployment, eager mode quantization at 8-bit integer, and the ability to name tensors. With each of these enhancements, we look forward to additional contributions and improvements from the PyTorch community.

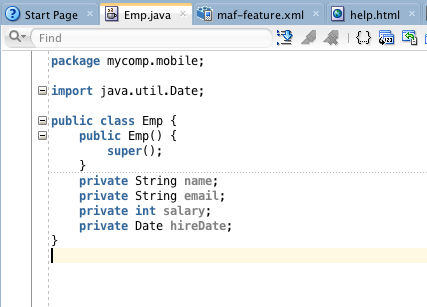

Cornell University’s Sasha Rush has argued that, despite its ubiquity in deep learning, the traditional implementation of tensors has significant shortcomings, such as exposing private dimensions, broadcasting based on absolute position, and keeping type information in documentation. He proposed named tensors as an alternative approach.

Today, we name and access dimensions by comment:

# Tensor[N, C, H, W]

images = torch.randn(32, 3, 56, 56)

images.sum(dim=1)

images.select(dim=1, index=0)

But naming explicitly leads to more readable and maintainable code:

NCHW = ['N', 'C', 'H', 'W']

images = torch.randn(32, 3, 56, 56, names=NCHW)

images.sum('C')

images.select('C', index=0)
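To make the benefit concrete, here is a small sketch of a few more named-tensor operations. It assumes a PyTorch build in which the experimental named tensor API is available; the variable names are illustrative only.

```python
import warnings

import torch

# Named tensors are experimental and emit a warning on use; silence it for this sketch.
warnings.filterwarnings("ignore", message="Named tensors")

images = torch.randn(32, 3, 56, 56, names=("N", "C", "H", "W"))

# Reducing over a named dimension removes that name from the result.
channel_sum = images.sum("C")
print(channel_sum.names)  # ('N', 'H', 'W')

# Reorder dimensions by name instead of by position (NCHW -> NHWC).
nhwc = images.align_to("N", "H", "W", "C")
print(nhwc.shape)  # torch.Size([32, 56, 56, 3])

# Drop the names again when passing the tensor to code that does not support them.
plain = images.rename(None)
print(plain.names)  # (None, None, None, None)
```

Because every operation refers to a dimension by name, the code keeps working if the memory layout changes, which is not true of the positional version.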

It’s important to make efficient use of both server-side and on-device compute resources when developing ML applications. To support more efficient deployment on servers and edge devices, PyTorch 1.3 now supports 8-bit model quantization using the familiar eager mode Python API. Quantization refers to techniques used to perform computation and storage at reduced precision, such as 8-bit integer. This currently experimental feature includes support for post-training quantization, dynamic quantization, and quantization-aware training. It leverages the FBGEMM and QNNPACK state-of-the-art quantized kernel back ends, for x86 and ARM CPUs, respectively, which are integrated with PyTorch and now share a common API.

To learn more about the design and architecture, check out the API docs here, and get started with any of the supported techniques using the tutorials available here.
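To give a feel for what computing and storing at 8-bit precision means, the following is a minimal pure-Python sketch of affine (scale and zero point) quantization. It illustrates the general idea only and is not PyTorch's implementation or API; the function names and sample weights are made up for this example.

```python
def choose_qparams(values, qmin=0, qmax=255):
    """Pick a scale and zero point that map the value range onto [qmin, qmax]."""
    # Extend the range to include 0.0 so that zero is represented exactly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for an all-zero input
    zero_point = int(round(qmin - lo / scale))
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=0, qmax=255):
    """Map floats to integers in [qmin, qmax]."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(quantized, scale, zero_point):
    """Map the integers back to approximate floats."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]  # hypothetical float32 weights
scale, zero_point = choose_qparams(weights)
q = quantize(weights, scale, zero_point)
restored = dequantize(q, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)          # integers that each fit in one byte
print(max_error)  # rounding error, bounded by the scale
```

Each value now needs one byte instead of four, at the cost of a rounding error no larger than the scale. Quantization-aware training exists to let the model adapt to exactly this kind of error.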

Running ML on edge devices is growing in importance as applications continue to demand lower latency. It is also a foundational element for privacy-preserving techniques such as federated learning. To enable more efficient on-device ML, PyTorch 1.3 now supports an end-to-end workflow from Python to deployment on iOS and Android.

This is an early, experimental release, optimized for end-to-end development. Coming releases will focus on:

Learn more or get started on Android or iOS here.
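The Python half of that workflow might look like the sketch below, which assumes the model is exported as TorchScript so that it can later be loaded by the mobile runtime. `TinyClassifier` and the file name are hypothetical placeholders, and the on-device loading code is not shown.

```python
import os
import tempfile

import torch


class TinyClassifier(torch.nn.Module):
    """Hypothetical stand-in for a trained model."""

    def __init__(self):
        super().__init__()
        self.linear = torch.nn.Linear(4, 2)

    def forward(self, x):
        return torch.softmax(self.linear(x), dim=1)


model = TinyClassifier().eval()
example_input = torch.rand(1, 4)

# Trace the model with a representative input to obtain a TorchScript module.
traced = torch.jit.trace(model, example_input)

# Serialize it; this file is what would be bundled with the mobile app.
model_path = os.path.join(tempfile.mkdtemp(), "tiny_classifier.pt")
traced.save(model_path)

# Sanity check: the reloaded module reproduces the original output.
reloaded = torch.jit.load(model_path)
same_output = torch.allclose(model(example_input), reloaded(example_input))
print(same_output)
```

Tracing records the operations executed for the example input, so models with data-dependent control flow would need `torch.jit.script` instead.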

As models become ever more complex, it is increasingly important to develop new methods for model interpretability. To help address this need, we’re launching Captum, a tool to help developers working in PyTorch understand why their model generates a specific output. Captum provides state-of-the-art tools to understand how specific neurons and layers affect the predictions made by a model. Captum’s algorithms include integrated gradients, conductance, SmoothGrad and VarGrad, and DeepLift.

The example below shows how to apply model interpretability algorithms on a pretrained ResNet model and then visualize the attributions for each pixel by overlaying them on the image.

noise_tunnel = NoiseTunnel(integrated_gradients)

attributions_ig_nt, delta = noise_tunnel.attribute(input, n_samples=10, nt_type='smoothgrad_sq', target=pred_label_idx)

_ = viz.visualize_image_attr_multiple(["original_image", "heat_map"],
                                      ["all", "positive"],
                                      np.transpose(attributions_ig_nt.squeeze().cpu().detach().numpy(), (1,2,0)),
                                      np.transpose(transformed_img.squeeze().cpu().detach().numpy(), (1,2,0)),
                                      cmap=default_cmap,
                                      show_colorbar=True)

Learn more about Captum at captum.ai.
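For readers curious about what integrated gradients actually computes, here is a minimal sketch written directly against PyTorch autograd. It is not Captum's implementation: the helper, the toy model, and the all-zeros baseline are assumptions chosen to keep the example small.

```python
import torch


def integrated_gradients(f, x, baseline, steps=50):
    """Approximate integrated gradients with a midpoint Riemann sum."""
    alphas = (torch.arange(steps, dtype=x.dtype) + 0.5) / steps
    total_grad = torch.zeros_like(x)
    for alpha in alphas:
        # Point on the straight-line path from the baseline to the input.
        point = (baseline + alpha * (x - baseline)).detach().requires_grad_(True)
        (grad,) = torch.autograd.grad(f(point), point)
        total_grad += grad
    # Scale the average gradient by the distance travelled along each feature.
    return (x - baseline) * total_grad / steps


def toy_model(v):
    """Hypothetical scalar-valued model used only for illustration."""
    return (v ** 2).sum() + 3.0 * v[0]


x = torch.tensor([1.0, 2.0, -1.0])
baseline = torch.zeros_like(x)
attributions = integrated_gradients(toy_model, x, baseline)

print(attributions)  # close to [4., 4., 1.]
print(attributions.sum(), toy_model(x) - toy_model(baseline))  # both close to 9
```

The attributions sum to the difference between the model output at the input and at the baseline, which is the completeness property that makes the method attractive.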

Practical applications of ML via cloud-based or machine-learning-as-a-service (MLaaS) platforms pose a range of security and privacy challenges. In particular, users of these platforms may not want or be able to share unencrypted data, which prevents them from taking full advantage of ML tools. To address these challenges, the ML community is exploring a number of technical approaches, at various levels of maturity. These include homomorphic encryption, secure multiparty computation, trusted execution environments, on-device computation, and differential privacy.

To provide a better understanding of how some of these technologies can be applied, we are releasing CrypTen, a new community-based research platform for taking the field of privacy-preserving ML forward. Learn more about CrypTen here. It is available on GitHub here.
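As one illustration of these ideas, the sketch below shows additive secret sharing, a basic building block of secure multiparty computation, in plain Python. It does not use CrypTen's API; the modulus, the three-party setup, and the function names are assumptions made for the example.

```python
import secrets

MODULUS = 2 ** 64  # all arithmetic is done modulo this value


def share(value, n_parties=3):
    """Split a value into random shares that sum to it modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def reconstruct(shares):
    """Recombine all shares to recover the value."""
    return sum(shares) % MODULUS


a_shares = share(25)
b_shares = share(17)

# Each party adds the two shares it holds, without ever seeing 25 or 17.
sum_shares = [(a + b) % MODULUS for a, b in zip(a_shares, b_shares)]

total = reconstruct(sum_shares)
print(total)  # 42
```

Any single share is a uniformly random number and reveals nothing on its own, yet the parties can still jointly compute a sum.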

Digital content is often made up of several modalities, such as text, images, audio, and video. For example, a single public post might contain an image, body text, a title, a video, and a landing page. Even one particular component may have more than one modality, such as a video that contains both visual and audio signals, or a landing page that is composed of images, text, and HTML sources.

The ecosystem of tools and libraries that work with PyTorch offers enhanced ways to address the challenges of building multimodal ML systems. Here are some of the latest libraries launching today:

Object detection and segmentation are used for tasks ranging from autonomous vehicles to content understanding for platform integrity. To advance this work, Facebook AI Research (FAIR) is releasing Detectron2, an object detection library now implemented in PyTorch. Detectron2 provides support for the latest models and tasks, increased flexibility to aid computer vision research, and improvements in maintainability and scalability to support production use cases.

Detectron2 is available here and you can learn more here.

Language translation and audio processing are critical components in systems and applications such as search, translation, speech, and assistants. There has been tremendous progress in these fields recently thanks to the development of new architectures like transformers, as well as large-scale pretraining methods. We’ve extended fairseq, a framework for sequence-to-sequence applications such as language translation, to include support for end-to-end learning for speech and audio recognition tasks. These extensions to fairseq enable faster exploration and prototyping of new speech research ideas while offering a clear path to production.

Cloud providers such as Amazon Web Services, Microsoft Azure, and Google Cloud provide extensive support for anyone looking to develop ML on PyTorch and deploy in production. We’re excited to share the general availability of Google Cloud TPU support and a newly launched integration with Alibaba Cloud. We’re also expanding hardware ecosystem support.

As an open source, community-driven project, PyTorch benefits from a wide range of contributors bringing new capabilities to the ecosystem. Here are some recent examples:

We recently held the first online Global PyTorch Summer Hackathon, where researchers and developers around the world were invited to build innovative new projects with PyTorch. Nearly 1,500 developers participated, submitting projects ranging from livestock disease detection to AI-powered financial assistants. The winning projects were:

Visit pytorch.org to learn more and get started with PyTorch 1.3 and the latest libraries and ecosystem projects. We look forward to the contributions, exciting research advancements, and real-world applications that the community builds with PyTorch.

We’d like to thank the entire PyTorch team and the community for all their contributions to this work.

Firefox Browser fast & private, version 90.1.3 (arm64), and 74 other versions

Firefox is one of the most popular desktop and mobile browsers out there, with multiple tools like bookmarks, incognito browsing, tabs, add-ons and the option to log in and sync data to other devices.

For more information on downloading Firefox Browser fast & private to your phone, check out our guide: how to install APK files.

Firefox can now save, manage, and auto-fill credit card information for you, making shopping on Firefox ever more convenient.

Back/Forward Cache is now enabled for webpages that use unload event listeners, making the back and forward navigations faster on these pages.

Users who need to import user Android certificates can do so through the secret settings.
