Tutorial: Deploying the Original NovelAI

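Update by sanae, 22/10/08: I have deployed the original web UI. Just click Log In to use it. The number of GPUs is limited, so with many users it may crash or become very slow. Please be patient, and press F5 if it stops responding.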

Update 22/10/08: replaced the expired CowTransfer (奶牛快传) links with OneDrive links.

Hardware requirements:

An x86 machine running Linux with an NVIDIA GPU that has at least 11 GB of VRAM.

Software requirements:

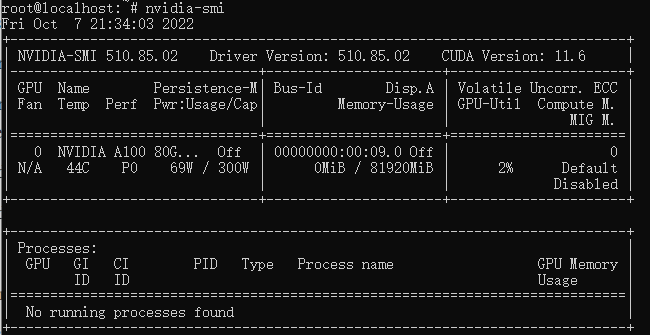

NVIDIA driver (CUDA 11.6 Toolkit)

Docker 19+

nvidia-container-toolkit
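To check the Docker 19+ requirement without reading version strings by eye, the output of `docker --version` can be parsed. This is a minimal sketch that assumes the usual `Docker version X.Y.Z, build ...` format; the function names are hypothetical.

```python
import re
import shutil
import subprocess


def docker_major_version(version_text: str) -> int:
    """Extract the major version from `docker --version` output,
    e.g. 'Docker version 20.10.17, build 100c701' -> 20 (format assumed)."""
    match = re.search(r"version\s+(\d+)\.", version_text)
    if match is None:
        raise ValueError(f"unrecognised docker version string: {version_text!r}")
    return int(match.group(1))


def meets_docker_requirement(version_text: str, minimum: int = 19) -> bool:
    """True if the reported Docker major version is at least `minimum`."""
    return docker_major_version(version_text) >= minimum


if __name__ == "__main__" and shutil.which("docker"):
    out = subprocess.run(["docker", "--version"], capture_output=True, text=True).stdout
    print(out.strip(), "->", "OK" if meets_docker_requirement(out) else "too old")
```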

Preparation:

1. Install Docker

This command can be slow from mainland China; you can look up a domestic mirror and install from there instead.

curl -fsSL https://get.docker.com | bash

2. Install nvidia-container-toolkit

Ubuntu, Debian:

distribution=$(. /etc/os-release;echo $ID$VERSION_ID)

curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -

curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

sudo apt-get update && sudo apt-get install -y nvidia-container-toolkit

sudo systemctl restart docker

CentOS/RHEL:

distribution=$(. /etc/os-release;echo $ID$VERSION_ID)

curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.repo | sudo tee /etc/yum.repos.d/nvidia-docker.repo

sudo yum install -y nvidia-container-toolkit

sudo systemctl restart docker
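Both command sets above build a `distribution` string as `$ID$VERSION_ID` from `/etc/os-release` and then fetch a distro-specific repository file. The sketch below re-expresses that in Python so you can see which URL your machine will request. It only covers the distributions named in this guide, and the helper names are hypothetical.

```python
def parse_os_release(text: str) -> dict:
    """Parse /etc/os-release KEY=VALUE lines, stripping quotes the way
    `. /etc/os-release` would for simple values."""
    fields = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key.strip()] = value.strip().strip('"').strip("'")
    return fields


def nvidia_docker_repo_url(os_release_text: str) -> str:
    """Mirror `distribution=$(. /etc/os-release;echo $ID$VERSION_ID)` and pick
    the .list (apt) or .repo (yum) file used by the commands above."""
    fields = parse_os_release(os_release_text)
    distribution = fields.get("ID", "") + fields.get("VERSION_ID", "")
    base = f"https://nvidia.github.io/nvidia-docker/{distribution}"
    if fields.get("ID") in ("ubuntu", "debian"):
        return f"{base}/nvidia-docker.list"
    if fields.get("ID") in ("centos", "rhel"):
        return f"{base}/nvidia-docker.repo"
    raise ValueError(f"distribution not covered by this guide: {distribution!r}")


if __name__ == "__main__":
    try:
        with open("/etc/os-release", encoding="utf-8") as f:
            print(nvidia_docker_repo_url(f.read()))
    except (OSError, ValueError) as exc:
        print("cannot determine repo URL:", exc)
```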

3. Install the GPU driver

Skipped here.

4. Confirm the GPU driver is installed: `nvidia-smi` should list your GPU.
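Beyond seeing the GPU in `nvidia-smi`, it helps to confirm it meets the 11 GB VRAM requirement. This sketch uses the standard `nvidia-smi --query-gpu=... --format=csv,noheader,nounits` query and parses its output. The 11000 MiB threshold is an assumption, chosen so that cards sold as "11 GB" (which report slightly different MiB totals) still pass; the function names are hypothetical.

```python
import shutil
import subprocess

# Prints one line per GPU in the form "<GPU name>, <total memory in MiB>".
QUERY = ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"]


def parse_gpus(csv_text: str) -> list:
    """Turn the query output into (name, total_MiB) tuples."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, _, mem = line.rpartition(",")
        gpus.append((name.strip(), int(mem.strip())))
    return gpus


def has_enough_vram(csv_text: str, min_mib: int = 11000) -> bool:
    """True if at least one GPU reports `min_mib` MiB or more (threshold assumed)."""
    return any(mem >= min_mib for _, mem in parse_gpus(csv_text))


if __name__ == "__main__" and shutil.which("nvidia-smi"):
    result = subprocess.run(QUERY, capture_output=True, text=True)
    if result.returncode != 0:
        print("nvidia-smi failed:", result.stderr.strip())
    else:
        for name, mem in parse_gpus(result.stdout):
            print(f"{name}: {mem} MiB")
        print("enough VRAM" if has_enough_vram(result.stdout) else "less than ~11 GB VRAM")
```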

5. Confirm nvidia-container-toolkit was installed successfully

docker run --help | grep -i gpus
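The same check in Python, as a sketch: it looks for the `--gpus` option in the help text (slightly stricter than `grep -i gpus`). Note that `--gpus` is part of the Docker CLI itself since 19.03, so this mainly shows the CLI accepts the flag; actually starting a container with `--gpus all`, as step 10 does, is the stronger test that the toolkit works. The function name is hypothetical.

```python
import shutil
import subprocess


def supports_gpus_flag(help_text: str) -> bool:
    """Rough equivalent of `docker run --help | grep -i gpus`:
    passes if the help text mentions the --gpus option."""
    return "--gpus" in help_text.lower()


if __name__ == "__main__" and shutil.which("docker"):
    result = subprocess.run(["docker", "run", "--help"],
                            capture_output=True, text=True)
    # Help may be printed to stdout or stderr, so search both.
    text = result.stdout + result.stderr
    print("--gpus available" if supports_gpus_flag(text) else "--gpus missing")
```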

6. Download the NovelAI model files

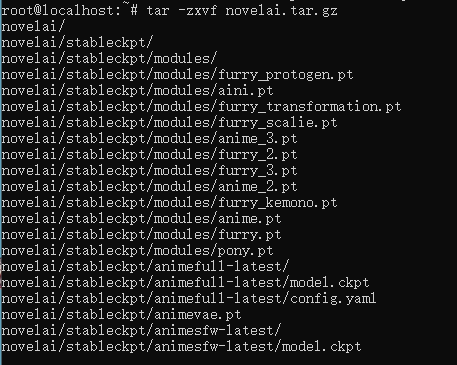

7. Extract the NovelAI model files

tar -zxvf novelai.tar.gz
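After extracting, it is worth checking that the paths the `docker run` command in step 10 mounts under `/root` actually exist, since a wrong folder only shows up later as a container error. This sketch extracts the archive with Python's `tarfile` and lists missing paths. The expected paths are taken from the MODEL_PATH, MODULE_PATH and VAE_PATH values in step 10; that the archive contains both `animesfw-latest` and `animefull-latest` is an assumption based on step 10's NSFW note. Function names are hypothetical.

```python
import os
import tarfile

# Paths relative to the extracted novelai folder, taken from the
# MODEL_PATH / MODULE_PATH / VAE_PATH values used in step 10.
EXPECTED = [
    "stableckpt/animesfw-latest",
    "stableckpt/animefull-latest",
    "stableckpt/modules",
    "stableckpt/animevae.pt",
]


def extract(archive: str, dest: str) -> None:
    """Equivalent of running `tar -zxvf novelai.tar.gz` inside `dest`."""
    with tarfile.open(archive, "r:gz") as tar:
        tar.extractall(dest)


def missing_paths(novelai_dir: str) -> list:
    """Return the expected model paths that do not exist under novelai_dir."""
    return [p for p in EXPECTED
            if not os.path.exists(os.path.join(novelai_dir, p))]
```

For example, after extracting into `/root`, `missing_paths("/root/novelai")` should return an empty list.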

8. Download the Docker image files

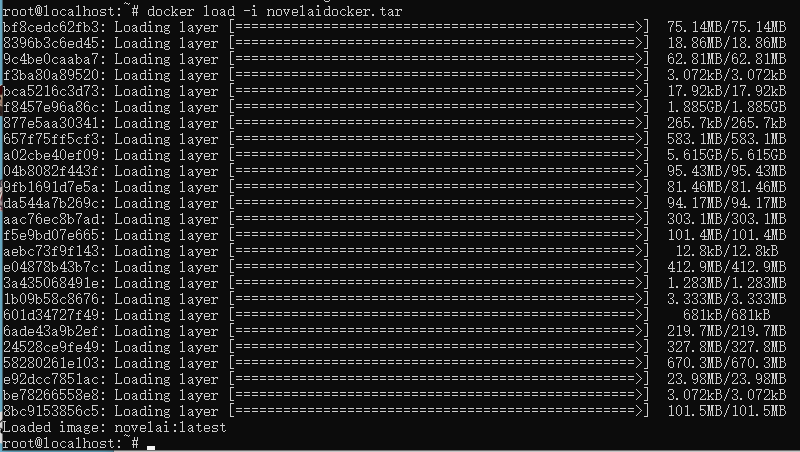

9. Import the Docker image

docker load -i novelaidocker.tar
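`docker load` prints a `Loaded image: <name>:<tag>` line for each tagged image it imports, so you can confirm that the `novelai:latest` tag used in step 10 is now present. A minimal sketch; the helper name is hypothetical and it assumes `novelaidocker.tar` is in the current directory.

```python
import os
import re
import shutil
import subprocess


def loaded_images(load_output: str) -> list:
    """Collect image references from `docker load` output
    ('Loaded image: <name>:<tag>' lines; untagged 'Loaded image ID:' lines are skipped)."""
    return re.findall(r"^Loaded image: (\S+)$", load_output, flags=re.MULTILINE)


if __name__ == "__main__" and shutil.which("docker") and os.path.exists("novelaidocker.tar"):
    result = subprocess.run(["docker", "load", "-i", "novelaidocker.tar"],
                            capture_output=True, text=True)
    images = loaded_images(result.stdout)
    print(images)
    if "novelai:latest" not in images:
        print("novelai:latest not found; check the tar file")
```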

10. Run the Docker container

(If you want NSFW content to be possible, change -e MODEL_PATH="/root/stableckpt/animesfw-latest" to -e MODEL_PATH="/root/stableckpt/animefull-latest".)

Replace /path/to/novelai with the actual location of the novelai folder you extracted from the NovelAI model files, e.g. /root/novelai.

docker run --gpus all -d -p 80:80 -v /path/to/novelai:/root \
  -e DTYPE="float32" -e AMP="1" -e MODEL="stable-diffusion" -e DEV="True" -e CLIP_CONTEXTS=3 \
  -e MODEL_PATH="/root/stableckpt/animesfw-latest" -e MODULE_PATH="/root/stableckpt/modules" \
  -e TRANSFORMERS_CACHE="/root/transformer_cache" -e SENTRY_URL="" -e ENABLE_EMA="1" \
  -e VAE_PATH="/root/stableckpt/animevae.pt" -e BASEDFORMER="1" -e PENULTIMATE="1" \
  novelai:latest gunicorn main:app --workers 1 --worker-class uvicorn.workers.UvicornWorker --bind 0.0.0.0:80

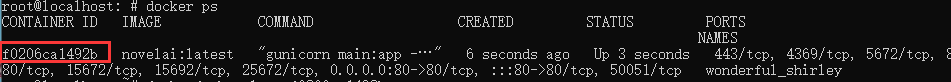
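The long `docker run` command is easy to break when editing by hand. As a sketch, the same arguments can be assembled from a dictionary so that the only choices left are the host folder, the SFW/NSFW model and the host port. The environment values are copied from the command above; `docker_run_args`, its `nsfw` and `port` parameters are hypothetical conveniences, not part of the original setup.

```python
import shlex

# Environment variables copied from the docker run command in step 10.
BASE_ENV = {
    "DTYPE": "float32",
    "AMP": "1",
    "MODEL": "stable-diffusion",
    "DEV": "True",
    "CLIP_CONTEXTS": "3",
    "MODULE_PATH": "/root/stableckpt/modules",
    "TRANSFORMERS_CACHE": "/root/transformer_cache",
    "SENTRY_URL": "",
    "ENABLE_EMA": "1",
    "VAE_PATH": "/root/stableckpt/animevae.pt",
    "BASEDFORMER": "1",
    "PENULTIMATE": "1",
}


def docker_run_args(novelai_dir: str, nsfw: bool = False, port: int = 80) -> list:
    """Build the argv for step 10; `nsfw` swaps animesfw-latest for animefull-latest."""
    model = "animefull-latest" if nsfw else "animesfw-latest"
    env = dict(BASE_ENV, MODEL_PATH=f"/root/stableckpt/{model}")
    args = ["docker", "run", "--gpus", "all", "-d",
            "-p", f"{port}:80", "-v", f"{novelai_dir}:/root"]
    for key, value in env.items():
        args += ["-e", f"{key}={value}"]
    args += ["novelai:latest", "gunicorn", "main:app", "--workers", "1",
             "--worker-class", "uvicorn.workers.UvicornWorker",
             "--bind", "0.0.0.0:80"]
    return args


if __name__ == "__main__":
    # Print a copy-pasteable command; pass the list to subprocess.run to execute it.
    print(shlex.join(docker_run_args("/root/novelai")))
```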

11. Check the container status

Find the container ID:

docker ps

docker logs [Container ID]

Once "Application startup complete." appears in the logs, the service is ready.

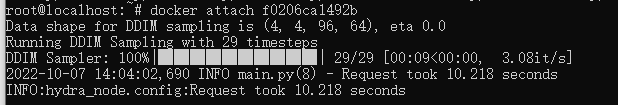

docker attach [Container ID]

After attaching to the container, you can watch the real-time progress of the current generation task.
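Model loading takes a while, so rather than re-running `docker logs` by hand you can poll it until the "Application startup complete." line appears. A minimal sketch: the 600-second timeout and 5-second interval are assumed defaults, and the function names are hypothetical. Both stdout and stderr are captured because server logs are often written to stderr.

```python
import subprocess
import time

READY_MARKER = "Application startup complete."


def fetch_logs(container_id: str) -> str:
    """Return `docker logs <id>` output, combining stdout and stderr."""
    result = subprocess.run(["docker", "logs", container_id],
                            capture_output=True, text=True)
    return result.stdout + result.stderr


def wait_until_ready(get_logs, timeout: float = 600, interval: float = 5) -> bool:
    """Poll get_logs() until READY_MARKER appears or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        if READY_MARKER in get_logs():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Usage: `wait_until_ready(lambda: fetch_logs("<container ID from docker ps>"))`. Passing the log source in as a function keeps the polling logic testable without a running container.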

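12. Call the API

The following is reference code. For details, see the leaked frontend and backend projects, in particular sd-private\hydra-node-http\main.py.

Starting the prompt with masterpiece, best quality, corresponds to the Add Quality Tags option in the original web UI. Removing it is not recommended; just append your own prompt after it.

The uc field corresponds to Undesired Content in the web UI; keeping the default is recommended.

sampler is the sampling method. Options: plms/ddim/k_euler/k_euler_ancestral/k_heun/k_dpm_2/k_dpm_2_ancestral/k_lms

seed is the random seed. Pick a random integer yourself each time, otherwise you will keep getting the same result.

n_samples is the number of images to generate.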

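import base64
import random

import requests

# Replace with the address of your own server (port 80, as mapped by docker run)
endpoint = "http://10.10.12.67/generate"

data = {
    # "masterpiece, best quality, " = Add Quality Tags; append your own prompt after it
    "prompt": "masterpiece, best quality, brown red hair,blue eyes,twin tails,holding cat",
    # randint includes both ends; stay within the 32-bit unsigned range (assumed limit)
    "seed": random.randint(0, 2**32 - 1),
    "n_samples": 1,
    "sampler": "ddim",
    "width": 512,
    "height": 768,
    "scale": 11,
    "steps": 28,
    "uc": "lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry",
}

# Generation can be slow; the 600 s timeout is an arbitrary safety limit
resp = requests.post(endpoint, json=data, timeout=600)
resp.raise_for_status()
output = resp.json()["output"]  # list of base64-encoded images

# enumerate() gives every image its own index (output.index(x) repeats on duplicates)
for i, b64 in enumerate(output):
    with open(f"output-{i}.png", "wb") as f:
        f.write(base64.b64decode(b64))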
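The parameter rules described before the script (keep the quality tags, choose a sampler from the listed names, use a fresh random seed) can be wrapped in a small helper so that typos are caught before the request is sent. This is a sketch: `build_payload` is hypothetical, its defaults are copied from the example request, and the 0 to 2**32 - 1 seed range is an assumption about what the backend accepts.

```python
import random
from typing import Optional

SAMPLERS = {"plms", "ddim", "k_euler", "k_euler_ancestral", "k_heun",
            "k_dpm_2", "k_dpm_2_ancestral", "k_lms"}
QUALITY_TAGS = "masterpiece, best quality, "
DEFAULT_UC = ("lowres, bad anatomy, bad hands, text, error, missing fingers, "
              "extra digit, fewer digits, cropped, worst quality, low quality, "
              "normal quality, jpeg artifacts, signature, watermark, username, blurry")


def build_payload(prompt: str, sampler: str = "ddim", n_samples: int = 1,
                  seed: Optional[int] = None, **overrides) -> dict:
    """Assemble a /generate request body using the example request's defaults."""
    if sampler not in SAMPLERS:
        raise ValueError(f"unknown sampler {sampler!r}")
    if not prompt.startswith(QUALITY_TAGS):
        prompt = QUALITY_TAGS + prompt  # same effect as Add Quality Tags
    payload = {
        "prompt": prompt,
        "seed": random.randint(0, 2**32 - 1) if seed is None else seed,
        "n_samples": n_samples,
        "sampler": sampler,
        "width": 512, "height": 768, "scale": 11, "steps": 28,
        "uc": DEFAULT_UC,
    }
    payload.update(overrides)  # e.g. steps=50 or width=768
    return payload
```

The result can be passed straight to `requests.post(endpoint, json=build_payload("holding cat"))`.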

Example generated images: