Neural network linear activation function 1

========================

Artificial neural networks are a fascinating area of study. The perceptron is the main component of neural networks, and the activation function sits at each of its nodes. One of the key elements of artificial neural networks (ANNs) is the activation function, which converts the weighted sum of a neuron's inputs into its output. The activation function is sometimes loosely referred to as the sigmoid function, although the sigmoid is only one of several possible choices. Logistic regression, for example, uses a nonlinear function that squashes its input.

The activation function can be a linear function, which represents a straight line or plane, or a nonlinear function. Neurons are organized layer by layer, and computation proceeds in a feedforward manner. However, when confronted with data that cannot be classified linearly, a linear model appears to fail.

Logistic regression is similar to a nonlinear perceptron, that is, a neural network without hidden layers. A step activation function applies a linear threshold between bounds. Neural network models are used for supervised learning and are commonly trained with the feedforward backpropagation algorithm. Activation functions are the decision-making units of neural networks. To sum up, the logistic regression classifier has a nonlinear activation function, and the role of the activation function in a neural network is to produce a nonlinear decision boundary. As you can see, the function is a straight line, that is, linear. The input-weight products are summed, and the sum is passed through the node's so-called activation function.
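The description of a node (input-weight products are summed, then passed through an activation function) can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the function names, the example inputs, and the weights are hypothetical and do not come from any particular library or from the original text.

```python
import math

def weighted_sum(inputs, weights, bias=0.0):
    """Sum of the input-weight products plus a bias term."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias

def linear(z):
    """Linear (identity) activation: the output is the weighted sum itself."""
    return z

def sigmoid(z):
    """Logistic sigmoid: squashes any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation):
    """A single node: weighted sum first, activation function second."""
    return activation(weighted_sum(inputs, weights, bias))

# Hypothetical example values, chosen so the weighted sum is exactly 0.0
x = [1.0, 2.0]
w = [0.5, -0.25]
b = 0.0

linear_out = neuron(x, w, b, linear)    # 0.5*1.0 + (-0.25)*2.0 = 0.0
sigmoid_out = neuron(x, w, b, sigmoid)  # sigmoid(0.0) = 0.5
```

With the sigmoid as the activation and no hidden layer, this node has the same form as a logistic regression classifier; with the linear activation it is just a weighted sum.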

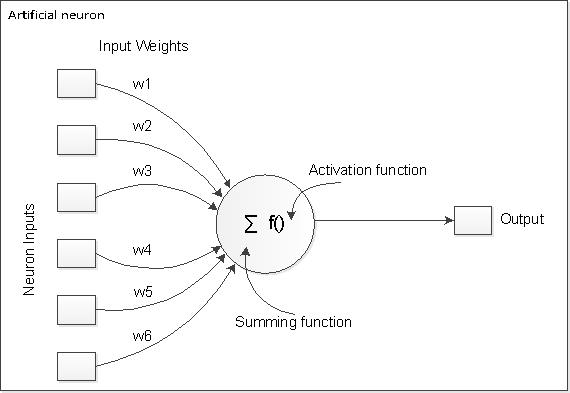

Activation functions are also used in convolutional neural networks (CNNs), and the hardware implementation of neural networks is a fascinating area of research with far-reaching applications. A common question is whether there are reference documents that give a comprehensive list of activation functions in neural networks, along with their pros and cons, and ideally some pointers to publications where they were used. Neural networks can also be used for regression.

Layered neural networks began to gain wide acceptance [2]. A schematic diagram of a neuron is given below. There are many activation functions used in machine learning, of which only a few are in common use. Here, the Heaviside step function is one of the most common activation functions in neural networks. A feedforward network represents a function of its current input; by contrast, a recurrent neural network feeds its outputs back into its own inputs. The leftmost layer, known as the input layer, consists of a set of neurons representing the input features.

Next, commonalities among different neural networks are discussed in order to get started and to show which structural parts and concepts appear in almost all networks. Training strives to change the connections of the neural network. Now that we have described neurons, we can define neural networks.
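As a rough illustration of a few commonly used activation functions, the sketch below defines the Heaviside step function named in the text alongside the sigmoid and the hyperbolic tangent. The selection, the dictionary name `ACTIVATIONS`, and the convention that the step function returns 1 at exactly zero are assumptions made for this example, not a definitive list.

```python
import math

def heaviside(z):
    """Heaviside step: 0 below the threshold, 1 at or above it.
    Returning 1 at z == 0 is a convention assumed here."""
    return 1.0 if z >= 0 else 0.0

def sigmoid(z):
    """Logistic sigmoid, output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Hyperbolic tangent, output in (-1, 1)."""
    return math.tanh(z)

# Hypothetical registry of activation functions for side-by-side comparison
ACTIVATIONS = {"step": heaviside, "sigmoid": sigmoid, "tanh": tanh}

samples = [-2.0, 0.0, 2.0]
table = {name: [f(z) for z in samples] for name, f in ACTIVATIONS.items()}

for name, values in table.items():
    print(name, [round(v, 4) for v in values])
```

The step function makes a hard decision, while the sigmoid and tanh are smooth and differentiable, which matters for gradient-based training.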

A neural network contains one or more hidden layers. It has nonlinear activation layers, which is what gives the network its nonlinear element. In some networks no activation function is applied at the output. An activation function is also called a transfer function. The linear Hopfield network (LHN), by contrast, is defined by its discrete-time dynamics.

To sum up, the logistic regression classifier has a nonlinear activation function, but the weight coefficients of this model enter as a linear combination, which is why logistic regression is a generalized linear model. Some analyses make strong use of the activation function's properties (Choromanska et al.). Recall that neural networks are composed of neurons that have weights and activation functions.

We discussed feedforward neural networks and activation functions. Convolutional neural networks (CNNs) are becoming mainstream in computer vision. The activation function for a neural network should be a nonlinear function, such as the hyperbolic tangent, and it must also be differentiable, because backpropagation relies on gradients to search for a minimum of the error (which is not guaranteed to be the global minimum). As seen above, a neuron works in two steps: it calculates the weighted sum of its inputs and then applies an activation function to normalize the sum.
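The claim that the nonlinear activation is what gives a network its nonlinear element can be checked numerically. The sketch below, using made-up weights and omitting biases for brevity, shows that two layers with a purely linear activation between them compute exactly the same mapping as one linear layer whose weight matrix is the product of the two. The helper names `matvec` and `matmul` are hypothetical.

```python
import math

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrices A and B, both given as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

# Hypothetical weights for a 2-2-1 network (biases omitted)
W1 = [[1.0, 2.0], [0.0, -1.0]]
W2 = [[0.5, 1.0]]
x = [3.0, 4.0]

# Two layers with a linear (identity) activation in between
two_layer = matvec(W2, matvec(W1, x))

# The same mapping collapsed into a single linear layer W2 * W1
single_layer = matvec(matmul(W2, W1), x)

# Inserting a nonlinear activation (tanh) breaks the equivalence
nonlinear = matvec(W2, [math.tanh(h) for h in matvec(W1, x)])

print(two_layer, single_layer, nonlinear)
```

Because the linear stack collapses, adding more linear layers cannot produce a nonlinear decision boundary; only the nonlinear activation changes the result.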

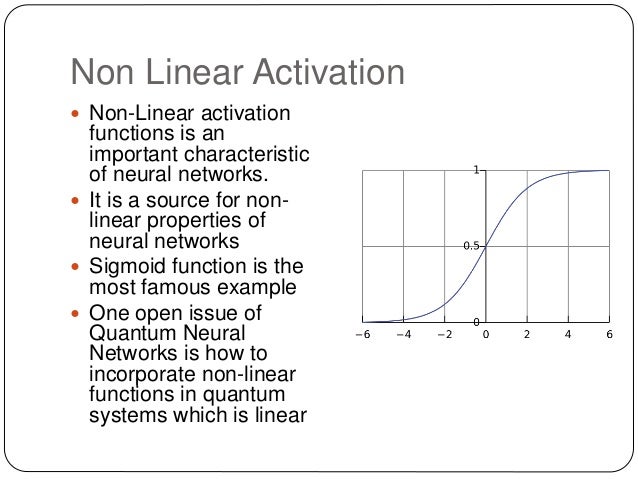

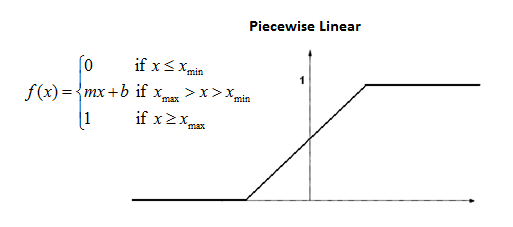

Neural network terminology is inspired by the biological operations of specialized cells called neurons. Common types of activation function are the threshold function, the piecewise linear function, and the sigmoidal function. Common network architectures include the single-layer feedforward network and the multilayer feedforward network. Training such a topology can be a tricky procedure.

The role of the activation function in a neural network is to produce a nonlinear decision boundary via nonlinear combinations of the weighted inputs. In recurrent neural networks, a linear activation can help the error persist for a longer time, addressing the vanishing ("fading") gradient problem, in which the error is scaled at each activation by the derivative of the squashing function. Other variants mentioned in the literature include Gaussian membership functions with linear rule consequents, and linear or POLYWOG wavelet activation functions.

The main function of an activation function is to introduce nonlinear properties into the network. Source: "Building convolutional neural networks with…", May 2016.
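The remark about the error being scaled at each activation by the derivative of the squashing function can be illustrated with a small calculation. This is a simplified sketch, not a full backpropagation-through-time implementation: it ignores the weights and only multiplies activation derivatives along a chain of steps, and the function names and the choice of ten steps are assumptions made for the example.

```python
import math

def sigmoid(z):
    """Logistic sigmoid, a typical squashing function."""
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_derivative(z):
    """Derivative of the sigmoid; it never exceeds 0.25 (reached at z == 0)."""
    s = sigmoid(z)
    return s * (1.0 - s)

def linear_derivative(z):
    """Derivative of the linear (identity) activation is always 1."""
    return 1.0

def backpropagated_scale(derivative, pre_activations):
    """Product of activation derivatives along a chain of steps (weights ignored)."""
    scale = 1.0
    for z in pre_activations:
        scale *= derivative(z)
    return scale

# Ten steps with pre-activation 0.0, the most favourable case for the sigmoid
steps = [0.0] * 10

sigmoid_scale = backpropagated_scale(sigmoid_derivative, steps)  # 0.25 ** 10
linear_scale = backpropagated_scale(linear_derivative, steps)    # stays at 1.0

print(sigmoid_scale, linear_scale)
```

Even in the sigmoid's best case the error shrinks by a factor of four per step, whereas the linear activation passes it through unchanged, which is the intuition behind letting the error persist for longer.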
