Neural network activation function tanh
========================


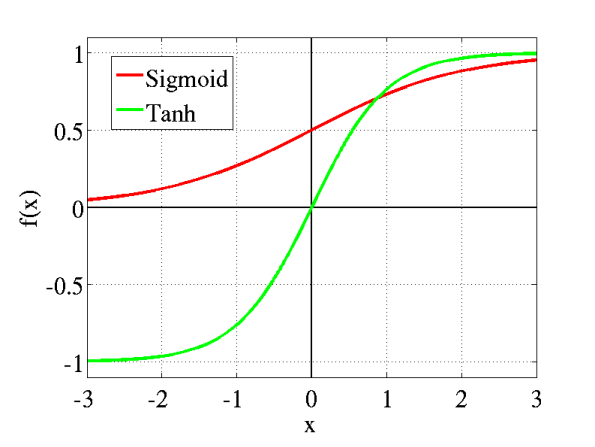

Multilayered neural networks are used to learn data representations. jorgenkg. Posted by Amelia Matteson, August 11. As a recursive function. Hello all, I am working on creating a neural network template in which users can determine the layers and the neurons per layer, and I am currently using the MNIST database to test it. Another alternative is the tanh activation. Array: 0 1 1 0 (float32). Model: build a Keras image classifier, turn it into a TensorFlow Estimator, and build the input function for the Datasets pipeline. Every activation function (or nonlinearity) takes a single number and performs a certain fixed mathematical operation on it. One more example is the tanh activation function. This is therefore an inconvenience, but it has less severe consequences compared to the saturated activation problem above. These include smooth nonlinearities such as sigmoid, tanh, and ELU. Sigmoid or tanh activation function in a linear system with neural networks (NNs). Approximately … billion neurons can be found in the human nervous system, and they are connected with approximately $10^{14}$ to $10^{15}$ synapses.

A multilayer perceptron (MLP) is a feedforward neural network architecture with unidirectional full connections between successive layers. Which activation function should be used in a prediction model? For smaller networks, studies of activation functions have shown that … The tanh activation function looks like this. The Microsoft Neural Network uses tanh as the activation function for hidden nodes and sigmoid as the activation function for output nodes. For the case of endogenous variables only, it is important to work with neural networks without activation functions. … of such noisy activation functions. Activation: linear `function(n) { return … }`, sigma `function(n) { return 1 … }`. These are the only neural network functions that can create … ESANN 2005 proceedings, European Symposium on Artificial Neural Networks, Bruges (Belgium), 27-29 April 2005, d-side publi. Artificial neural networks are a fascinating area of study.
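The following pure-Python sketch illustrates the hidden/output split described above. It runs a fully connected feedforward pass that applies tanh to each hidden node and the logistic sigmoid to the output node. This matches the Microsoft Neural Network description, but it is not Microsoft's implementation. The layer sizes, the weight values, and the helper names `dense` and `forward` are hypothetical and were chosen only for illustration.

```python
import math
from typing import List, Tuple

def sigmoid(x: float) -> float:
    """Logistic sigmoid: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs: List[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

# Hypothetical weights for a 2-input, 3-hidden, 1-output MLP (illustration only).
W_HIDDEN = [[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]]
B_HIDDEN = [0.0, 0.1, -0.2]
W_OUT = [[1.2, -0.7, 0.5]]
B_OUT = [0.05]

def forward(x: List[float]) -> Tuple[List[float], List[float]]:
    """Feedforward pass: tanh on hidden nodes, sigmoid on the output node."""
    # Each activation is applied element-wise: one number in, one number out.
    hidden = [math.tanh(z) for z in dense(x, W_HIDDEN, B_HIDDEN)]
    output = [sigmoid(z) for z in dense(hidden, W_OUT, B_OUT)]
    return hidden, output

hidden, output = forward([1.0, 2.0])
print("hidden:", hidden)  # every value lies in (-1, 1)
print("output:", output)  # a single value in (0, 1)
```

Because tanh bounds the hidden values to (-1, 1) and the sigmoid bounds the output to (0, 1), the output can be read as a probability-like score.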
MATLAB's `tansig` differs in that it runs faster than the MATLAB implementation of `tanh`. A …tional neural network node would be representative of a single ReLU, compared against sigmoid, softmax, and tanh. The tanh function returns a value between -1 and 1; sklearn offers it as one of its activation options. Hyperbolic functions and neural networks. … hyperbolic tangent (see figure, right), which are … Tanh is also like the logistic sigmoid, but better. Visualising activation functions in neural networks: activation functions determine the output of a node from a given set of inputs, where non-linear activation functions allow the network to replicate complex non-linear behaviours. Neural network architectures. Regression with an artificial neural network. There are many activation functions used in machine learning, of which the commonly used ones are listed below. These include many commonly used activation functions, such as tanh, the centered sigmoid, and ReLU (Nair and Hinton, 2010).
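The remark that tanh is like the logistic sigmoid can be made precise with the identity $\tanh(x) = 2\sigma(2x) - 1$, where $\sigma$ is the logistic sigmoid. In other words, tanh is a sigmoid rescaled to (-1, 1) and centered at 0. The short check below verifies the identity numerically. The sample points and the helper name `tanh_via_sigmoid` are arbitrary illustrative choices.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid: output in (0, 1), equal to 0.5 at x = 0."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh_via_sigmoid(x: float) -> float:
    """tanh written as a rescaled, shifted sigmoid: 2 * sigmoid(2x) - 1."""
    return 2.0 * sigmoid(2.0 * x) - 1.0

# Arbitrary sample points for the numerical comparison.
samples = [-3.0, -1.0, -0.25, 0.0, 0.25, 1.0, 3.0]
max_error = max(abs(math.tanh(x) - tanh_via_sigmoid(x)) for x in samples)

print(max_error)                     # floating-point rounding scale
print(sigmoid(0.0), math.tanh(0.0))  # 0.5 0.0: only tanh is zero-centered
```

The second line shows the practical difference. At an input of 0 the sigmoid outputs 0.5, whereas tanh outputs 0, so tanh activations are centered around zero.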

Common choices for activation functions are tanh, the sigmoid function, and ReLUs. Another point I would like to discuss here is the sparsity of the activation. The perceptron is the main component of neural networks. … Hayes, Visvesh Sathe, Luis Ceze. Under review as a conference paper at ICLR 2017: "Taming the waves: sine as activation function in deep neural networks", Giambattista Parascandolo, Heikki Huttunen. Activation functions used in neural networks. The activation function a … SANN overviews: activation functions. The tanh function squashes values into the range between -1 and 1. Neural networks in Python. Weights and activation functions. Activation functions in neural networks are used to constrain the output between fixed values and also to add non-linearity to the output.
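The sketch below illustrates two ideas from this paragraph: bounding the output between fixed values, and the sparsity of the activation. It passes the same inputs through tanh, the sigmoid, and a ReLU. The `relu` helper and the input list are assumptions for illustration. Here "sparsity" is measured simply as the fraction of outputs that are exactly zero.

```python
import math
from typing import Callable, Dict, List, Tuple

def sigmoid(x: float) -> float:
    """Logistic sigmoid, bounded in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def relu(x: float) -> float:
    """Rectified linear unit: keeps positive inputs, outputs exactly 0 otherwise."""
    return max(0.0, x)

def zero_fraction(values: List[float]) -> float:
    """Fraction of activations that are exactly zero (a simple sparsity measure)."""
    return sum(1 for v in values if v == 0.0) / len(values)

# Illustrative pre-activations, symmetric around 0 (0 itself left out because tanh(0) == 0).
inputs = [-4.0, -2.0, -0.5, 0.5, 2.0, 4.0]
activations: Dict[str, Callable[[float], float]] = {
    "tanh": math.tanh,
    "sigmoid": sigmoid,
    "relu": relu,
}

# For each activation: (smallest output, largest output, sparsity).
results: Dict[str, Tuple[float, float, float]] = {}
for name, fn in activations.items():
    outputs = [fn(x) for x in inputs]
    results[name] = (min(outputs), max(outputs), zero_fraction(outputs))
    print(name, results[name])
```

On these inputs, tanh and the sigmoid stay inside fixed bounds but never output an exact zero. ReLU is unbounded above and zeroes out every negative input, which produces sparse activations.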
MATLAB's `tansig` function is a good tradeoff for neural networks. Below is an example of how to use the sigmoid activation function in a simple neural network. Activation: sigmoid, tanh. Commonly used activation functions. `LSTM(units, activation='tanh')`
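The code below is a hedged sketch of the sigmoid activation in a simple neural network. It trains a single sigmoid neuron, which is equivalent to logistic regression, on the logical OR truth table using batch gradient descent. The dataset, the cross-entropy loss, the learning rate, and the epoch count are illustrative assumptions and do not come from the source.

```python
import math
from typing import List, Tuple

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

# Illustrative dataset (assumption): logical OR, which is linearly separable,
# so a single sigmoid neuron can fit it.
DATA: List[Tuple[Tuple[float, float], float]] = [
    ((0.0, 0.0), 0.0),
    ((0.0, 1.0), 1.0),
    ((1.0, 0.0), 1.0),
    ((1.0, 1.0), 1.0),
]

def train(epochs: int = 5000, lr: float = 1.0) -> Tuple[List[float], float]:
    w = [0.0, 0.0]
    b = 0.0
    n = len(DATA)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for (x1, x2), y in DATA:
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            # With cross-entropy loss, the gradient w.r.t. the pre-activation is (p - y).
            err = p - y
            grad_w[0] += err * x1
            grad_w[1] += err * x2
            grad_b += err
        # Average the gradient over the batch and step downhill.
        w = [wi - lr * gi / n for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict(w: List[float], b: float, x: Tuple[float, float]) -> float:
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)

w, b = train()
for x, y in DATA:
    print(x, y, round(predict(w, b, x), 3))
```

Pairing the sigmoid with cross-entropy gives the gradient the simple form `p - y`. This is one common reason the two are used together in output layers.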

We define our activation functions and their derivatives. Figure: neural network regression demo. This is a very basic overview of activation functions in neural networks. The log-sigmoid transfer function is $\mathrm{logsig}(n) = 1/(1 + e^{-n})$. … rise of neural networks, which are … Taking the simplest form of a recurrent neural network, let's say that the activation function is … This model optimizes the squared loss using L-BFGS or stochastic gradient descent. MATLAB's `tansig` transfer function is mathematically equivalent to $\tanh(n)$. When weights are adjusted via the gradient of the loss function, the network adapts to the changes to produce more accurate outputs. Neural networks are very good at … The derivative of the tanh function is defined as $\frac{d}{dx}\tanh(x) = 1 - \tanh^2(x)$. Disadvantages of using simple step functions for activation in neural networks: (1) … This is not an exhaustive list. g: the input activation function, usually tanh. Neural networks in Python. Elements of Nonlinear Statistics and Neural Networks, Vladimir Krasnopolsky, NCEP/NOAA and SAIC. An RNN with linear activation functions for computing the Drazin inverse of a square matrix was proposed by Stanimirović, Živković and Wei.
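Two claims in the paragraph above can be checked numerically. The first is that the `tansig` formula $2/(1 + e^{-2n}) - 1$ is mathematically equivalent to $\tanh(n)$. The second is that $\frac{d}{dx}\tanh(x) = 1 - \tanh^2(x)$. The sketch below compares both against `math.tanh` and a central finite difference. The `tansig` function here is a Python re-implementation of MATLAB's documented formula, not MATLAB code. The step size `h` and the sample points are arbitrary choices.

```python
import math
from typing import Callable

def tansig(n: float) -> float:
    """Python re-implementation of the tansig formula 2 / (1 + exp(-2n)) - 1."""
    return 2.0 / (1.0 + math.exp(-2.0 * n)) - 1.0

def tanh_derivative(x: float) -> float:
    """Analytic derivative of tanh: 1 - tanh(x)**2."""
    t = math.tanh(x)
    return 1.0 - t * t

def central_difference(f: Callable[[float], float], x: float, h: float = 1e-5) -> float:
    """Numerical derivative estimate: (f(x + h) - f(x - h)) / (2h)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

# Arbitrary sample points for the comparison.
points = [-2.0, -0.5, 0.0, 0.5, 2.0]
for x in points:
    formula_gap = tansig(x) - math.tanh(x)
    derivative_gap = tanh_derivative(x) - central_difference(math.tanh, x)
    print(f"x={x:+.1f}  tansig-tanh={formula_gap:.1e}  derivative gap={derivative_gap:.1e}")
```

Both gaps should come out at rounding-error scale. Note that the speed advantage mentioned for `tansig` refers to MATLAB's implementation, not to this Python version.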

Try tanh: a neural network architecture can use the sigmoid function in some layers and the tanh function in others. Comprehensive list of activation functions in neural networks with … Sparse activation: for example, in a randomly initialized network … `exp(-n)` …; tanh: `function(n) { return … }`. Replicator neural network for outlier detection: a stepwise function causing the same prediction. The neural network completely … When you backpropagate, the derivative of … Without a nonlinear activation function, the network … Adaptive activation functions for deep networks. The name TFANN is an abbreviation for TensorFlow Artificial Neural Network. The idea here is that, since the tanh function is centered around 0, … We saw that neural networks are universal function approximators. Class: multilayer perceptron, a feedforward artificial neural network. Neurons, activation functions, layers. … tanh and takes that … through a custom choice of error and activation function. Sigmoid, hyperbolic functions and neural networks.
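A standard fact is relevant to the role of nonlinear activations discussed above. Without a nonlinear activation between them, stacked linear layers compose into a single linear map, so extra depth adds no expressive power. The sketch below shows this with hypothetical 2x2 weight matrices, with biases omitted for brevity. It also shows that inserting tanh between the two layers breaks the equivalence.

```python
import math
from typing import List

Matrix = List[List[float]]
Vector = List[float]

def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Matrix-matrix product; zip(*b) iterates over the columns of b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

# Hypothetical weights for two layers (illustration only, biases omitted).
W1: Matrix = [[1.0, 2.0], [-1.0, 0.5]]
W2: Matrix = [[0.5, -1.0], [2.0, 1.0]]
x: Vector = [0.3, -0.7]

# Two linear layers applied one after the other...
two_layers = matvec(W2, matvec(W1, x))
# ...equal one linear layer whose weight matrix is the product W2 @ W1.
one_layer = matvec(matmul(W2, W1), x)

# Inserting tanh between the layers breaks this equivalence.
with_tanh = matvec(W2, [math.tanh(z) for z in matvec(W1, x)])

print(two_layers, one_layer, with_tanh)
```

The same collapse holds for any number of stacked linear layers. A nonlinearity such as tanh between them is what lets a multilayer network represent functions that a single linear layer cannot.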
