LlamaIndex Nodes: A Complete Guide

Imagine effortlessly querying a vast collection of documents, ranging from PDFs and spreadsheets to your personal notes, all without writing complex code. This isn’t science fiction; it’s the power of LlamaIndex.

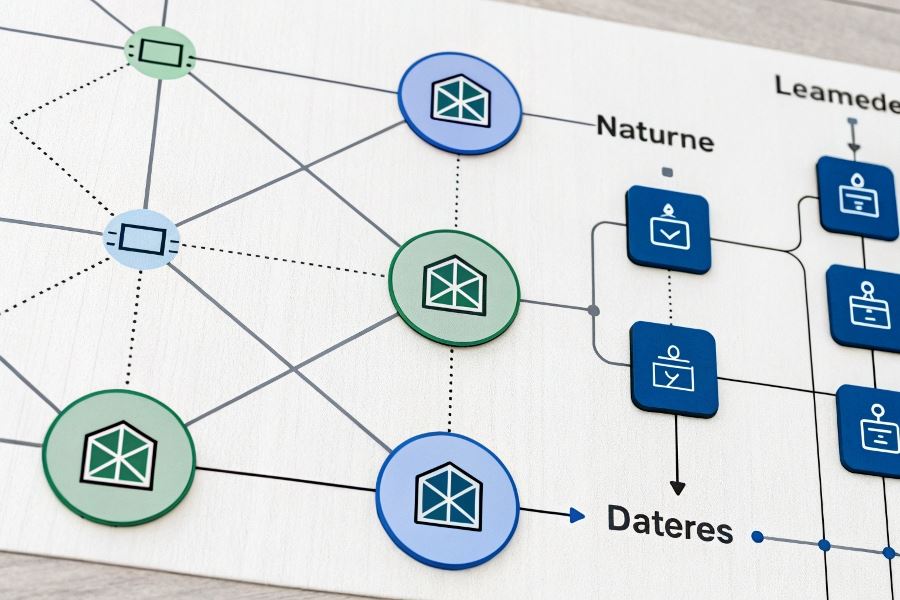

Harnessing large language models (LLMs) for knowledge retrieval requires efficient data management. This is where the building blocks of LlamaIndex, its nodes, come into play. A node is a chunk of a source document, stored together with metadata and links to related chunks. Data connectors (readers) load your sources into documents, documents are split into nodes, and indexes organize those nodes so that the relevant ones can be retrieved and passed to the LLM at query time.

Exploring LlamaIndex Node Types

Strictly speaking, a node in LlamaIndex is a chunk of a source document, most commonly a TextNode that holds the chunk’s text, its metadata, and its relationships to neighboring chunks. Two components that are often mistaken for node types work alongside nodes. SimpleDirectoryReader is a data loader: it reads the files in a local directory into Document objects, which are then split into nodes. VectorStoreIndex is an index built over nodes: it embeds each node’s text as a vector, which enables semantic search that matches on meaning rather than exact keywords.
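To make the idea of a node concrete, the sketch below models one as a chunk of text plus metadata and a pointer back to its source document. It uses only the Python standard library. The names SketchNode and make_nodes, and the file name report.pdf, are hypothetical and chosen for illustration; they are not LlamaIndex classes, and the library's own node type carries more fields than this.

```python
from dataclasses import dataclass, field
import hashlib


@dataclass
class SketchNode:
    """Hypothetical stand-in for a node: one chunk of a source document."""
    text: str
    source_id: str                      # which document the chunk came from
    position: int                       # order of the chunk within that document
    metadata: dict = field(default_factory=dict)

    @property
    def node_id(self) -> str:
        # Deterministic ID derived from the source and the chunk position.
        raw = f"{self.source_id}:{self.position}".encode("utf-8")
        return hashlib.sha1(raw).hexdigest()[:12]


def make_nodes(source_id: str, paragraphs: list[str], **metadata) -> list[SketchNode]:
    """Turn each non-empty paragraph of a document into one node."""
    kept = [p.strip() for p in paragraphs if p.strip()]
    return [
        SketchNode(text=p, source_id=source_id, position=i, metadata=dict(metadata))
        for i, p in enumerate(kept)
    ]


# "report.pdf" is a made-up source name; the empty paragraph is skipped.
nodes = make_nodes("report.pdf", ["Market overview.", "", "Key findings."], author="analyst")
```

Keeping the source and position on every chunk is what lets a retrieval system cite where an answer came from and fetch neighboring chunks when more context is needed.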

The Advantages of LlamaIndex Nodes

Working with nodes offers several advantages. First, it simplifies data integration: once a source has been loaded and split into nodes, every source is handled the same way downstream. Second, it improves query efficiency: because the data is pre-processed and indexed ahead of time, a query only needs to retrieve the few most relevant nodes instead of scanning every document. Finally, it helps with scalability: nodes can be stored in an external vector store and added incrementally, although query speed at large scale still depends on the index type and storage backend you choose. This makes LlamaIndex a useful tool for anyone working with substantial amounts of unstructured information.

Unlock LlamaIndex Power

Using large language models (LLMs) for complex information retrieval often hinges on effective data structuring. Querying a vast collection of PDFs, websites, or databases is a daunting task without the right tools. What makes it tractable is organizing the data into small, searchable units, the nodes, and building index structures over them so that relevant information from diverse sources can be retrieved efficiently.

Building Nodes From Diverse Sources

Creating these structures from various data sources is straightforward. Let’s start with a common scenario: you have a collection of PDF documents containing valuable insights for your business. LlamaIndex can ingest these PDFs and split them into nodes, where each node holds one chunk of text, such as a passage or section of a larger document, rather than a whole file. The process involves a few steps. First, install the library with pip install llama-index. Then load your data with the appropriate data connector; for a folder of PDFs, SimpleDirectoryReader is a good starting point. Finally, build an index from the loaded documents, which splits them into nodes along the way. Here’s a snippet showing how to create an index from a directory of PDFs:

from llama_index.core import SimpleDirectoryReader, VectorStoreIndex

documents = SimpleDirectoryReader('./my_pdfs').load_data()

index = VectorStoreIndex.from_documents(documents)

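This code reads the files in the ./my_pdfs directory, splits their text into nodes, and builds a vector index over them for semantic search. By default, LlamaIndex uses OpenAI models for embeddings and answers, so an OPENAI_API_KEY must be set unless you configure other models. You can adapt this approach to other data sources such as CSV files, web pages (for example, scraped with BeautifulSoup), or databases by using the relevant LlamaIndex readers.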

Mastering Query Techniques

Once your nodes are created, the real power of LlamaIndex comes into play – querying. Instead of sifting through countless documents manually, you can ask natural language questions and receive precise answers. For example, if you have a question about a specific topic covered in your PDFs, you can query the index directly:

query_engine = index.as_query_engine()

response = query_engine.query("What are the key findings on market trends?")

print(response)

This snippet demonstrates a simple query. LlamaIndex also offers more advanced options, such as retrieving more nodes per query (the similarity_top_k argument of as_query_engine), changing how the answer is synthesized from the retrieved nodes (the response_mode argument), or using a different query engine altogether. Experimentation is key to finding the best approach for your needs: a question is answered well only if the relevant text ended up in nodes that the retriever can find.
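Under the hood, a vector-based query ranks the stored chunks by their similarity to the question and passes the best matches to the LLM. The sketch below illustrates only that ranking step, using the Python standard library. It is not how LlamaIndex is implemented: toy_embed is a hypothetical word-count stand-in for a learned embedding model, and the sample chunks are invented.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. Real systems use a learned model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(query: str, chunks: list[str], k: int = 2) -> list[tuple[float, str]]:
    """Score every chunk against the query and keep the k best."""
    q = toy_embed(query)
    scored = [(cosine(q, toy_embed(c)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


chunks = [
    "Market trends show rising demand for electric vehicles.",
    "The office cafeteria menu changes every week.",
    "Key findings: market growth slowed in the third quarter.",
]
results = top_k("key findings on market trends", chunks, k=2)
for score, chunk in results:
    print(f"{score:.3f}  {chunk}")
```

The parameter k plays the same role as the number of nodes retrieved per query: a larger value gives the LLM more context to work with, at the cost of a longer prompt.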

Optimizing Node Performance

Optimizing node performance is crucial for handling large datasets and keeping query times low. One key decision is the index structure. LlamaIndex offers several index types, each with strengths and weaknesses. Vector indexes, like the one used in the previous example, are excellent for semantic search, but embedding and storing every node becomes resource-intensive for very large datasets. Alternatives include keyword-based indexes (KeywordTableIndex) and tree-based indexes (TreeIndex), which trade off speed and accuracy differently. The second decision is chunking: splitting documents into smaller nodes keeps each retrieved passage focused and within the LLM’s context limit, which matters most for lengthy texts. Review and refine your node structure as your data evolves.
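Chunking is simple to illustrate in isolation. The sketch below splits text into fixed-size word windows that overlap slightly, so a sentence cut at a boundary still appears whole in one of the two neighboring chunks. The function name chunk_words and its defaults are assumptions made for this example; LlamaIndex's own splitters work on tokens and sentences and are not reproduced here.

```python
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of at most `chunk_size` words.

    Consecutive chunks share `overlap` words.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(12))   # synthetic 12-word text
chunks = chunk_words(text, chunk_size=5, overlap=2)
for chunk in chunks:
    print(chunk)
```

A larger overlap reduces the chance of splitting an idea across chunks but stores more duplicate text, so it is worth tuning together with the chunk size.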

Unleashing LlamaIndex Power

Imagine accessing and processing vast datasets for your LLM applications seamlessly and efficiently. But what happens when your data grows rapidly, or query response times become unacceptable? That’s where more advanced techniques become crucial. The following sections explore strategies for optimizing an existing setup and for scaling your infrastructure to handle ever-growing datasets.

The core of this performance work lies in managing LlamaIndex nodes effectively. Nodes are the units that get embedded, indexed, retrieved, and handed to the LLM, so their size, metadata, and storage directly affect the speed and quality of your application. Think of it as building a high-performance highway system for your data, ensuring smooth and rapid access for your LLM.

Speed and Efficiency Optimization

Optimizing for speed involves several strategies. First, consider the type of data you’re working with. Structured data, such as databases, often benefits from specialized connectors and optimized query strategies. For unstructured data, such as PDFs or text files, chunking and vector databases can significantly improve retrieval times. Experiment with different chunk sizes and indexing methods to find the right balance between speed and accuracy. For example, a smaller chunk size may improve search precision, but it produces more nodes to embed, store, and score, which increases processing time. Careful benchmarking and iterative refinement are key.
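A benchmark does not need to be elaborate to be useful. The sketch below, written with the Python standard library only, sweeps a few chunk sizes over a synthetic corpus and records how many chunks result and how long a brute-force scan takes. The helpers median_latency, split_fixed, and scan are hypothetical names, and the scan is only a stand-in for real retrieval; the absolute timings depend on your machine.

```python
import statistics
import time


def median_latency(fn, *args, repeats: int = 5) -> float:
    """Median wall-clock seconds of fn(*args) over `repeats` runs."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def split_fixed(words: list[str], size: int) -> list[list[str]]:
    """Non-overlapping chunks of `size` words each."""
    return [words[i:i + size] for i in range(0, len(words), size)]


def scan(chunks: list[list[str]], term: str) -> int:
    """Brute-force stand-in for retrieval: count the chunks containing the term."""
    return sum(1 for chunk in chunks if term in chunk)


words = [f"w{i % 100}" for i in range(10_000)]   # synthetic corpus of 10,000 words
report = {}
for size in (64, 256, 1024):
    chunks = split_fixed(words, size)
    report[size] = {
        "num_chunks": len(chunks),
        "hits": scan(chunks, "w7"),
        "median_seconds": median_latency(scan, chunks, "w7"),
    }

for size, row in report.items():
    print(size, row)
```

Taking the median over several runs makes the numbers less sensitive to one-off slowdowns. For a real system, replace the scan with your actual query path and measure answer quality alongside latency.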

Scaling for Massive Datasets

Handling truly massive datasets requires a different approach. Simply adding more processing power isn’t always the answer; a well-designed architecture is essential. Consider distributing your data across multiple machines (server nodes, not to be confused with LlamaIndex nodes) to create a distributed index. Each machine can then search its own portion in parallel, which reduces query latency. Sharding and data partitioning determine which machine holds which portion of the data. Remember to choose a robust storage solution capable of handling the volume and velocity of your data. Cloud-based solutions, such as Amazon S3, often provide the scalability and reliability needed for large-scale deployments.
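The sharding idea can be sketched in a few lines. In the example below, each document is assigned to a shard by hashing its ID, every shard returns its own best matches, and the partial results are merged into a global top-k (a scatter-gather pattern). This is a single-process illustration under stated assumptions: the function names, the word-overlap score, and the sample documents are all hypothetical, and in a real deployment each shard would live on a separate machine and be queried in parallel.

```python
import hashlib
import heapq


def shard_for(doc_id: str, num_shards: int) -> int:
    """Stable hash partitioning: the same doc_id always maps to the same shard."""
    digest = hashlib.md5(doc_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % num_shards


def build_shards(docs: dict[str, str], num_shards: int) -> list[dict[str, str]]:
    """Distribute documents across shards by hashing their IDs."""
    shards = [{} for _ in range(num_shards)]
    for doc_id, text in docs.items():
        shards[shard_for(doc_id, num_shards)][doc_id] = text
    return shards


def score(query: str, text: str) -> int:
    """Toy relevance score: number of distinct query words present in the text."""
    text_words = set(text.lower().split())
    return sum(1 for w in set(query.lower().split()) if w in text_words)


def search_shard(shard: dict[str, str], query: str, k: int) -> list[tuple[int, str]]:
    """Each shard returns only its local top-k as (score, doc_id) pairs."""
    scored = ((score(query, text), doc_id) for doc_id, text in shard.items())
    return heapq.nlargest(k, scored)


def search_all(shards: list[dict[str, str]], query: str, k: int = 2) -> list[tuple[int, str]]:
    """Scatter the query to every shard, then merge the partial results."""
    partial = [hit for shard in shards for hit in search_shard(shard, query, k)]
    return heapq.nlargest(k, partial)


docs = {
    "a": "market trends for electric vehicles",
    "b": "cafeteria menu",
    "c": "quarterly market report",
    "d": "market trends and key findings",
}
shards = build_shards(docs, num_shards=3)
top = search_all(shards, "market trends findings", k=2)
print(top)
```

Because every shard reports its local top-k, the merged result equals what a single unsharded search would return, while each shard only has to scan its own portion of the data.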

Advanced LlamaIndex Features

LlamaIndex offers a wealth of advanced features beyond basic indexing. Query rewriting and metadata filtering can refine your search results and improve the accuracy of your LLM’s responses. Different embedding models capture the meaning of your data differently, so it is worth comparing them on your own documents. The same goes for retrieval methods, such as keyword search versus semantic search. The flexibility of LlamaIndex lets you tailor your system to the characteristics of your data and application, so don’t be afraid to experiment.
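For contrast with semantic search, the sketch below shows keyword retrieval combined with a metadata filter, using only the Python standard library. It builds an inverted index from terms to node positions and returns the nodes that contain every query term and match the filter. The node layout (a dict with text and metadata keys), the function names, and the sample data are assumptions for illustration, not the LlamaIndex API.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> set[str]:
    """Lowercase alphanumeric terms of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_inverted_index(nodes: list[dict]) -> dict[str, set[int]]:
    """Map each term to the positions of the nodes that contain it."""
    index = defaultdict(set)
    for i, node in enumerate(nodes):
        for term in tokenize(node["text"]):
            index[term].add(i)
    return index


def keyword_search(index, nodes, query, filters=None):
    """Return nodes containing every query term and matching all metadata filters."""
    terms = tokenize(query)
    if not terms:
        return []
    # A node qualifies only if it appears in the posting set of every term.
    candidate_ids = set.intersection(*(index.get(t, set()) for t in terms))
    filters = filters or {}
    return [
        nodes[i]
        for i in sorted(candidate_ids)
        if all(nodes[i]["metadata"].get(k) == v for k, v in filters.items())
    ]


nodes = [
    {"text": "Market trends in 2023", "metadata": {"year": 2023}},
    {"text": "Market trends in 2024", "metadata": {"year": 2024}},
    {"text": "Hiring plan for 2024", "metadata": {"year": 2024}},
]
index = build_inverted_index(nodes)
hits = keyword_search(index, nodes, "market trends", filters={"year": 2024})
print(hits)
```

Keyword lookup is exact and cheap but misses paraphrases, which is where embedding-based search helps; a metadata filter narrows the candidates in either case.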
