One of the core benefits of Java is the automated memory management with the help of the built-in Garbage Collector (or GC for short). The GC implicitly takes care of allocating and freeing up memory and thus is capable of handling the majority of the memory leak issues.

While the GC effectively handles a good portion of memory, it doesn't guarantee a foolproof solution to memory leaking. The GC is pretty smart, but not flawless. Memory leaks can still sneak up even in applications of a conscientious developer.

There still might be situations where the application generates a substantial number of superfluous objects, thus depleting crucial memory resources, sometimes resulting in the whole application's failure.

Memory leaks are a genuine problem in Java. In this tutorial, we'll see what the potential causes of memory leaks are, how to recognize them at runtime, and how to deal with them in our application.

A memory leak is a situation where objects that are no longer used remain in the heap, but the garbage collector is unable to remove them from memory, so they are kept around unnecessarily.

A memory leak is bad because it blocks memory resources and degrades system performance over time. If it isn't dealt with, the application will eventually exhaust its resources and terminate with a fatal java.lang.OutOfMemoryError.

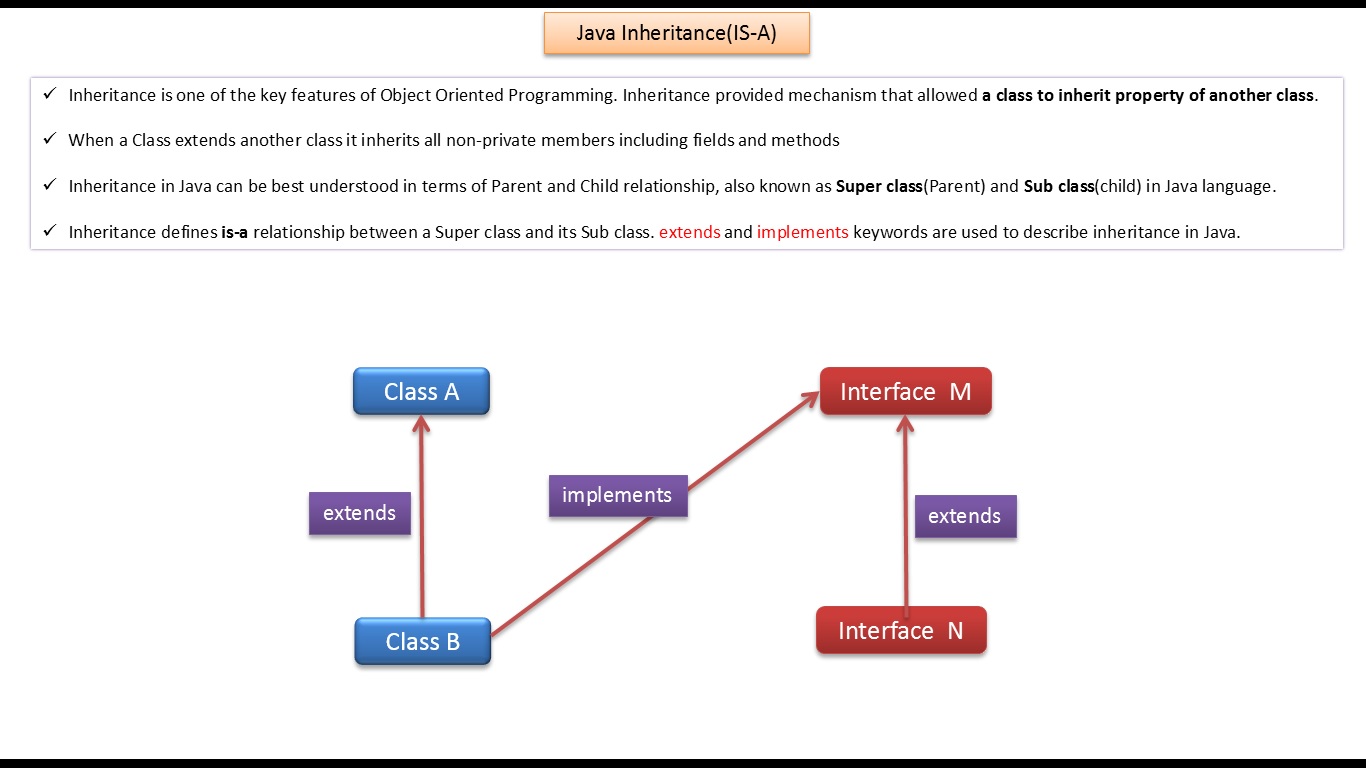

There are two different types of objects that reside in heap memory: referenced and unreferenced. Referenced objects are those that still have active references within the application, whereas unreferenced objects don't have any active references.

The garbage collector removes unreferenced objects periodically, but it never collects the objects that are still being referenced. This is where memory leaks can occur:

Let's have a closer look at some of these scenarios and how to deal with them.

In any application, memory leaks can occur for numerous reasons. In this section, we'll discuss the most common ones.

The first scenario that can cause a potential memory leak is heavy use of static variables.

In Java, static fields usually live for the entire lifetime of the running application (unless the ClassLoader that loaded the class becomes eligible for garbage collection).

Let's create a simple Java program that populates a static List:
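The listing itself is not reproduced here. A minimal sketch of such a program might look like the following. The class name StaticListDemo, the element count and the console messages that stand in for the three debug points are illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative reconstruction; the original listing is not available.
class StaticListDemo {
    // A static field lives as long as its class stays loaded (normally the
    // whole run), so every element added here stays reachable.
    static final List<Double> list = new ArrayList<>();

    void populateList(int count) {
        for (int i = 0; i < count; i++) {
            list.add(Math.random());
        }
        System.out.println("Debug point 2");
    }

    public static void main(String[] args) {
        System.out.println("Debug point 1");
        new StaticListDemo().populateList(1_000_000);
        // populateList() has returned, but the static list still references
        // every element, so the GC cannot reclaim them.
        System.out.println("Debug point 3, elements retained: " + list.size());
    }
}
```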

Now if we analyze the Heap memory during this program execution, then we'll see that between debug points 1 and 2, as expected, the heap memory increased.

But when we leave the populateList() method at the debug point 3, the heap memory isn't yet garbage collected as we can see in this VisualVM response:

However, if we simply drop the static keyword from the list declaration in the above program, the memory usage changes drastically, as this VisualVM response shows:

The first part, up to the debug point, is almost the same as in the static case. But this time, after we leave the populateList() method, all the memory of the list is garbage collected because nothing references it anymore.

Hence, we need to pay very close attention to our usage of static variables. If collections or large objects are declared as static, they remain in memory throughout the lifetime of the application, blocking vital memory that could otherwise be used elsewhere.

Whenever we make a new connection or open a stream, the JVM allocates memory for these resources. A few examples include database connections, input streams, and session objects.

Forgetting to close these resources can block memory and keep it out of the GC's reach. This can happen even when the code does contain a close() call, if an exception prevents execution from reaching it.

In either case, the connections left open by these resources consume memory. If we don't deal with them, they can degrade performance and may even result in an OutOfMemoryError.
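Below is a minimal sketch of the exception case. It uses a hypothetical TrackedResource class that stands in for a connection or stream and only counts how many instances are open. The leaky() variant never reaches close() when the body throws. The safe() variant uses try-with-resources (Java 7 and later), which closes the resource on both the normal and the exceptional path.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for a connection or stream; it only tracks
// how many instances are currently open.
class TrackedResource implements AutoCloseable {
    static final AtomicInteger OPEN = new AtomicInteger();

    TrackedResource() {
        OPEN.incrementAndGet();
    }

    void use(boolean fail) {
        if (fail) {
            throw new IllegalStateException("simulated failure");
        }
    }

    @Override
    public void close() {
        OPEN.decrementAndGet();
    }
}

class ResourceDemo {
    // Leaky: if use() throws, the close() call is never reached.
    static void leaky(boolean fail) {
        TrackedResource r = new TrackedResource();
        r.use(fail);
        r.close();
    }

    // Safe: try-with-resources calls close() whether or not use() throws.
    static void safe(boolean fail) {
        try (TrackedResource r = new TrackedResource()) {
            r.use(fail);
        }
    }

    public static void main(String[] args) {
        try { leaky(true); } catch (IllegalStateException ignored) { }
        try { safe(true); } catch (IllegalStateException ignored) { }
        System.out.println("still open: " + TrackedResource.OPEN.get()); // still open: 1
    }
}
```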

When defining new classes, a very common oversight is not writing proper overrides of the equals() and hashCode() methods.

HashSet and HashMap use these methods in many operations, and if they're not overridden correctly, then they can become a source for potential memory leak problems.

Let's take an example of a trivial Person class and use it as a key in a HashMap:

Now we'll insert duplicate Person objects into a Map that uses this key.

Remember that a Map cannot contain duplicate keys:
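Here is a sketch of this scenario. It assumes a Person class with a single name field and no equals() or hashCode() overrides; the DuplicateKeyDemo helper is illustrative. Because Object's identity-based methods are used, every new Person("jon") is a distinct key:

```java
import java.util.HashMap;
import java.util.Map;

// Person without equals()/hashCode(): Object's identity-based versions apply,
// so two instances with the same name are different keys.
class Person {
    final String name;

    Person(String name) {
        this.name = name;
    }
}

class DuplicateKeyDemo {
    static Map<Person, Integer> fill(int times) {
        Map<Person, Integer> map = new HashMap<>();
        for (int i = 0; i < times; i++) {
            // Each "jon" is a brand-new key, so the map keeps growing.
            map.put(new Person("jon"), 1);
        }
        return map;
    }

    public static void main(String[] args) {
        System.out.println(fill(100).size()); // prints 100, not 1
    }
}
```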

Here we're using Person as a key. Since Map doesn't allow duplicate keys, the numerous duplicate Person objects that we've inserted as a key shouldn't increase the memory.

But since we haven't defined proper equals() and hashCode() methods, the duplicate objects pile up and increase memory usage. That's why we see more than one object in memory. The heap memory in VisualVM for this looks like:

However, if we had overridden the equals() and hashCode() methods properly, there would be only one Person object in this Map.

Let's take a look at proper implementations of equals() and hashCode() for our Person class:

And in this case, the following assertions would be true:
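Here is a sketch of the corrected class. It assumes equality is defined by the name field alone. Objects.equals and Objects.hashCode keep equals() and hashCode() consistent and null-safe. The DistinctKeyDemo helper is illustrative.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

final class Person {
    final String name;

    Person(String name) {
        this.name = name;
    }

    @Override
    public boolean equals(Object o) {
        if (this == o) {
            return true;
        }
        if (!(o instanceof Person)) {
            return false;
        }
        Person other = (Person) o;
        return Objects.equals(name, other.name);
    }

    @Override
    public int hashCode() {
        // Consistent with equals(): equal names always produce equal hashes.
        return Objects.hashCode(name);
    }
}

class DistinctKeyDemo {
    static Map<Person, Integer> fill(int times) {
        Map<Person, Integer> map = new HashMap<>();
        for (int i = 0; i < times; i++) {
            map.put(new Person("jon"), 1); // replaces the value, adds no new key
        }
        return map;
    }

    public static void main(String[] args) {
        System.out.println(fill(100).size()); // prints 1
    }
}
```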

After properly overriding equals() and hashCode(), the heap memory for the same program looks like:

Another example is an ORM tool like Hibernate, which uses the equals() and hashCode() methods to compare objects and store them in its cache.

The chances of memory leak are quite high if these methods are not overridden because Hibernate then wouldn't be able to compare objects and would fill its cache with duplicate objects.

Another source of leaks is non-static inner classes, including anonymous classes. To be instantiated, these inner classes always require an instance of the enclosing class.

Every non-static inner class has, by default, an implicit reference to its containing class instance. If we use an object of this inner class in our application, the containing object cannot be garbage collected as long as the inner object is reachable. This holds even after the containing object goes out of scope.

Consider a class that holds the reference to lots of bulky objects and has a non-static inner class. Now when we create an object of just the inner class, the memory model looks like:

However, if we just declare the inner class as static, then the same memory model looks like this:

This happens because the inner class object implicitly holds a reference to the outer class object, thereby making it an invalid candidate for garbage collection. The same happens in the case of anonymous classes.
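The effect can be sketched with two illustrative classes. BulkyOuter holds a large array, Inner is a non-static inner class, and Nested is a static nested class. The owner() method exists only to expose the hidden reference for demonstration. As long as an Inner instance is reachable, so are its BulkyOuter and the array.

```java
class BulkyOuter {
    // Stands in for the "lots of bulky objects" the outer class holds.
    final byte[] payload = new byte[10 * 1024 * 1024];

    // Non-static inner class: each instance keeps a hidden reference to
    // the BulkyOuter that created it.
    class Inner {
        // Exposes that hidden reference, for demonstration only.
        BulkyOuter owner() {
            return BulkyOuter.this;
        }
    }

    // Static nested class: no reference to any BulkyOuter instance.
    static class Nested {
    }
}

class InnerClassDemo {
    public static void main(String[] args) {
        // The BulkyOuter is reachable only through 'inner', yet that is enough
        // to keep it, and its 10 MB array, from being collected.
        BulkyOuter.Inner inner = new BulkyOuter().new Inner();
        System.out.println(inner.owner().payload.length); // 10485760

        // Creating a Nested instance needs no BulkyOuter at all.
        BulkyOuter.Nested nested = new BulkyOuter.Nested();
        System.out.println(nested.getClass().getSimpleName()); // Nested
    }
}
```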

Use of finalizers is yet another source of potential memory leaks. Whenever a class's finalize() method is overridden, objects of that class aren't garbage collected immediately. Instead, the GC queues them for finalization, which occurs at a later point in time.

Additionally, if the code in the finalize() method is slow and the finalizer queue cannot keep up with the garbage collector, then sooner or later our application is destined to meet an OutOfMemoryError.

To demonstrate this, let's consider that we have a class for which we have overridden the finalize() method and that the method takes a little bit of time to execute. When a large number of objects of this class gets garbage collected, then in VisualVM, it looks like:

However, if we just remove the overridden finalize() method, then the same program gives the following response:

For more details about finalize(), read section 3 (Avoiding Finalizers) in our Guide to the finalize Method in Java.
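Since Java 9, finalize() is deprecated. The JDK documentation points to explicit close() methods and java.lang.ref.Cleaner as alternatives. Here is a minimal sketch, assuming a hypothetical NativeBuffer whose cleanup is simulated by a counter. close() runs the cleanup deterministically. The Cleaner is only a safety net for a buffer that becomes unreachable without being closed.

```java
import java.lang.ref.Cleaner;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical resource holder that uses a Cleaner instead of finalize().
class NativeBuffer implements AutoCloseable {
    private static final Cleaner CLEANER = Cleaner.create();
    // Counts cleanup runs; stands in for actually freeing a native resource.
    static final AtomicInteger RELEASED = new AtomicInteger();

    // The cleanup action is a static nested class, so it holds no reference
    // to the NativeBuffer. If it did, the buffer would never become
    // unreachable and the Cleaner could never run the action.
    private static final class Release implements Runnable {
        @Override
        public void run() {
            RELEASED.incrementAndGet();
        }
    }

    private final Cleaner.Cleanable cleanable;

    NativeBuffer() {
        this.cleanable = CLEANER.register(this, new Release());
    }

    @Override
    public void close() {
        // Runs the action now; a Cleanable runs its action at most once.
        cleanable.clean();
    }
}

class CleanerDemo {
    public static void main(String[] args) {
        try (NativeBuffer buffer = new NativeBuffer()) {
            System.out.println("using the buffer");
        }
        System.out.println("released: " + NativeBuffer.RELEASED.get()); // released: 1
    }
}
```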

The Java String pool went through a major change in Java 7, when it was moved from PermGen to the heap. But for applications running on version 6 and below, we should be more careful when working with large Strings.

If we read a huge String object and call intern() on it, the string goes to the string pool, which is located in PermGen (permanent memory), and stays there as long as our application runs. This blocks memory and creates a major memory leak in our application.

The PermGen for this case in JVM 1.6 looks like this in VisualVM:

In contrast to this, in a method, if we just read a string from a file and do not intern it, then the PermGen looks like:

ThreadLocal (discussed in detail in Introduction to ThreadLocal in Java tutorial) is a construct that gives us the ability to isolate state to a particular thread and thus allows us to achieve thread safety.

When using this construct, each thread will hold an implicit reference to its copy of a ThreadLocal variable and will maintain its own copy, instead of sharing the resource across multiple threads, as long as the thread is alive.

Despite its advantages, the use of ThreadLocal variables is controversial, as they are infamous for introducing memory leaks if not used properly. Joshua Bloch once commented on ThreadLocal usage:

“Sloppy use of thread pools in combination with sloppy use of thread locals can cause unintended object retention, as has been noted in many places. But placing the blame on thread locals is unwarranted.”

ThreadLocals are supposed to be garbage collected once the holding thread is no longer alive. But the problem arises when ThreadLocals are used along with modern application servers.

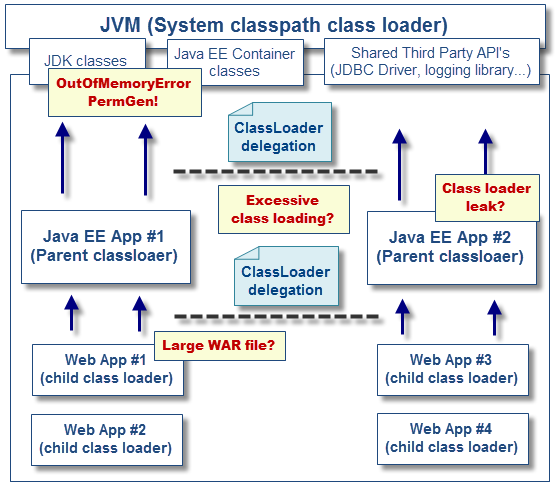

Modern application servers use a pool of threads to process requests instead of creating new ones (for example the Executor in case of Apache Tomcat). Moreover, they also use a separate classloader.

Since thread pools in application servers work on the concept of thread reuse, their threads are never garbage collected; instead, they're reused to serve other requests.

Now, if any class creates a ThreadLocal variable but doesn't explicitly remove it, then a copy of that object will remain with the worker Thread even after the web application is stopped, thus preventing the object from being garbage collected.
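Here is a minimal sketch of the usual remedy, assuming a hypothetical per-request CONTEXT value: call ThreadLocal.remove() in a finally block, so that a pooled worker thread does not carry the value into its next task. A single-thread executor stands in for the application server's pool here.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class ThreadLocalCleanup {
    // Hypothetical per-request state kept in a ThreadLocal.
    static final ThreadLocal<byte[]> CONTEXT = new ThreadLocal<>();

    static void handleRequest() {
        CONTEXT.set(new byte[1024 * 1024]); // 1 MB of per-request data
        try {
            // ... process the request using CONTEXT.get() ...
        } finally {
            // Without remove(), a pooled worker thread keeps this value
            // reachable for as long as the thread lives.
            CONTEXT.remove();
        }
    }

    public static void main(String[] args) throws Exception {
        // One worker thread, so both tasks run on the same, reused thread.
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            pool.submit(ThreadLocalCleanup::handleRequest).get();
            boolean leftover = pool.submit(() -> CONTEXT.get() != null).get();
            System.out.println("value left on worker thread: " + leftover); // false
        } finally {
            pool.shutdown();
            pool.awaitTermination(5, TimeUnit.SECONDS);
        }
    }
}
```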

Although there is no one-size-fits-all solution when dealing with memory leaks, there are some ways by which we can minimize these leaks.

Java profilers are tools that monitor and diagnose memory leaks in an application. They analyze what's going on internally in our application, for example, how memory is allocated.

Using profilers, we can compare different approaches and find areas where we can optimally use our resources.

We have used Java VisualVM throughout section 3 of this tutorial. Please check out our Guide to Java Profilers to learn about different types of profilers, like Mission Control, JProfiler, YourKit, Java VisualVM, and the NetBeans Profiler.

By enabling verbose garbage collection, we get a detailed trace of the GC. To enable this, we need to add the -verbose:gc parameter to our JVM configuration.

By adding this parameter, we can see the details of what's happening inside GC:

We can also use the reference objects built into the java.lang.ref package to deal with memory leaks. Instead of referencing objects directly, we use special references to them that allow them to be garbage collected more easily.

Reference queues are designed for making us aware of actions performed by the Garbage Collector. For more information, read Soft References in Java Baeldung tutorial, specifically section 4.
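To illustrate the java.lang.ref API, here is a minimal sketch of a hypothetical SoftValueCache. Values are held through SoftReference, so the GC may clear them under memory pressure. The ReferenceQueue tells the cache which references were cleared, so it can drop their now-empty map entries. It is a teaching sketch, not a production cache.

```java
import java.lang.ref.ReferenceQueue;
import java.lang.ref.SoftReference;
import java.util.HashMap;
import java.util.Map;

// Hypothetical cache whose values the GC may reclaim before throwing
// OutOfMemoryError, because they are only softly reachable from here.
class SoftValueCache<K, V> {
    // A soft reference that remembers its key, so a cleared entry can be
    // removed from the map once the GC enqueues it.
    private static final class Entry<K, V> extends SoftReference<V> {
        final K key;

        Entry(K key, V value, ReferenceQueue<? super V> queue) {
            super(value, queue);
            this.key = key;
        }
    }

    private final Map<K, Entry<K, V>> map = new HashMap<>();
    private final ReferenceQueue<V> queue = new ReferenceQueue<>();

    void put(K key, V value) {
        expungeCleared();
        map.put(key, new Entry<>(key, value, queue));
    }

    V get(K key) {
        expungeCleared();
        Entry<K, V> entry = map.get(key);
        return entry == null ? null : entry.get(); // null if absent or cleared
    }

    int size() {
        expungeCleared();
        return map.size();
    }

    @SuppressWarnings("unchecked")
    private void expungeCleared() {
        Entry<K, V> cleared;
        while ((cleared = (Entry<K, V>) queue.poll()) != null) {
            // Remove only if the slot still holds this exact reference.
            map.remove(cleared.key, cleared);
        }
    }
}

class SoftCacheDemo {
    public static void main(String[] args) {
        SoftValueCache<String, byte[]> cache = new SoftValueCache<>();
        byte[] data = new byte[1024];
        cache.put("report", data);
        // 'data' is still strongly referenced, so the soft reference is not cleared.
        System.out.println(cache.get("report") == data); // true
    }
}
```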

For projects on JDK 1.5 and above, Eclipse shows warnings and errors whenever it encounters obvious cases of memory leaks. So when developing in Eclipse, we can regularly visit the “Problems” tab and be more vigilant about memory leak warnings (if any):

We can measure and analyze the Java code's performance by executing benchmarks. This way, we can compare the performance of alternative approaches to do the same task. This can help us choose a better approach and may help us to conserve memory.

For more information about benchmarking, please head over to our Microbenchmarking with Java tutorial.

Finally, we always have the classic, old-school way of doing a simple code walk-through.

In some cases, even this trivial-looking method can help eliminate some common memory leak problems.

In layman's terms, we can think of memory leak as a disease that degrades our application's performance by blocking vital memory resources. And like all other diseases, if not cured, it can result in fatal application crashes over time.

Memory leaks are tricky to solve and finding them requires intricate mastery and command over the Java language. While dealing with memory leaks, there is no one-size-fits-all solution, as leaks can occur through a wide range of diverse events.

However, if we resort to best practices and regularly perform rigorous code walk-throughs and profiling, then we can minimize the risk of memory leaks in our application.

As always, the code snippets used to generate the VisualVM responses depicted in this tutorial are available on GitHub.

About Eugen Paraschiv: Eugen is a software engineer with a passion for Spring, REST APIs, Security and teaching, and the founder of Baeldung.

One of the core benefits of Java is the JVM, which provides out-of-the-box memory management. Essentially, we can create objects, and the Java Garbage Collector will take care of allocating and freeing up memory for us.

Nevertheless, memory leaks can still occur in Java applications.

In this article, we’re going to describe the most common memory leaks, understand their causes, and look at a few techniques to detect and avoid them. We’re also going to use the YourKit Java profiler throughout the article to analyze the state of our memory at runtime.

The standard definition of a memory leak is a scenario that occurs when objects are no longer being used by the application, but the Garbage Collector is unable to remove them from working memory – because they’re still being referenced. As a result, the application consumes more and more resources – which eventually leads to a fatal OutOfMemoryError .

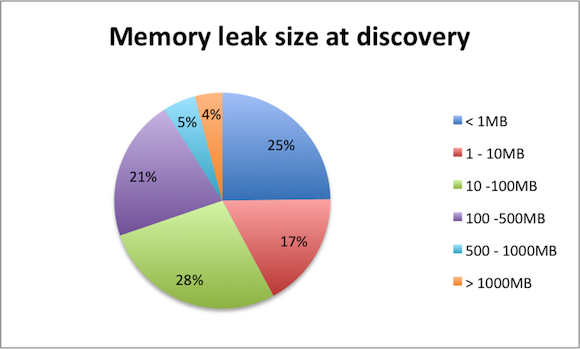

For a better understanding of the concept, here’s a simple visual representation:

As we can see, we have two types of objects, referenced and unreferenced; the Garbage Collector can remove objects that are unreferenced. Referenced objects won’t be collected, even if they’re actually no longer used by the application.

Detecting memory leaks can be difficult. A number of tools perform static analysis to determine potential leaks, but these techniques aren’t perfect because the most important aspect is the actual runtime behavior of the running system.

So, let’s have a focused look at some of the standard practices for preventing memory leaks by analyzing some common scenarios.

In this initial section, we’re going to focus on the classic memory leak scenario – where Java objects are continuously created without being released.

A useful technique for understanding these situations is to make memory leaks easier to reproduce by setting a lower size for the Heap. That’s why, when starting our application, we can adjust the JVM to suit our memory needs:

These parameters specify the initial Java Heap size as well as the maximum Heap size.

The first scenario that might cause a Java memory leak is referencing a heavy object with a static field.

Let’s have a look at a quick example:

We created our ArrayList as a static field – which will never be collected by the JVM Garbage Collector during the lifetime of the JVM process, even after the calculations it was used for are done. We also invoked Thread.sleep(10000) to allow the GC to perform a full collection and try to reclaim everything that can be reclaimed.

Let’s run the test and analyze the JVM with our profiler:

Notice how, at the very beginning, all memory is, of course, free.

Then, in just 2 seconds, the iteration process runs and finishes – loading everything into the list (naturally this will depend on the machine you’re running the test on).

After that, a full garbage collection cycle is triggered, and the test continues to execute, to allow this cycle time to run and finish. As you can see, the list is not reclaimed and the memory consumption doesn’t go down.

Let’s now see the exact same example, only this time, the ArrayList isn’t referenced by a static variable. Instead, it’s a local variable that gets created, used and then discarded:
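Here is a sketch of this variant. The names LocalListDemo and addElementsToTheList and the element count are illustrative. The method returns only the element count, so the list itself never escapes:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

class LocalListDemo {
    private static final Random RANDOM = new Random();

    // The list is local: once this method returns, nothing references it,
    // so the whole list becomes eligible for garbage collection.
    static int addElementsToTheList(int count) {
        List<Double> list = new ArrayList<>(count);
        for (int i = 0; i < count; i++) {
            list.add(RANDOM.nextDouble());
        }
        return list.size(); // only the count escapes, not the list
    }

    public static void main(String[] args) throws InterruptedException {
        addElementsToTheList(1_000_000);
        System.gc();          // a request, not a guarantee, that the JVM collects now
        Thread.sleep(10_000); // give the collector time, as in the static-field test
    }
}
```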

Once the method finishes its job, we’ll observe the major GC collection around the 50th second in the image below:

Notice how the GC is now able to reclaim some of the memory utilized by the JVM.

Now that you understand the scenario, there are of course ways to prevent it from occurring.

First, we need to pay close attention to our usage of static. Declaring any collection or heavy object as static ties its lifecycle to the lifecycle of the JVM itself and makes the entire object graph impossible to collect.

We also need to be aware of collections in general – that’s a common way to unintentionally hold on to references for longer than we need to.
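One common way to stop a cache-like collection from growing without bound is to cap its size. Below is a minimal sketch that uses LinkedHashMap.removeEldestEntry with least-recently-used eviction. BoundedCache and the capacity of 2 are illustrative. This is one option among several, not the article's own remedy.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// A size-capped map: once it holds more than maxEntries mappings, the
// least recently accessed one is evicted after each insertion.
class BoundedCache<K, V> extends LinkedHashMap<K, V> {
    private final int maxEntries;

    BoundedCache(int maxEntries) {
        super(16, 0.75f, true); // accessOrder = true gives LRU ordering
        this.maxEntries = maxEntries;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxEntries;
    }
}

class BoundedCacheDemo {
    public static void main(String[] args) {
        BoundedCache<String, Integer> cache = new BoundedCache<>(2);
        cache.put("a", 1);
        cache.put("b", 2);
        cache.get("a");    // "a" becomes the most recently used entry
        cache.put("c", 3); // evicts "b", the least recently used entry
        System.out.println(cache.keySet()); // [a, c]
    }
}
```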

The second group of scenarios that frequently causes memory leaks involves String operations – specifically the String.intern() API .

Let’s have a look at a quick example:
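The original test is not reproduced here. A minimal sketch of its core might look like this, assuming the caller supplies the path of a large text file. InternDemo and loadAndIntern are illustrative names.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

class InternDemo {
    // Reads the whole file into one String and returns its canonical copy.
    static String loadAndIntern(Path file) throws IOException {
        String str = new String(Files.readAllBytes(file), StandardCharsets.UTF_8);
        // intern() adds the content to the JVM's string pool (in PermGen on
        // Java 6 and earlier) and returns the pooled, canonical instance.
        return str.intern();
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 0) {
            System.err.println("usage: InternDemo <large-text-file>");
            return;
        }
        String canonical = loadAndIntern(Paths.get(args[0]));
        System.out.println(canonical.length());
    }
}
```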

Here, we simply try to load a large text file into running memory and then return a canonical form, using .intern().

The intern API will place the str String in the JVM memory pool – where it can’t be collected – and again, this will cause the GC to be unable to free up enough memory:

We can clearly see that for the first 15 seconds the JVM is stable. Then we load the file, and the JVM performs garbage collection (around the 20th second).

Finally, the str.intern() is invoked, which leads to the memory leak – the stable line indicating high heap memory usage, which will never be released.

Please remember that on Java 6 and earlier, interned String objects are stored in PermGen space. If our application is intended to perform a lot of operations on large strings, we might need to increase the size of the permanent generation:

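The second solution is to use Java 7 or later. There, the string pool lives in the regular heap, and Java 8 replaced PermGen with Metaspace altogether, so interning Strings can no longer exhaust PermGen. Interned strings still occupy heap memory, though, so heavy interning of large strings can still contribute to an OutOfMemoryError.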

Finally, we can also avoid calling the .intern() API on large Strings altogether.

Forgetting to close a stream is a very common scenario, and certainly one that most developers can relate to. The problem was partially removed in Java 7, when the try-with-resources statement introduced the ability to automatically close any resource that implements AutoCloseable, streams included.

Why partially? Because the try-with-resources syntax is optional:

Let’s see how the memory of the application looks when loading a large file from a URL:

As we can see, the heap usage is gradually increasing over time – which is the direct impact of the memory leak caused by not closing the stream.

Let’s dig a bit deeper into this scenario because it’s not as clear-cut as the rest. Technically, an unclosed stream will result in two types of leaks: a low-level resource leak and a memory leak.

The low-level resource leak is simply the leak of an OS-level resource – such as file descriptors, open connections, etc. These resources can also leak, just like memory does.

Of course, the JVM uses memory to keep track of these underlying resources as well, which is why this also results in a memory leak.

We always need to remember to close streams manually, or to make use of the auto-close feature introduced in Java 7:
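Here is a minimal sketch, assuming the task is to read a large text file line by line. LineCounter and the command-line file argument are illustrative.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

class LineCounter {
    // The reader declared in the try header is closed automatically when
    // the block exits, whether normally or because of an exception.
    static long countLines(Path file) throws IOException {
        try (BufferedReader reader = Files.newBufferedReader(file, StandardCharsets.UTF_8)) {
            long lines = 0;
            while (reader.readLine() != null) {
                lines++;
            }
            return lines;
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 0) {
            System.err.println("usage: LineCounter <file>");
            return;
        }
        System.out.println(countLines(Paths.get(args[0])));
    }
}
```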

In this case, the BufferedReader will be automatically closed at the end of the try statement, without the need to close it in an explicit finally block.

This scenario is quite similar to the previous one, with the primary difference of dealing with unclosed connections (e.g. to a database, to an FTP server, etc.). Again, improper implementation can do a lot of harm, leading to memory problems.

The URLConnection remains open, and the result is, predictably, a memory leak:

Notice how the Garbage Collector cannot do anything to release unused, but referenced memory. The situation is immediately clear after the 1st minute – the number of GC operations rapidly decreases, causing increased Heap memory use, which leads to the OutOfMemoryError.

The answer here is simple – we need to always close connections in a disciplined manner.
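For a URLConnection, a minimal sketch of that discipline might look like this. ConnectionReader is an illustrative name, and InputStream.readAllBytes requires Java 9 or later. try-with-resources closes the stream, and HTTP connections are also disconnected:

```java
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URI;
import java.net.URL;
import java.net.URLConnection;
import java.nio.charset.StandardCharsets;

class ConnectionReader {
    static String fetch(URL url) throws IOException {
        URLConnection conn = url.openConnection();
        // Closing the stream may free the network resources associated
        // with this connection.
        try (InputStream in = conn.getInputStream()) {
            return new String(in.readAllBytes(), StandardCharsets.UTF_8);
        } finally {
            // For HTTP, disconnect() may additionally close the underlying
            // socket if it is otherwise idle.
            if (conn instanceof HttpURLConnection) {
                ((HttpURLConnection) conn).disconnect();
            }
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 0) {
            System.err.println("usage: ConnectionReader <url>");
            return;
        }
        System.out.println(fetch(URI.create(args[0]).toURL()).length());
    }
}
```

Calling disconnect() may prevent the socket from being reused for later requests to the same server. Whether that trade-off is worthwhile depends on the application's traffic pattern.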

A simple but very common example that can lead to a memory leak is to use a HashSet with objects that are missing their hashCode() or equals() implementations.

Specifically, when we start adding duplicate objects into a Set – this will only ever grow, instead of ignoring duplicates as it should. We also won’t be able to remove these objects, once added.

Let’s create a simple class without either equals or hashCode :

This simple implementation will lead to the following scenario at runtime:

Notice how the garbage collector stopped being able to reclaim memory around 1:40, and notice the memory leak: the number of GC collections dropped almost fourfold immediately after.

In these situations, the solution is simple – it’s crucial to provide the hashCode() and equals() implementations.

One tool worth mentioning here is Project Lombok, which provides many default implementations through annotations, e.g. @EqualsAndHashCode.
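On Java 16 and later, records are another option: the compiler generates equals() and hashCode() from all record components. Here is a minimal sketch with a hypothetical Key record:

```java
import java.util.HashSet;
import java.util.Set;

// A record gets value-based equals() and hashCode() generated from its components.
record Key(String id) { }

class RecordKeyDemo {
    static int distinctCount(int inserts) {
        Set<Key> set = new HashSet<>();
        for (int i = 0; i < inserts; i++) {
            set.add(new Key("key")); // duplicates are recognized and ignored
        }
        return set.size();
    }

    public static void main(String[] args) {
        System.out.println(distinctCount(1000)); // prints 1
    }
}
```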

Diagnosing memory leaks is a lengthy process that requires a lot of practical experience, debugging skills and detailed knowledge of the application.

Let’s see which techniques can help you in addition to standard profiling.

One of the quickest ways to identify a memory leak is to enable verbose garbage collection.

By adding the -verbose:gc parameter to the JVM configuration of our application, we’re enabling a very detailed trace of GC. Summary reports are printed to standard output by default, which should help you understand how your memory is being managed.

The second technique is the one we’ve been using throughout this article, and that’s profiling. One of the most popular profilers is VisualVM, which is a good place to start moving past command-line JDK tools and into lightweight profiling.

In this article, we used another profiler, YourKit, which has some additional, more advanced features compared to VisualVM.

Finally, this is more of a general good practice than a specific technique to deal with memory leaks.

Simply put – review your code thoroughly, practice regular code reviews and make good use of static analysis tools to help you understand your code and your system.

In this tutorial, we had a practical look at how memory leaks happen on the JVM. Understanding how these scenarios happen is the first step in the process of dealing with them.

Then, having the techniques and tools to really see what’s happening at runtime, as the leak occurs, is critical as well. Static analysis and careful code-focused reviews can only do so much, and – at the end of the day – it’s the runtime that will show you the more complex leaks that aren’t immediately identifiable in the code.

Finally, leaks can be notoriously hard to find and reproduce because many of them only happen under intense load, which generally happens in production. This is where you need to go beyond code-level analysis and work on two main aspects – reproduction and early detection.

The best and most reliable way to reproduce memory leaks is to simulate the usage patterns of a production environment as closely as possible, with the help of a good suite of performance tests.

And early detection is where a solid performance management solution and even an early detection solution can make a significant difference, as it’s the only way to have the necessary insight into the runtime of your application in production.

The full implementation of this tutorial can be found over on GitHub. This is a Maven-based project, so it can simply be imported and run as it is.
