Fake User Agent

⚡ ALL INFORMATION CLICK HERE 👈🏻👈🏻👈🏻

Fake User Agent

Sign up with email

Sign up

Sign up with Google

Sign up with GitHub

Sign up with Facebook

Asked

6 years, 1 month ago

1,659 3 3 gold badges 6 6 silver badges 19 19 bronze badges

1,696 3 3 gold badges 12 12 silver badges 14 14 bronze badges

1,315 1 1 gold badge 9 9 silver badges 23 23 bronze badges

404k 101 101 gold badges 896 896 silver badges 1056 1056 bronze badges

thanks for your answer, I tried with the headers in my requests but still could not get the real content of the page, there's a string 'Your web browser must have JavaScript enabled in order for this application to display correctly.' in the returned html page, should I add java script support in the requests? If so how would I do that?

– user1726366

Dec 26 '14 at 4:32

@user1726366: You can't simply add JavaScript support - you need a JavaScript interpreter for that. The simplest approach is to use the JavaScript interpreter of a real Web browser, but you can automate that from Python using Selenium .

– PM 2Ring

Dec 26 '14 at 5:44

@alecxe,@sputnick: I tried to capture the packets with wireshark to compare the difference from using python requests and browser, seems like the website url isn't a static one I have to wait for the page render to complete, so Selenium sounds the right tools for me. Thank you for your kind help. :)

– user1726366

Dec 26 '14 at 9:26

@user1726366 yup, if using a real browser+selenium fits your needs then this is the most painless approach. Note that you can use PhantomJS headless browser with selenium. Thanks. (don't forget to accept the answer if it was helpful)

– alecxe

Dec 26 '14 at 14:12

Turns out some search engines filter some UserAgent . Anyone know why ? Could anyone provide a list of acceptable UserAgent s ?

– dallonsi

Aug 6 '20 at 12:18

3,103 1 1 gold badge 5 5 silver badges 20 20 bronze badges

1,213 1 1 gold badge 15 15 silver badges 18 18 bronze badges

still getting Error 404

– Maksim Kniazev

May 13 '18 at 23:16

404 is different error, you sure you are able to browse the page using a browser?

– Umesh Kaushik

May 15 '18 at 8:08

Absolutely. I feel like the web site that I am trying to use blocked all Amazon EC2 IPs.

– Maksim Kniazev

May 16 '18 at 8:58

Could you please ping the link here? I can try at my end. Further if IP is blocked then error code should be 403(forbidden) or 401(unauthorised). There are websites which do not allow scraping at all. Further many websites user cloudflare to avoid bots to access website.

– Umesh Kaushik

May 17 '18 at 5:19

Here is my link regalbloodline.com/music/eminem . It worked fine before. Stopped working on python 2. Worked on python 3 on local machine. Move to AWS EC2 did not work there. Kept getting Error 404. Then stopped working on local machine too. Using browser emulation worked on local machine but not on EC2. In the end I gave up and found alternative website to scrape. By the way is cloudfire could be avoided ?

– Maksim Kniazev

May 17 '18 at 6:13

139k 29 29 gold badges 195 195 silver badges 193 193 bronze badges

1,446 1 1 gold badge 11 11 silver badges 20 20 bronze badges

21 1 1 silver badge 2 2 bronze badges

1,659 3 3 gold badges 6 6 silver badges 19 19 bronze badges

Senior React Engineer with eye for design - London or remote (in EU time-zone)

Backend Software Engineer, Creator Tooling

Team Lead (.NET) - Solve interesting problems from home!

Senior Java Web Developer / Consultant - Work in an open source company!

Stack Overflow

Questions

Jobs

Developer Jobs Directory

Salary Calculator

Help

Mobile

Disable Responsiveness

Products

Teams

Talent

Advertising

Enterprise

Company

About

Press

Work Here

Legal

Privacy Policy

Terms of Service

Contact Us

Stack Exchange Network

Technology

Life / Arts

Culture / Recreation

Science

Other

Join Stack Overflow to learn, share knowledge, and build your career.

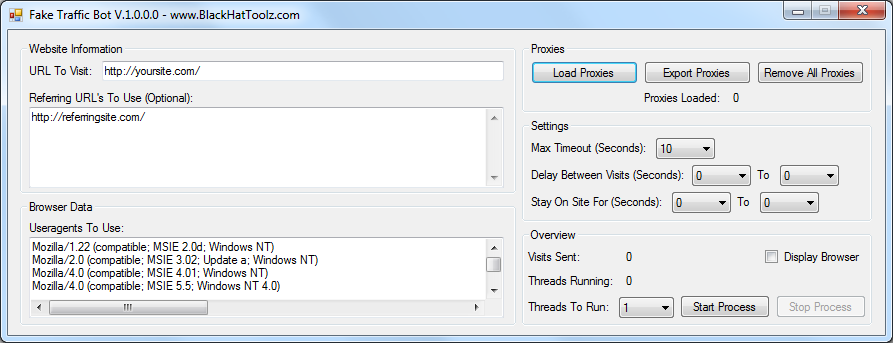

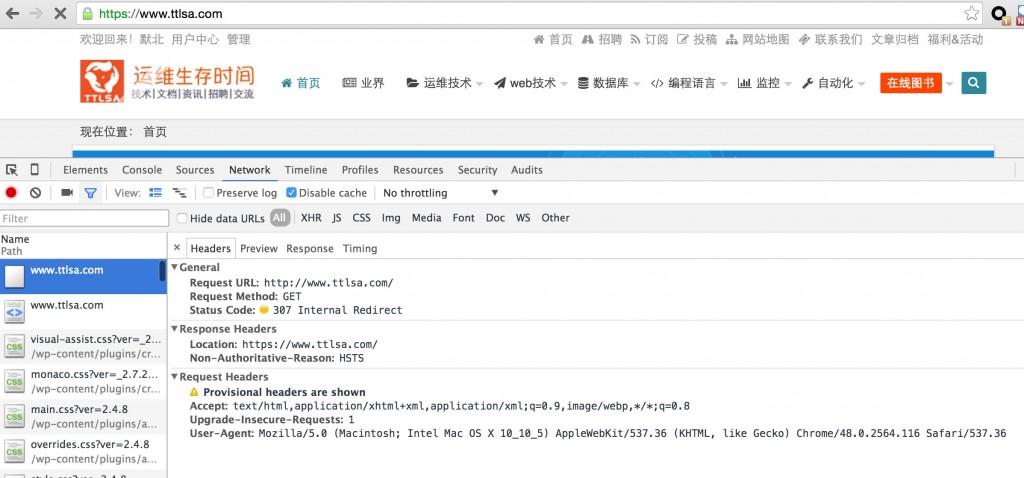

I want to get the content from this website.

If I use a browser like Firefox or Chrome I could get the real website page I want, but if I use the Python requests package (or wget command) to get it, it returns a totally different HTML page.

I thought the developer of the website had made some blocks for this.

How do I fake a browser visit by using python requests or command wget?

FYI, here is a list of User-Agent strings for different browsers:

As a side note, there is a pretty useful third-party package called fake-useragent that provides a nice abstraction layer over user agents:

Up to date simple useragent faker with real world database

Try doing this, using firefox as fake user agent (moreover, it's a good startup script for web scraping with the use of cookies):

The root of the answer is that the person asking the question needs to have a JavaScript interpreter to get what they are after. What I have found is I am able to get all of the information I wanted on a website in json before it was interpreted by JavaScript. This has saved me a ton of time in what would be parsing html hoping each webpage is in the same format.

So when you get a response from a website using requests really look at the html/text because you might find the javascripts JSON in the footer ready to be parsed.

I had a similar issue but I was unable to use the UserAgent class inside the fake_useragent module. I was running the code inside a docker container

I targeted the endpoint used in the module. This solution still gave me a random user agent however there is the possibility that the data structure at the endpoint could change.

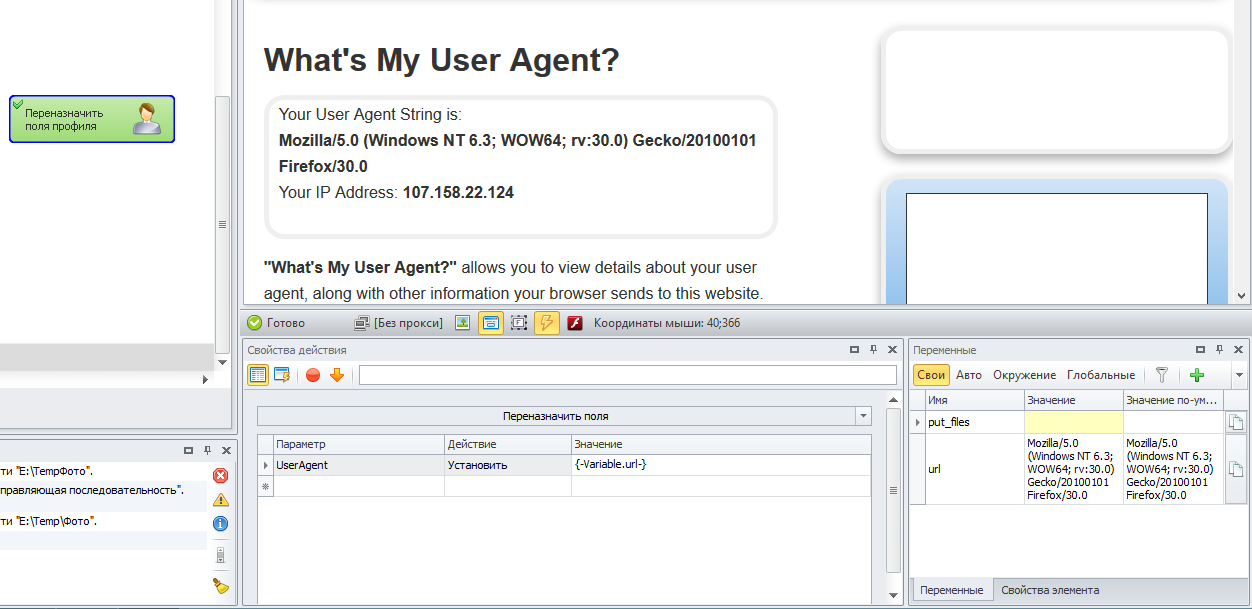

You need to create a header with a proper formatted User agent String, it server to communicate client-server.

You can check your own user agent Here .

I found this module very simple to use, in one line of code it randomly generates a User agent string.

This is how, I have been using a random user agent from a list of nearlly 1000 fake user agents

Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36

By clicking “Post Your Answer”, you agree to our terms of service , privacy policy and cookie policy

To subscribe to this RSS feed, copy and paste this URL into your RSS reader.

site design / logo © 2021 Stack Exchange Inc; user contributions licensed under cc by-sa . rev 2021.2.5.38499

GitHub - alecxe/scrapy- fake - useragent : Random User - Agent middleware...

web scraping - How to use Python requests to fake ... - Stack Overflow

How to fake and rotate User Agents using Python 3

Python Examples of fake _ useragent . UserAgent

Python fake _ useragent примеры... - HotExamples

How to fake and rotate User Agents using Python 3

DOWNLOADER_MIDDLEWARES = {

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware' : None ,

'scrapy_useragents.downloadermiddlewares.useragents.UserAgentsMiddleware' : 500 ,

}

USER_AGENTS = [

( 'Mozilla/5.0 (X11; Linux x86_64) '

'AppleWebKit/537.36 (KHTML, like Gecko) '

'Chrome/57.0.2987.110 '

'Safari/537.36' ), # chrome

( 'Mozilla/5.0 (X11; Linux x86_64) '

'AppleWebKit/537.36 (KHTML, like Gecko) '

'Chrome/61.0.3163.79 '

'Safari/537.36' ), # chrome

( 'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:55.0) '

'Gecko/20100101 '

'Firefox/55.0' ), # firefox

( 'Mozilla/5.0 (X11; Linux x86_64) '

'AppleWebKit/537.36 (KHTML, like Gecko) '

'Chrome/61.0.3163.91 '

'Safari/537.36' ), # chrome

( 'Mozilla/5.0 (X11; Linux x86_64) '

'AppleWebKit/537.36 (KHTML, like Gecko) '

'Chrome/62.0.3202.89 '

'Safari/537.36' ), # chrome

( 'Mozilla/5.0 (X11; Linux x86_64) '

'AppleWebKit/537.36 (KHTML, like Gecko) '

'Chrome/63.0.3239.108 '

'Safari/537.36' ), # chrome

]

Please DO NOT contact us for any help with our Tutorials and Code using this form or by calling us, instead please add a comment to the bottom of the tutorial page for help

Turn the Internet into meaningful, structured and usable data

To rotate user agents in Python here is what you need to do

There are different methods to do it depending on the level of blocking you encounter.

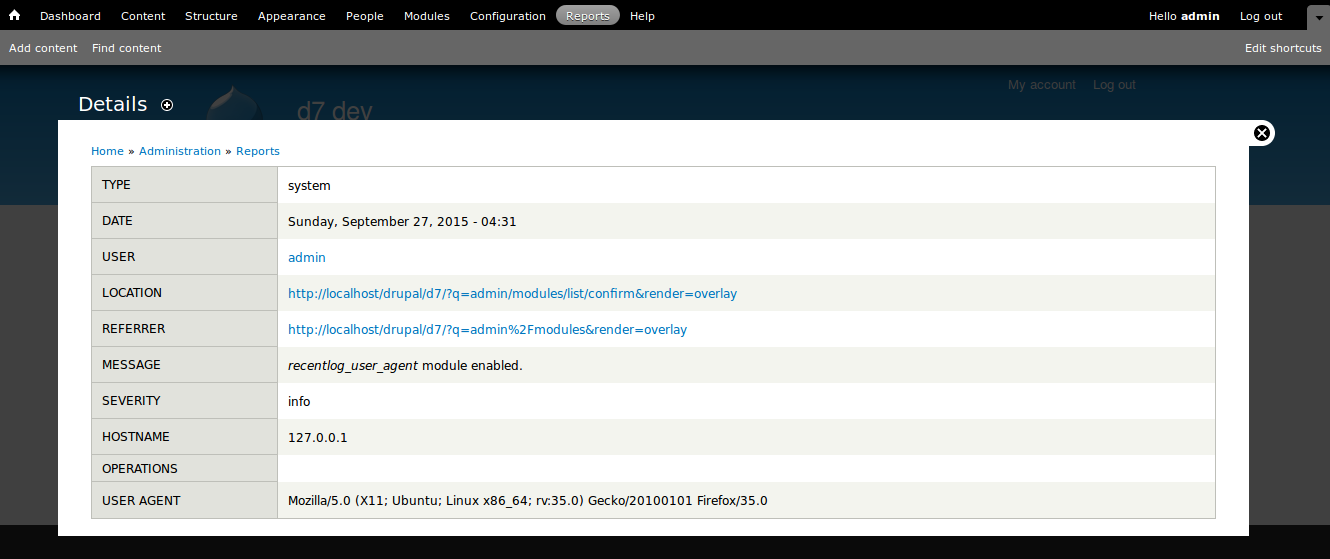

A user agent is a string that a browser or application sends to each website you visit. A typical user agent string contains details like – the application type, operating system, software vendor, or software version of the requesting software user agent. Web servers use this data to assess the capabilities of your computer, optimizing a page’s performance and display. User-Agents are sent as a request header called “User-Agent”.

User-Agent: Mozilla/ () ()

Below is the User-Agent string for Chrome 83 on Mac Os 10.15

Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.97 Safari/537.36

Before we look into rotating user agents, let’s see how to fake or spoof a user agent in a request.

Most websites block requests that come in without a valid browser as a User-Agent. For example here are the User-Agent and other headers sent for a simple python request by default while making a request.

Ignore the X-Amzn-Trace-Id as it is not sent by Python Requests, instead generated by Amazon Load Balancer used by HTTPBin.

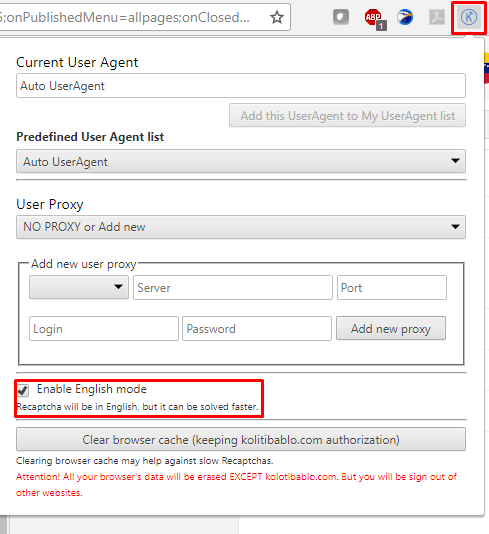

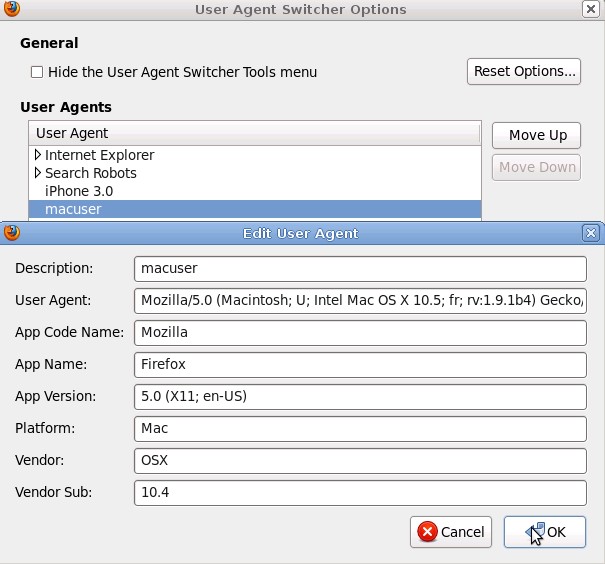

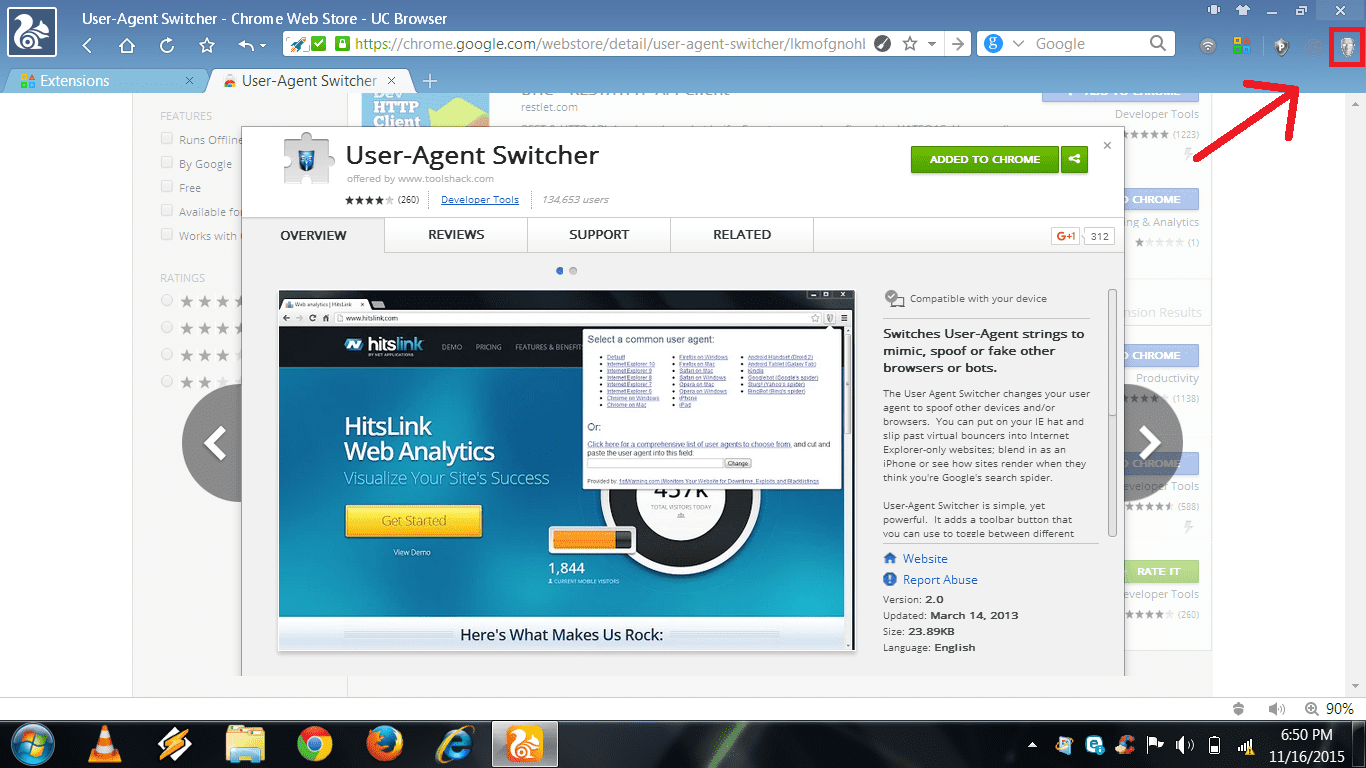

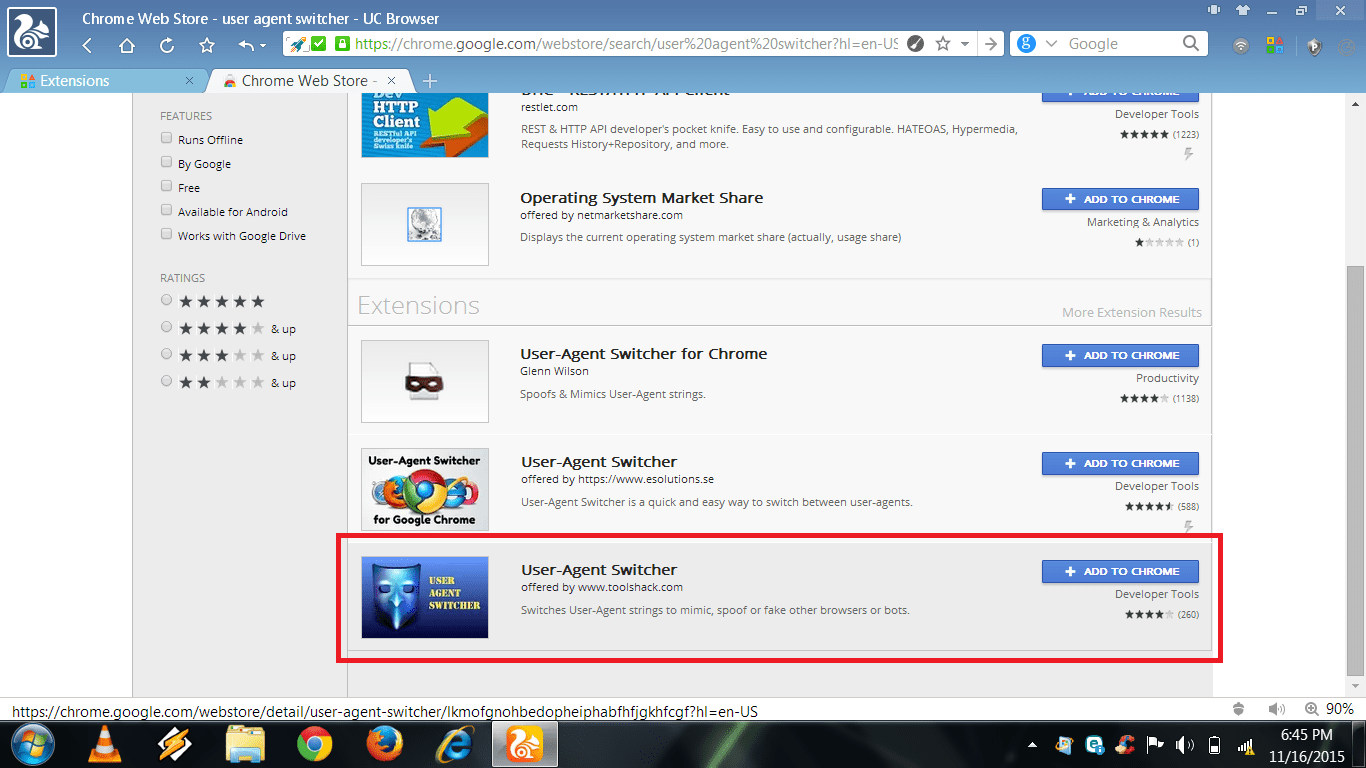

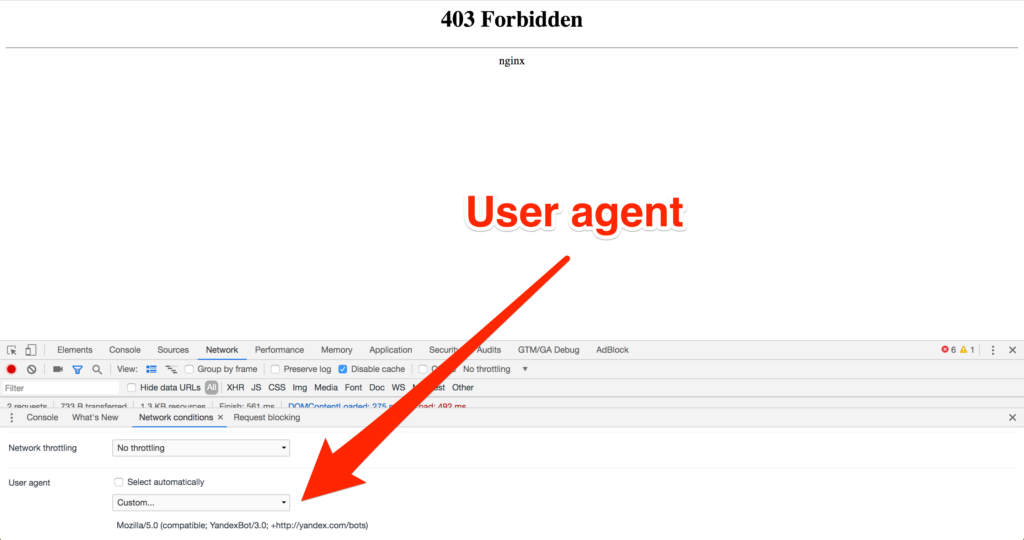

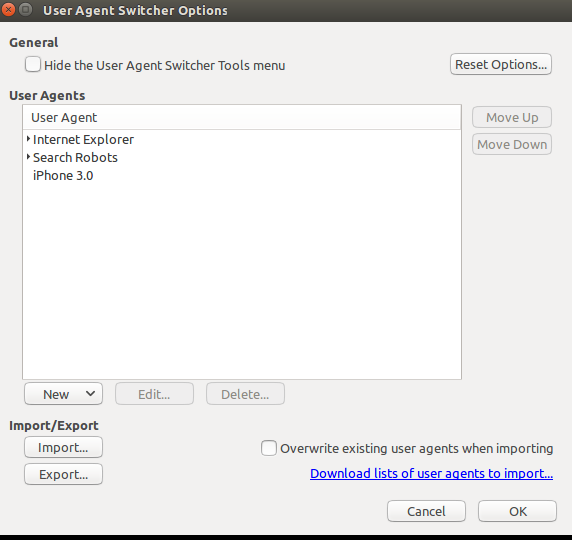

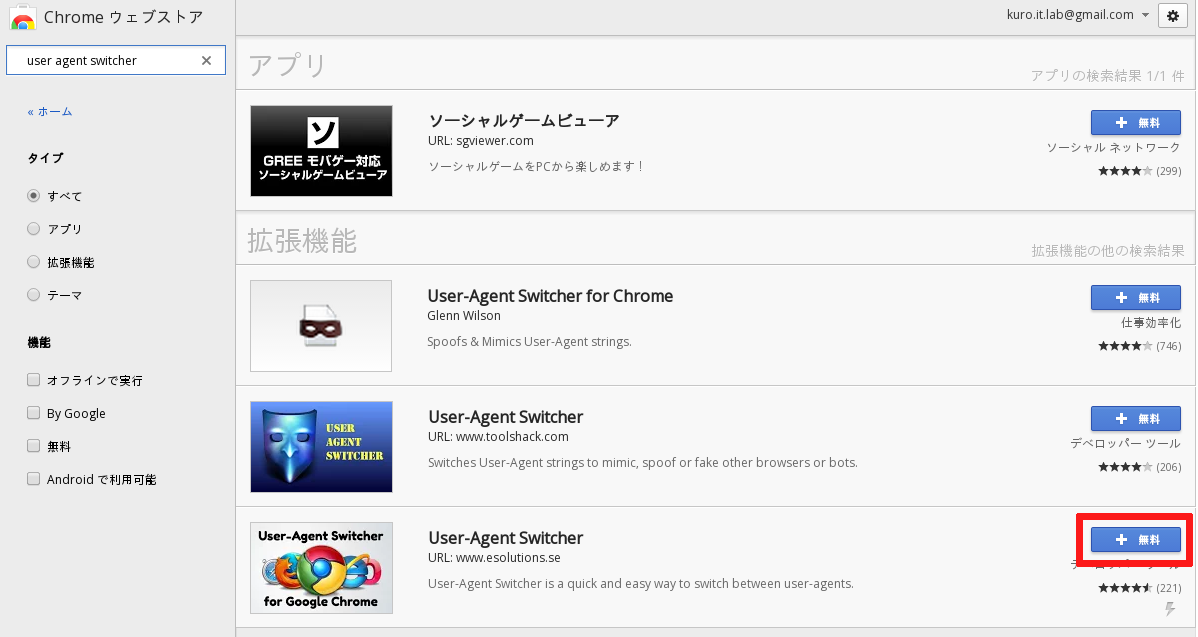

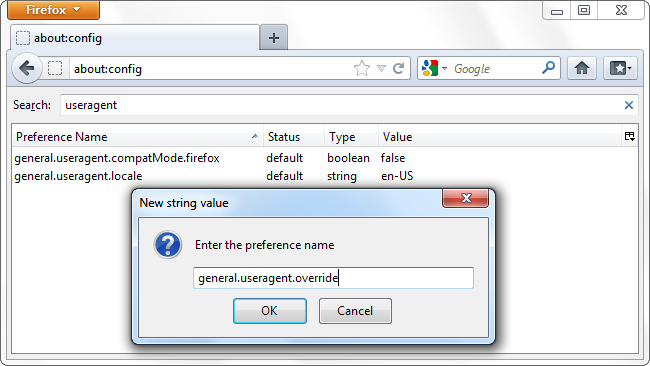

Any website could tell that this came from Python Requests, and may already have measures in place to block such user agents . User-agent spoofing is when you replace the user agent string your browser sends as an HTTP header with another character string. Major browsers have extensions that allow users to change their User-agent.

We can fake the user agent by changing the User-Agent header of the request and bypass such User-Agent based blocking scripts used by websites.

To change the User-Agent using Python Requests, we can pass a dict with a key ‘User-Agent’ with the value as the User-Agent string of a real browser,

Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_4) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/83.0.4103.97 Safari/537.36

As before lets ignore the headers that start with X- as they are generated by Amazon Load Balancer used by HTTPBin, and not from what we sent to the server

But, websites that use more sophisticated anti-scraping tools can tell this request did not come from Chrome.

Although we had set a user agent, the other headers that we sent are different from what the real chrome browser would have sent.

Here is what real Chrome would have sent

It is missing these headers chrome would sent when downloading an HTML Page or has the wrong values for it

Anti Scraping Tools can easily detect this request as a bot a so just sending a User-Agent wouldn’t be good enough to get past the latest anti-scraping tools and services.

Let’s add these missing headers and make the request look like it came from a real chrome browser

Now, this request looks more like it came from Chrome 83, and should get you past most anti scraping tools – if you are not flooding the website with requests.

If you are making a large number of requests for web scraping a website, it is a good idea to randomize. You can make each request you send look random, by changing the exit IP address of the request using rotating proxies and sending a different set of HTTP headers to make it look like the request is coming from different computers from different browsers

If you are just rotating user agents. The process is very simple.

To rotate user agents in Scrapy, you need an additional middleware. There are a few Scrapy middlewares that let you rotate user agents like:

Our example is based on Scrapy-UserAgents.

Add in settings file of Scrapy add the following lines

Most of the techniques above just rotates the User-Agent header, but we already saw that it is easier for bot detection tools to block you when you are not sending the other correct headers for the user agent you are using.

To get better results and less blocking, we should rotate a full set of headers associated with each User-Agent we use. We can prepare a list like that by taking a few browsers and going to https://httpbin.org/headers and copy the set headers used by each User-Agent. ( Remember to remove the headers that start with X- in HTTPBin )

Browsers may behave differently to different websites based on the features and compression methods each website supports. A better way is

In order to make your requests from web scrapers look as if they came from a real browser:

Having said that, let’s get to the final piece of code

You cannot see the order in which the requests were sent in HTTPBin, as it orders them alphabetically.

There you go! We just made these requests look like they came from real browsers.

Rotating user agents can help you from getting blocked by websites that use intermediate levels of bot detection, but advanced anti-scraping services has a large array of tools and data at their disposal and can see past your user agents and IP address.

Turn the Internet into meaningful, structured and usable data

Anti scraping tools lead to scrapers performing web scraping blocked. We provided web scraping best practices to bypass anti scraping

When scraping many pages from a website, using the same IP addresses will lead to getting blocked. A way to avoid this is by rotating IP addresses that can prevent your scrapers from being disrupted.…

Here are the high-level steps involved in this process and we will go through each of these in detail - Building scrapers, Running web scrapers at scale, Getting past anti-scraping techniques, Data Validation and Quality…

There is a python lib called “fake-useragent” which helps getting a list of common UA.

Great find. We had used fake user agent before, but at times we feel like the user agent lists are outdated.

I have to import “urllib.request” instead of “requests”, otherwise it does not work.

agreed, same for me. I think that was a typo.

requests is different package, it should be installed separately, with “pip install requests”. But urllib.request is a system library always included in your Python installation

requests use urllib3 packages, you need install requests with pip install.

Hi there, thanks for the great tutorials!

Just wondering; if I’m randomly rotating both ips and user agents is there a danger in trying to visit the same URL or website multiple times from the same ip address but with a different user agent and that looking suspicious?

Nick,

There is no definite answer to these things – they all vary from site to site and time to time.

There is a library whose name is shadow-useragent wich provides updated User Agents per use of the commmunity : no more outdated UserAgent! https://github.com/lobstrio/shadow-useragent

There is a website front to a review database which to access with Python will require both faking a User Agent and a supplying a login session to access certain data. Won’t this mean that if I rotate user agents and IP addresses under the same login session it will essentially tell the database I am scraping? Is there any way around this?

………………..

ordered_headers_list = []

for headers in headers_list:

h = OrderedDict()

for header,value in headers.items():

h[header]=value

ordered_headers_list.append(h)

for i in range(1,4):

#Pick a random browser headers

headers = random.choice(headers_list)

#Create a request session

r = requests.Session()

r.headers = headers

# Download the page using requests

print(“Downloading %s”%url)

r = r.get(url, headers=i,headers[‘User-Agent’])

# Simple check to check if page was blocked (Usually 503)

if r.status_code > 500:

if “To discuss automated access to Amazon data please contact” in r.text:

print(“Page %s was blocked by Amazon. Please try using better proxies\n”%url)

else:

print(“Page %s must have been blocked by Amazon as the status code was %d”%(url,r.status_code))

return None

# Pass the HTML of the page and create

return e.extract(r.text)

# product_data = []

with open(“asin.txt”,’r’) as urllist, open(‘hasil-GRAB.txt’,’w’) as outfile:

for url in urllist.read().splitlines():

data = scrape(url)

if data:

json.dump(data,outfile)

outfile.write(“\n”)

# sleep(5)

can anyone help me to combine this random user agent with the amazon.py script that is in the amazon product scrapping tutorial in this tutorial —-> https://www.scrapehero.com/tutorial-how-to-scrape-amazon-product-details-using-python-and-selectorlib/

Is there any library like fakeuseragent that will give you list of headers in correct order including user agent to avoid manual spoofing like in the example code.

@melmefolti We haven’t found anything so far. A lot of effort would be needed to check each Browser Version, Operating System combination and keep these values updated.

Very useful article with that single component clearly missing. I have come across pycurl and uncurl packages for python which return the same thing as the website, but in alphabetical order. Perhaps the only option is the create a quick little scraper for the cURL website, to then feed the main scraper of whatever other website you’re looking at

You can try curl with the -I option

ie curl -I https://www.example.com and see if that helps

why exactly do we need to open the network tab?

‘Open an incognito or a private tab in a browser, go to the Network tab of each browsers developer tools, and visit the link you are trying to scrape directly in the browser.

Copy the curl command to that request –

curl ‘https://www.amazon.com/’ -H ‘User-Agent:…’

does the navigator have something to do with the curl command?

The curl command is copied from that window – so it is needed.

In the line “Accept-Encoding”: “gzip, deflate,br”,

the headers having Br is not working it is printing gibberish when i try to use beautiful soup with that request .

You can safely remove the br and it will still work

Your email address will not be published. Required fields are marked *

Legal Disclaimer: ScrapeHero is an equal opportunity data service provider, a conduit, just like

an ISP. We just gather data for our customers responsibly and sensibly. We do not store or resell data.

We only provide the technologies and data pipes to scrape publicly available data. The mention of any

company names, trademarks or data sets on our site does not imply we can or will scrape them. They are

listed only as an illustration of the types of requests we get. Any code provided in our tutorials is

for learning only, we are not responsible for how it is used.

Young Boy Fuck Sexy

Japanese Family Incest

Sex Mom Incest Porn

Old Dad Fuck Young Daughter

Xxx Lolita 2021 Sex

f_auto/p/629325a2-9b5f-11e6-bb5a-00163ec9f5fa/3491759788/user-agent-switcher-screenshot.png" width="550" alt="Fake User Agent" title="Fake User Agent">

f_auto/p/629325a2-9b5f-11e6-bb5a-00163ec9f5fa/3491759788/user-agent-switcher-screenshot.png" width="550" alt="Fake User Agent" title="Fake User Agent">

.png)