Ansible SSH Private Key

mail.example.com

[webservers]
foo.example.com
bar.example.com

[dbservers]
one.example.com
two.example.com
three.example.com

all:
  hosts:
    mail.example.com:
  children:
    webservers:
      hosts:
        foo.example.com:
        bar.example.com:
    dbservers:
      hosts:
        one.example.com:
        two.example.com:
        three.example.com:

jumper ansible_port=5555 ansible_host=192.0.2.50

hosts:
  jumper:
    ansible_port: 5555
    ansible_host: 192.0.2.50
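To make the alias mechanism concrete, here is a minimal Python sketch of how the INI `jumper` host line above could be read. The function names `parse_host_line` and `connection_target` are hypothetical helpers written for this illustration, not part of Ansible; the sketch assumes port 22 when `ansible_port` is absent.

```python
import shlex

DEFAULT_SSH_PORT = 22  # standard SSH port, assumed when ansible_port is absent


def parse_host_line(line):
    """Split an INI inventory host line into (alias, variables).

    Every value is kept as a string, as in the INI key=value syntax.
    """
    alias, *pairs = shlex.split(line)
    variables = dict(pair.split("=", 1) for pair in pairs)
    return alias, variables


def connection_target(alias, variables):
    """Return the (address, port) pair that would actually be contacted."""
    address = variables.get("ansible_host", alias)
    port = int(variables.get("ansible_port", DEFAULT_SSH_PORT))
    return address, port


alias, variables = parse_host_line("jumper ansible_port=5555 ansible_host=192.0.2.50")
print(alias, connection_target(alias, variables))
```

The alias is only a label; the address and port come from the variables when they are set, and fall back to the alias and the default port otherwise.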

[webservers]
www[01:50].example.com
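As an illustration of what the `www[01:50].example.com` pattern stands for, the following Python sketch expands a numeric range the way the text describes it: inclusive, with leading zeros preserved. The helper `expand_numeric_range` is hypothetical and handles only a single numeric range; it is not Ansible's own implementation.

```python
import re

# Matches one numeric range such as [01:50]; alphabetic ranges are not handled here.
RANGE = re.compile(r"\[(\d+):(\d+)\]")


def expand_numeric_range(pattern):
    """Expand the first [start:end] numeric range in a host pattern.

    The range is inclusive, and the zero padding of the start value is kept.
    """
    match = RANGE.search(pattern)
    if match is None:
        return [pattern]
    start, end = match.group(1), match.group(2)
    width = len(start) if start.startswith("0") else 0
    return [
        pattern[:match.start()] + str(number).zfill(width) + pattern[match.end():]
        for number in range(int(start), int(end) + 1)
    ]


hosts = expand_numeric_range("www[01:50].example.com")
print(len(hosts), hosts[0], hosts[-1])
```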

[targets]
localhost ansible_connection=local
other1.example.com ansible_connection=ssh ansible_user=mpdehaan
other2.example.com ansible_connection=ssh ansible_user=mdehaan

[atlanta]
host1 http_port=80 maxRequestsPerChild=808
host2 http_port=303 maxRequestsPerChild=909

[atlanta]
host1
host2

[atlanta:vars]
ntp_server=ntp.atlanta.example.com
proxy=proxy.atlanta.example.com

atlanta:
  hosts:
    host1:
    host2:
  vars:
    ntp_server: ntp.atlanta.example.com
    proxy: proxy.atlanta.example.com

[atlanta]
host1
host2

[raleigh]
host2
host3

[southeast:children]
atlanta
raleigh

[southeast:vars]
some_server=foo.southeast.example.com
halon_system_timeout=30
self_destruct_countdown=60
escape_pods=2

[usa:children]
southeast
northeast
southwest
northwest

all:
  children:
    usa:
      children:
        southeast:
          children:
            atlanta:
              hosts:
                host1:
                host2:
            raleigh:
              hosts:
                host2:
                host3:
          vars:
            some_server: foo.southeast.example.com
            halon_system_timeout: 30
            self_destruct_countdown: 60
            escape_pods: 2
        northeast:
        northwest:
        southwest:

Any host that is a member of a child group is automatically a member of the parent group.

A child group’s variables will have higher precedence than (override) a parent group’s variables.

Groups can have multiple parents and children, but not circular relationships.

Hosts can also be in multiple groups, but there will only be one instance of a host, merging the data from the multiple groups.
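The properties above can be sketched in Python using the atlanta/raleigh/southeast example data. This is a simplified model, not Ansible's actual resolution code: it assumes groups are merged from the shallowest ancestor to the deepest child, with ties broken alphabetically, and the `escape_pods` override on `atlanta` is a hypothetical value added only to show a child overriding its parent.

```python
# Inventory data taken from the atlanta/raleigh/southeast example.
# The escape_pods override on "atlanta" is hypothetical, added to show precedence.
GROUP_HOSTS = {"atlanta": ["host1", "host2"], "raleigh": ["host2", "host3"]}
GROUP_CHILDREN = {"usa": ["southeast"], "southeast": ["atlanta", "raleigh"]}
GROUP_VARS = {
    "southeast": {"some_server": "foo.southeast.example.com", "escape_pods": 2},
    "atlanta": {"escape_pods": 5},
}


def members(group):
    """Return every host in a group, including hosts of its child groups."""
    hosts = set(GROUP_HOSTS.get(group, []))
    for child in GROUP_CHILDREN.get(group, []):
        hosts |= members(child)
    return hosts


def depth(group):
    """Number of ancestor levels above a group (0 for a top-level group)."""
    parents = [p for p, kids in GROUP_CHILDREN.items() if group in kids]
    return 1 + max(depth(p) for p in parents) if parents else 0


def merged_vars(host):
    """Merge group variables for a host, parents first so children override."""
    groups = {g for g in set(GROUP_HOSTS) | set(GROUP_CHILDREN) if host in members(g)}
    result = {}
    for group in sorted(groups, key=lambda g: (depth(g), g)):
        result.update(GROUP_VARS.get(group, {}))
    return result


print(sorted(members("usa")))
print(merged_vars("host1"))
print(merged_vars("host3"))
```

Each host ends up with one flat dictionary, which matches the statement that there is only one instance of a host with the data from its groups merged.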

/etc/ansible/group_vars/raleigh # can optionally end in '.yml', '.yaml', or '.json'
/etc/ansible/group_vars/webservers
/etc/ansible/host_vars/foosball
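The three paths above follow a simple rule: `group_vars/<group>` and `host_vars/<host>` next to the inventory file. A small Python sketch of that rule follows; the helper `candidate_var_files` is hypothetical, and the inventory path `/etc/ansible/hosts` is assumed here because it is the default location mentioned in this document.

```python
from pathlib import PurePosixPath


def candidate_var_files(inventory_file, host, groups):
    """List group_vars/host_vars paths looked up next to an inventory file.

    Only the extension-less names are returned; the same names may also
    end in .yml, .yaml, or .json.
    """
    base = PurePosixPath(inventory_file).parent
    paths = [base / "group_vars" / group for group in groups]
    paths.append(base / "host_vars" / host)
    return [str(path) for path in paths]


for path in candidate_var_files("/etc/ansible/hosts", "foosball", ["raleigh", "webservers"]):
    print(path)
```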

---
ntp_server: acme.example.org
database_server: storage.example.org

/etc/ansible/group_vars/raleigh/db_settings
/etc/ansible/group_vars/raleigh/cluster_settings

some_host         ansible_port=2222     ansible_user=manager
aws_host          ansible_ssh_private_key_file=/home/example/.ssh/aws.pem
freebsd_host      ansible_python_interpreter=/usr/local/bin/python
ruby_module_host  ansible_ruby_interpreter=/usr/bin/ruby.1.9.3
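To show roughly how connection variables such as `ansible_ssh_private_key_file` influence an SSH login, here is a simplified Python sketch that turns a host's variables into an `ssh` command line using the standard `-i` (identity file) and `-p` (port) options. The helper `ssh_argv` is hypothetical; Ansible's own ssh connection plugin assembles a more elaborate command.

```python
def ssh_argv(alias, variables):
    """Build an illustrative ssh command line from inventory variables.

    This is a simplified, hypothetical helper; Ansible's own ssh
    connection plugin assembles a more elaborate command.
    """
    argv = ["ssh"]
    if "ansible_ssh_private_key_file" in variables:
        argv += ["-i", variables["ansible_ssh_private_key_file"]]
    if "ansible_port" in variables:
        argv += ["-p", str(variables["ansible_port"])]
    target = variables.get("ansible_host", alias)
    if "ansible_user" in variables:
        target = f"{variables['ansible_user']}@{target}"
    return argv + [target]


print(ssh_argv("aws_host", {"ansible_ssh_private_key_file": "/home/example/.ssh/aws.pem"}))
print(ssh_argv("some_host", {"ansible_port": "2222", "ansible_user": "manager"}))
```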

- name: create jenkins container
  docker_container:
    docker_host: myserver.net:4243
    name: my_jenkins
    image: jenkins

- name: add container to inventory
  add_host:
    name: my_jenkins
    ansible_connection: docker
    ansible_docker_extra_args: "--tlsverify --tlscacert=/path/to/ca.pem --tlscert=/path/to/client-cert.pem --tlskey=/path/to/client-key.pem -H=tcp://myserver.net:4243"
    ansible_user: jenkins
  changed_when: false

- name: create directory for ssh keys
  delegate_to: my_jenkins
  file:
    path: "/var/jenkins_home/.ssh/jupiter"
    state: directory

You are reading an unmaintained version of the Ansible documentation. Unmaintained Ansible versions can contain unfixed security vulnerabilities (CVE). Please upgrade to a maintained version. See the latest Ansible documentation.

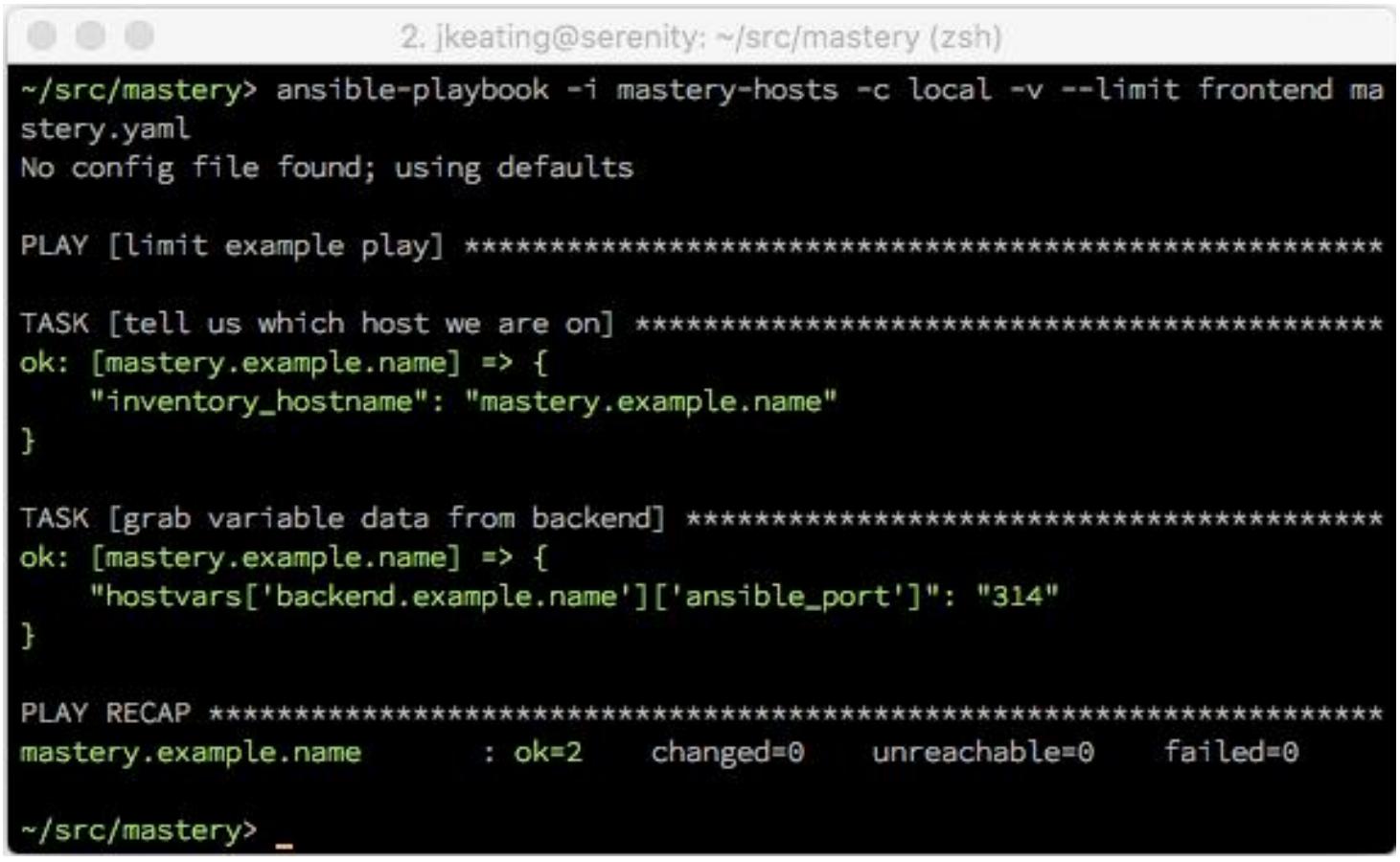

Ansible works against multiple systems in your infrastructure at the same time.

It does this by selecting portions of systems listed in Ansible’s inventory, which defaults to being saved in the location /etc/ansible/hosts.

You can specify a different inventory file using the -i option on the command line.

Not only is this inventory configurable, but you can also use multiple inventory files at the same time and pull inventory from dynamic or cloud sources or different formats (YAML, INI, etc.), as described in Dynamic Inventory.

Since version 2.4, Ansible has had inventory plugins that make this flexible and customizable.

The inventory file can be in one of many formats, depending on the inventory plugins you have.

For this example, the format for /etc/ansible/hosts is INI-like (one of Ansible’s defaults) and looks like this:

The headings in brackets are group names, which are used in classifying systems and deciding what systems you are controlling at what times and for what purpose.

It is OK to put systems in more than one group; for instance, a server could be both a webserver and a dbserver. If you do, note that variables will come from all of the groups the host is a member of. Variable precedence is detailed in a later chapter.

If you have hosts that run on non-standard SSH ports you can put the port number after the hostname with a colon.

Ports listed in your SSH config file won’t be used with the paramiko connection but will be used with the openssh connection.

To make things explicit, it is suggested that you set them if things are not running on the default port:

Suppose you have just static IPs and want to set up some aliases that live in your host file, or you are connecting through tunnels.

You can also describe hosts via variables:

In the above example, running Ansible against the host alias “jumper” (which may not even be a real hostname) will contact 192.0.2.50 on port 5555.

Note that this is using a feature of the inventory file to define some special variables.

Generally speaking, this is not the best way to define variables that describe your system policy, but we’ll share suggestions on doing this later.

Values passed in the INI format using the key=value syntax are not interpreted as Python literal structures (strings, numbers, tuples, lists, dicts, booleans, None), but as strings. For example, var=FALSE would create a string equal to ‘FALSE’.

Do not rely on types set during definition; always specify the type with a filter, when needed, at the point where you consume the variable.
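The pitfall is easy to reproduce outside Ansible. The Python sketch below shows why a string such as ‘FALSE’ must be converted explicitly before being used as a condition. The helper `to_bool` is hypothetical and only loosely modelled on what a boolean filter (such as `| bool` in a template) does; the exact set of strings treated as true is an assumption.

```python
# Strings treated as true by this sketch; the exact set is an assumption.
TRUE_STRINGS = {"1", "on", "yes", "true", "t", "y"}


def to_bool(value):
    """Convert an inventory string to a boolean (hypothetical helper)."""
    if isinstance(value, bool):
        return value
    return str(value).strip().lower() in TRUE_STRINGS


raw = "FALSE"  # what var=FALSE yields in an INI inventory: a string
print(bool(raw))     # any non-empty string is truthy in Python
print(to_bool(raw))  # explicit conversion gives the intended value
```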

If you are adding a lot of hosts following similar patterns, you can do this rather than listing each hostname:

For numeric patterns, leading zeros can be included or removed, as desired. Ranges are inclusive. You can also define alphabetic ranges:

Ansible 2.0 has deprecated the “ssh” from ansible_ssh_user, ansible_ssh_host, and ansible_ssh_port to become ansible_user, ansible_host, and ansible_port. If you are using a version of Ansible prior to 2.0, you should continue using the older style variables (ansible_ssh_*). These shorter variables are ignored, without warning, in older versions of Ansible.

You can also select the connection type and user on a per host basis:

As mentioned above, setting these in the inventory file is only a shorthand, and we’ll discuss how to store them in individual files in the ‘host_vars’ directory a bit later on.

As described above, it is easy to assign variables to hosts that will be used later in playbooks:

Variables can also be applied to an entire group at once:

Be aware that this is only a convenient way to apply variables to multiple hosts at once; even though you can target hosts by group, variables are always flattened to the host level before a play is executed.

It is also possible to make groups of groups using the :children suffix in INI or the children: entry in YAML.

You can apply variables to these groups using :vars (INI) or vars: (YAML):

If you need to store lists or hash data, or prefer to keep host and group specific variables separate from the inventory file, see the next section.

Child groups have a couple of properties to note:

There are two default groups: all and ungrouped. all contains every host. ungrouped contains all hosts that don’t have another group aside from all. Every host will always belong to at least 2 groups. Though all and ungrouped are always present, they can be implicit and not appear in group listings like group_names.

The preferred practice in Ansible is to not store variables in the main inventory file.

In addition to storing variables directly in the inventory file, host and group variables can be stored in individual files relative to the inventory file (not directory, it is always the file).

These variable files are in YAML format. Valid file extensions include ‘.yml’, ‘.yaml’, ‘.json’, or no file extension.

See YAML Syntax if you are new to YAML.

Assuming the inventory file path is:

If the host is named ‘foosball’, and in groups ‘raleigh’ and ‘webservers’, variables in YAML files at the following locations will be made available to the host:

For instance, suppose you have hosts grouped by datacenter, and each datacenter uses some different servers. The data in the groupfile ‘/etc/ansible/group_vars/raleigh’ for the ‘raleigh’ group might look like:

It is okay if these files do not exist, as this is an optional feature.

As an advanced use case, you can create directories named after your groups or hosts, and Ansible will read all the files in these directories in lexicographical order. An example with the ‘raleigh’ group:

All hosts that are in the ‘raleigh’ group will have the variables defined in these files available to them. This can be very useful to keep your variables organized when a single file starts to be too big, or when you want to use Ansible Vault on a part of a group’s variables. Note that this only works on Ansible 1.4 or later.

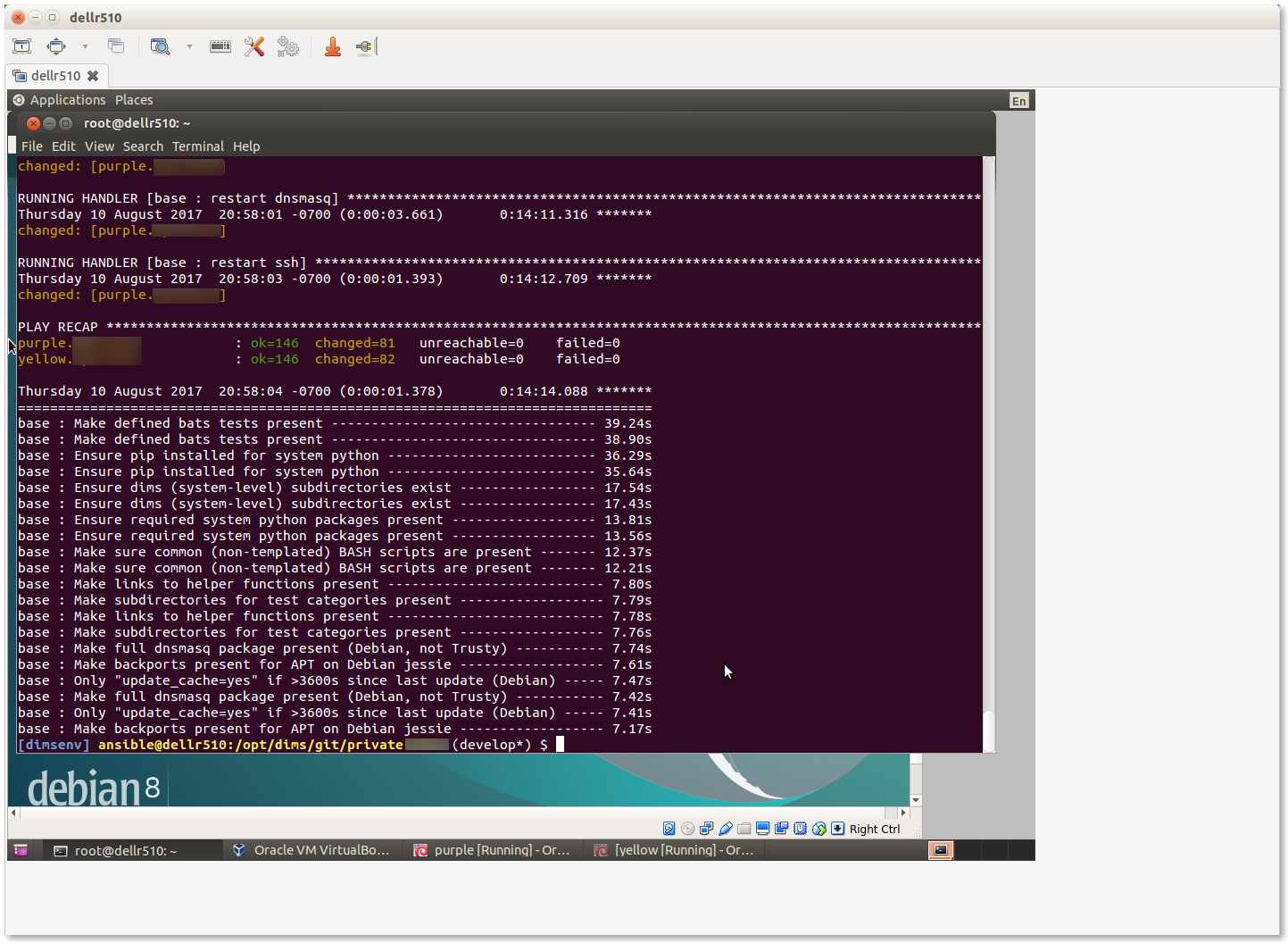

Tip: In Ansible 1.2 or later the group_vars/ and host_vars/ directories can exist in the playbook directory OR the inventory directory. If both paths exist, variables in the playbook directory will override variables set in the inventory directory.

Tip: Keeping your inventory file and variables in a git repo (or other version control) is an excellent way to track changes to your inventory and host variables.

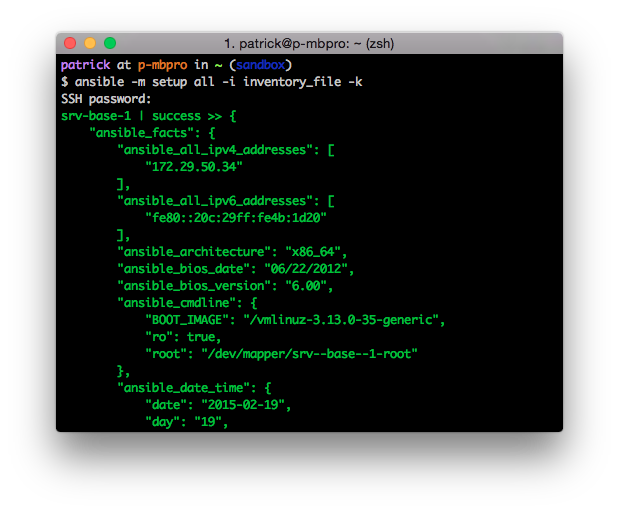

As alluded to above, setting the following variables controls how Ansible interacts with remote hosts.

Privilege escalation (see Ansible Privilege Escalation for further details):

Remote host environment parameters:

Examples from an Ansible-INI host file:

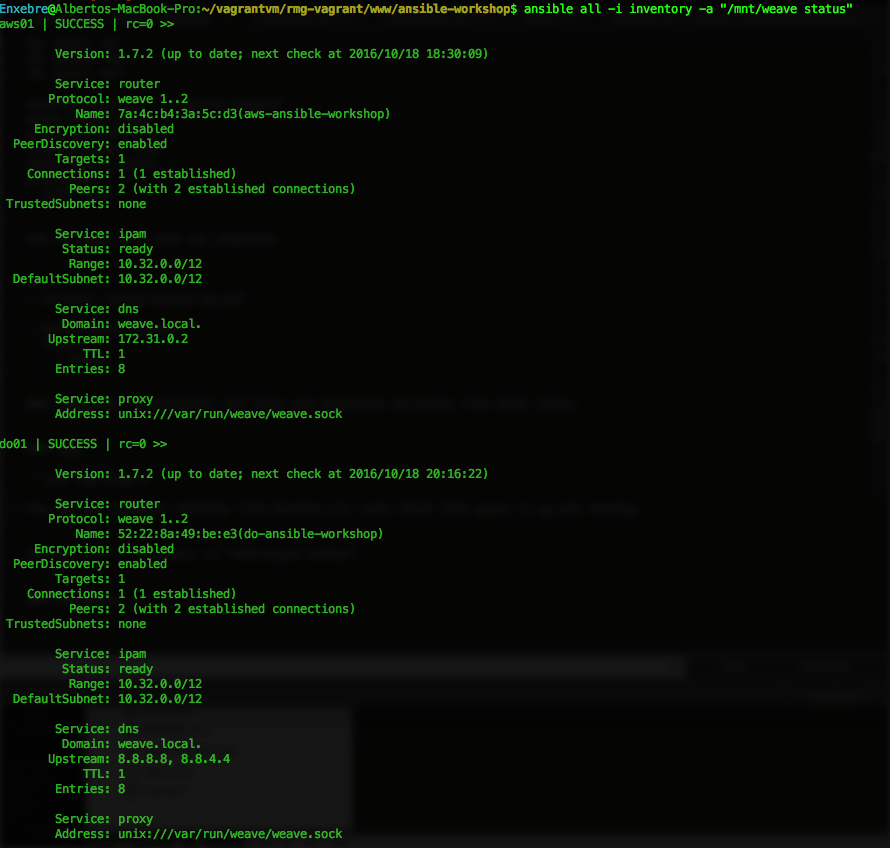

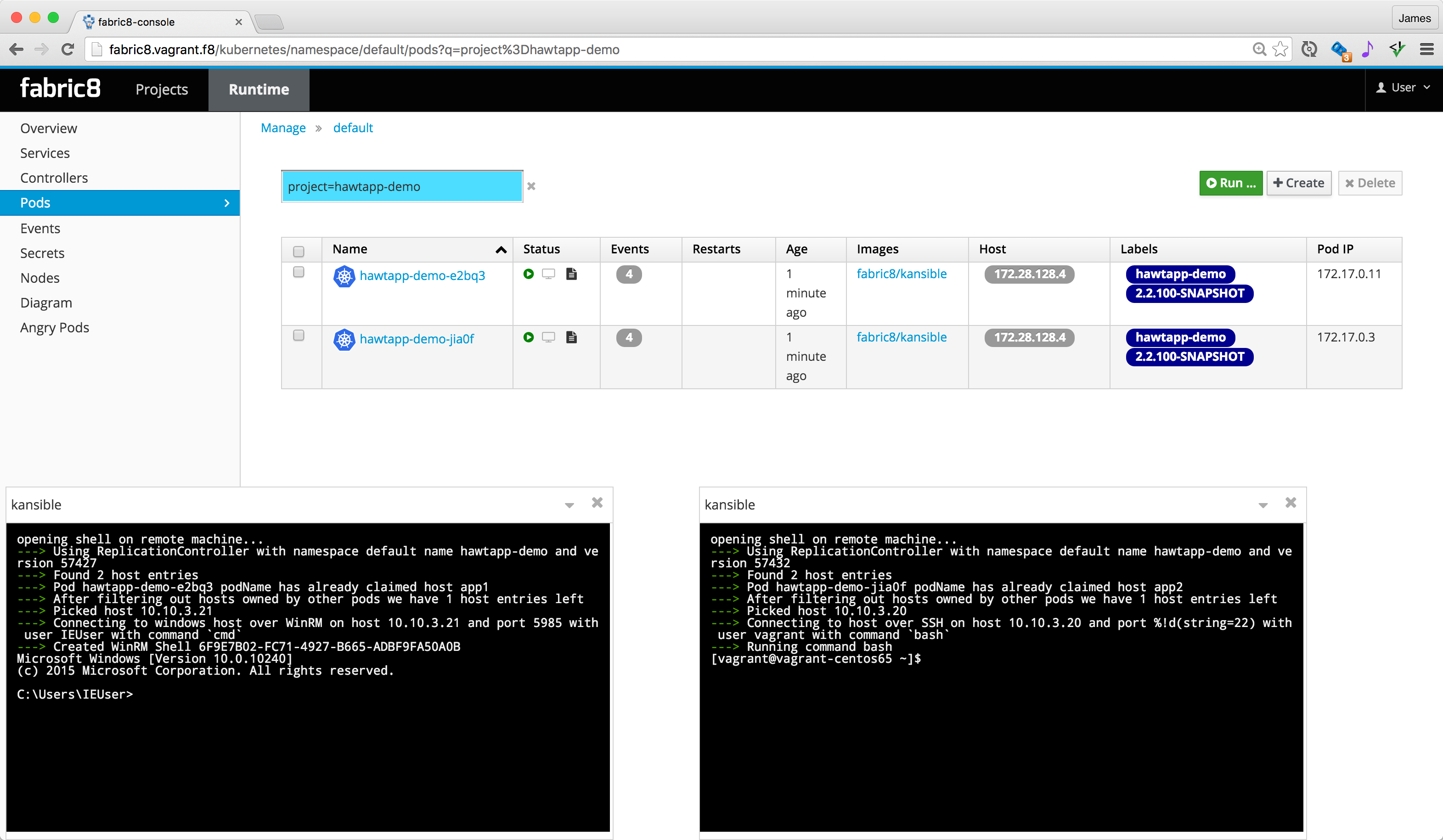

As stated in the previous section, Ansible executes playbooks over SSH, but it is not limited to this connection type.

With the host-specific parameter ansible_connection=<connector>, the connection type can be changed.

The following non-SSH based connectors are available:

This connector can be used to deploy the playbook to the control machine itself.

This connector deploys the playbook directly into Docker containers using the local Docker client. The following parameters are processed by this connector:

Here is an example of how to instantly deploy to created containers:

If you’re reading the docs from the beginning, this may be the first example you’ve seen of an Ansible playbook. This is not an inventory file.

Playbooks will be covered in great detail later in the docs.

Copyright © 2017 Red Hat, Inc.

Last updated on Dec 01, 2020.

Allow ssh private keys to be used that are encrypted with vault #22382

Dirrk opened this issue on Mar 7, 2017 · 32 comments · May be fixed by #52739

Labels: affects_2.2, c:plugins/connection/ssh, feature, has_pr, support:core, waiting_on_contributor

ansible_ssh_private_key: '-----BEGIN RSA PRIVATE KEY-----so secret much secure-----END RSA PRIVATE KEY-----'

[atlanta]
host1 ansible_ssh_private_key_vault_file="{{ inventory_dir }}/atlanta.key"
host2 ansible_ssh_private_key_file="{{ inventory_dir }}/atlanta.key"

[atlanta]
host1 ansible_ssh_private_key_file="{{ inventory_dir }}/atlanta.key"
host2 ansible_ssh_private_key_file="{{ inventory_dir }}/atlanta.key"

👍

143

❤️

23

👀

5

ansibot

added

affects_2.2

c:plugins/connection/ssh

feature_idea

needs_triage

labels

Mar 7, 2017

JJediny

mentioned this issue

Mar 9, 2017

rcarrillocruz

removed

the

needs_triage

label

Mar 10, 2017

ansibot

added

the

waiting_on_contributor

label

Mar 13, 2017

Dirrk

mentioned this issue

Mar 18, 2017

ansibot

added

the

support:core

label

Jun 29, 2017

ansibot

added

feature

and removed

feature_idea

labels

Mar 2, 2018

webknjaz

added this to To Do - Backlog only. Anything Can be here.

in Ansible 2.8

Sep 7, 2018

webknjaz

removed this from To Do - Backlog only. Anything Can be here.

in Ansible 2.8

Sep 10, 2018

loicalbertin

mentioned this issue

Nov 18, 2018

webknjaz

linked a pull request that will

close

this issue

Feb 21, 2019

ansibot

added

the

has_pr

label

Jul 26, 2019

enoman

mentioned this issue

Feb 4, 2021

sinatommy

mentioned this issue

Feb 19, 2021

Sign up for free

to join this conversation on GitHub .

Already have an account?

Sign in to comment

© 2021 GitHub, Inc.

Terms

Privacy

Security

Status

Docs

Contact GitHub

Pricing

API

Training

Blog

About

You can’t perform that action at this time.

You signed in with another tab or window. Reload to refresh your session.

You signed out in another tab or window. Reload to refresh your session.

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and

privacy statement . We’ll occasionally send you account related emails.

Already on GitHub?

Sign in

to your account

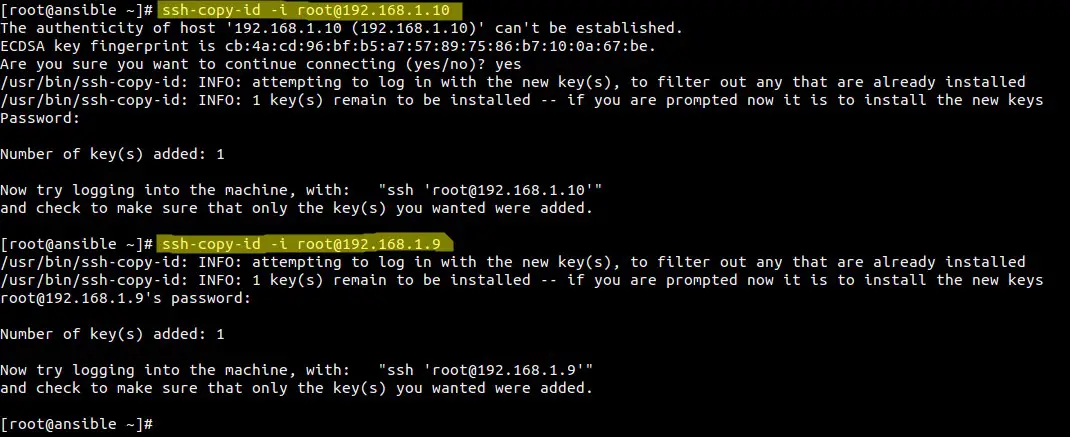

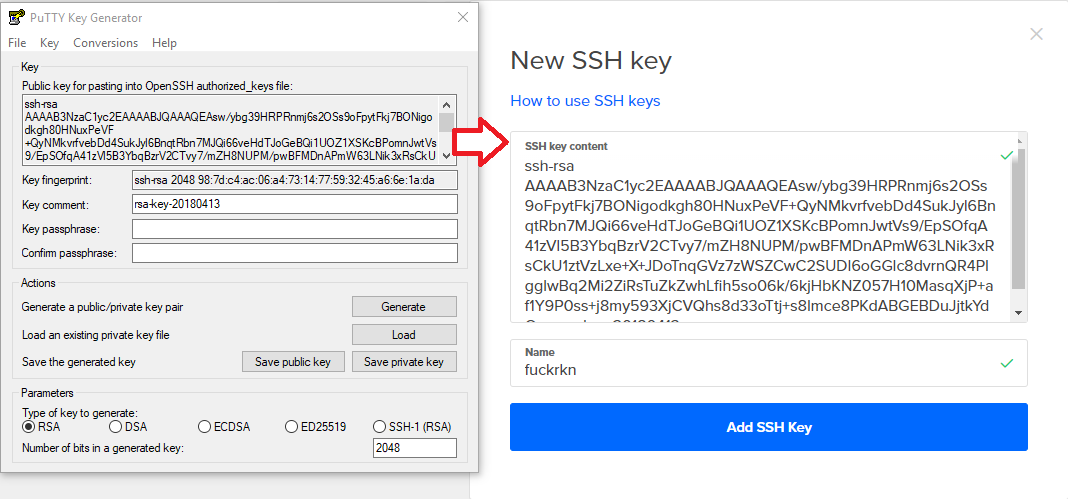

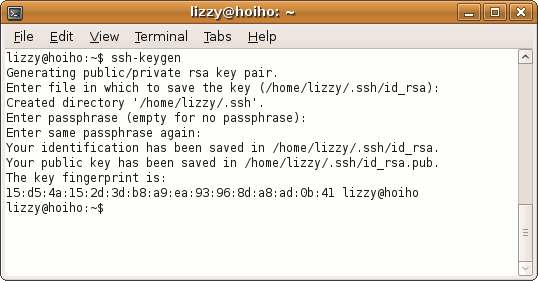

Core library specific to ssh authentication

I am proposing that a new variable (ansible_ssh_private_key) be added as an alternative to ansible_ssh_private_key_file.

When defining hosts, I would like to be able to use an ssh private key that is stored securely using ansible-vault. There are basically 3 ways to achieve this and I have listed them below:

Add an ansible_ssh_private_key variable that can be a string version of the private key (this uses the already allowed vaulting of group_vars / host_vars files)

Add ansible_ssh_private_key_vault_file that is a file that is encrypted with ansible-vault and decrypted at run time to provide the key to the ssh agent.

Change how ansible_ssh_private_key_file works so that it first attempts to decrypt using ansible-vault when it is encrypted.

Personally, I don't care which way this feature is added and I am more than willing to write the code for it. I just want to know whether, and in what form, the community / devs want this feature added.

I am going to reference each of the solutions individually on how they would be used.

inventory/group_vars/atlanta.yml (showing as decrypted obviously)

inventory/atlanta.key (showing as decrypted obviously)

inventory/atlanta.key (showing as decrypted obviously)

In all 3 cases, I expect that when logging in to servers in the atlanta group, Ansible would use the provided ssh private key to log in:

There are no results, as this feature doesn't exist.

@Dirrk I'm not totally opposed to the idea. I think there will be a lot of caveats to getting it right, but those can probably get ironed out in the PR and in the devel pipeline.

If you want to work on a patch to enable this feature, please hop on #ansible-devel on irc.freenode.net and get the larger group of devs to review your PR(s).

Why use ansible-vault for this instead of using ssh-agent?

Without significant changes to ansible-vault, any of the variations mentioned above are going to have decrypted copies of the ssh private keys floating around in the ansible process.

@alikins, hey, just wanted to follow up here after our discussion yesterday. To recap, the desire to have this feature stems from wanting ansible to be able to use ssh key files that are stored securely in vault. However, it could also be used by dynamic inventory scripts that return a private key for certain servers, or for various other purposes that are not currently possible.

We also discussed ways of implementing this without leaving decrypted ssh keys all over the ansible client computer. This is fairly trivial with paramiko_ssh, but I am still investigating the ssh route to make sure both ssh connection plugins can use this feature. If you or anyone else has any ideas on handling the default ssh connection plugin without a file being created, it would be very helpful.

I am putting a PR in that has the implementation of this feature on the paramiko plugin, just to get some early feedback on the direction of this while I continue to work on the default ssh plugin. #22764

@Dirrk Hey. I would like to do exactly as you describe so I am very interested in this feature. In our setups, private keys get shipped in vaults, meaning provisioning should be able to happen straight away from any machine with Ansible and the vault password; it would be nice not to have to juggle ssh keys or setup ssh-agents first!

Have you done any more work or had further discussions following your work last month on #22764 ?

I found this issue when I was attempting to run ansible playbooks in a CI system. We don't wish to expose any secrets in our repository source code. We have it set up so that the vault password file is mounted in the CI-worker containers. Without this feature (and without using ssh passwords), the best option I can think of is to store the vault-encrypted private key file in the repository, then have a task either in our playbook or before our playbook that decrypts the file and places it somewhere in the container's filesystem. I find it preferable to have in-memory private keys for the duration of a playbook run compared to writing a private key to a CI disk. Just a thought, I understand that this is not the primary use case.

The following snippet works around my previously mentioned issue and avoids both writing plaintext to disk and prolonged plaintext in memory (as alikins mentioned on March 13). This snippet is intended for ansible_connection=local.

The following snippet works around my previously mentioned issue and avoids both writing plaintext to disk and prolonged plaintext in memory ...

Nice. Possible improvements (based in part on Lennart Poettering's " /tmp or not /tmp? "):

You can also take the ssh-agent handling entirely out of ansible:

ssh-agent bash -c "ansible-vault view files/vault_ssh_key | ssh-add - && ansible-playbook ..."

At the time of running the playbook, should ansible be able to source the key from Azure Key Vault and use that private key to log in to the hosts? Does anyone know a way to source the private key from Azure Key Vault?

@apassi you are not guaranteed to have XDG_RUNTIME_DIR.

@zsh2k ansible_ssh_private_key_file requires a file path, not the contents, so you'll have to fetch the key and write it to a local file. This is a limitation of the ssh utility, since we need to pass it a file and cannot insert the key's content as a value.

@bcoca That part of my message was quoted. I am running the whole of ansible within ssh-agent, which gets the private key from ansible-vault, and if that succeeds, the ansible playbook is started. In my approach, the ssh-agent is terminated after execution, because I don't want to leave it running in the background.

This is a problem for us: we are fetching the key from Conjur (a secrets management solution like HashiCorp), so we have the contents, but not in a file.

The way I envision this being used is with aws_secret to automatically pull the ssh password from AWS Secrets Manager via the inventory file.

If I had different host groups with different keys, "foo", "bar" and "baz" my inventory.yml would look something like this.

No vaulting, no ssh-agent. No need to create python or bash scripts to pull/convert/save pem files in my .ssh directory.

@gearlbrace the issue is still that ssh requires a file and I don't think there is a way we can guarantee it being securely removed in all cases (crash), but you can still use the lookup to write the file in a template task, use it and delete it.

@bcoca Yes, however ansible can fall back to, or be configured to use, paramiko, which can accept a key argument or a file.

Maybe create something in ansible, that either runs as a separate thread or a subprocess, that creates a unix socket and implements the ssh-agent protocol. Then, ansible would either set the SSH_AUTH_SOCK env var or pass the IdentityAgent option with the path to its ansible-vault powered ssh-agent socket. In this way, the key could be vaulted and only decrypted in memory.

This approach would not work if someone is already using an ssh-agent and they want AgentForwarding to work. But, if someone's already using ssh-agent, then it would make sense for them to use that agent for this as well, loading the keys into the agent before running the relevant playbooks (possibly using a playbook that unvaults the key and runs ssh-add).

@gearlbrace switching the connection plugin used underneath for this is not a good approach; just use paramiko to start with.

@cognifloyd I don't think we should reimplement ssh-agent in ansible; just use the existing one. The security implications alone are enough to require a dedicated team of experts.

I started some work here creating a context manager that could create a fifo for use here, but the code is both not designed well to work in that respect, and we may use the private key file multiple times in a single connection.

I was thinking it might make the most sense to create an ssh-agent module that could support this behavior.

@sivel I had a prototype working at one point, but it required local_action for it to work in this context. I had originally intended it for proxies and to start up services that required keys/auth.

@gearlbrace the issue is still that ssh requires a file and I don't think there is a way we can guarantee it being securely removed in all cases (crash), but you can still use the lookup to write the file in a template task, use it and delete it.

What if whatever we implement here simply serves as a wrapper for that process? Basically a task that does those 3 things: pull the key, put it in a file, and then run a handler at the end of it all to clean it up.
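The "pull the key, put it in a file, clean it up" wrapper discussed in this thread can be sketched in Python as a context manager. This is only an illustration under stated assumptions: `temporary_key_file` is a hypothetical helper, the key text is a placeholder, and fetching the key from a vault or secrets manager is left out. As noted earlier in the thread, cleanup cannot be guaranteed if the process crashes or is killed.

```python
import contextlib
import os
import tempfile


@contextlib.contextmanager
def temporary_key_file(key_material):
    """Write key material to a private temp file and delete it afterwards.

    mkstemp creates the file readable and writable only by the current
    user. The cleanup runs on normal exit and on exceptions, but not if
    the process is killed, which is the caveat raised in the thread.
    """
    fd, path = tempfile.mkstemp(prefix="ansible-key-")
    try:
        with os.fdopen(fd, "w") as handle:
            handle.write(key_material)
        yield path
    finally:
        os.unlink(path)


# The key text here is a placeholder, not a real key.
with temporary_key_file("-----BEGIN PLACEHOLDER KEY-----\n") as key_path:
    print(os.path.exists(key_path))  # checked while the file is in use
print(os.path.exists(key_path))      # checked after the block has cleaned up
```

Inside the `with` block, the path could be handed to ssh as a key file, for example through ansible_ssh_private_key_file.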

We are also facing the same issue. We would like to use the AWS Secrets Manager lookup to get the SSH key.
