Airflow: scaling out recommendations by Shopify

How to DWH with Python, @bryzgaloffShopify runs over 10k DAGs. 150k runs per day. Over 400 tasks at a given moment on average.

This is a brief overview of their approach. Link to source article.

Fast file access

Problem: reading DAGs files from Google Cloud Storage (through GCSFuse as a filesystem interface) is too slow.

Solution:

— Use Network File System instead of Google Cloud Storage.

— For users' convenience: allow them to upload files to GCS (through GCS UI/API/CLI), then sync files to NFS via a separate script.

Pro: GCS IAM allows to control access of specific users to specific DAGs locations.

Reduce metadata

Problem: Airflow performance degrades due to metadata volume.

Solution: introduce metadata retention policy (e.g. 28 days), regularly delete older historical data (DagRuns, TaskInstances, Logs, TaskRetries, etc) via ORM.

Con: Airflow features which rely on durable job history (for example, long-running backfills) become unusable.

Alternative: clean db in Airflow 2.3+.

Manifest file

Problems:

— In a multi-tenant setting it is hard to associate DAGs with users and teams.

— Airflow serves users with different access levels ⇒ without a proper permissions control Airflow users have too wide-ranging access.

Solution:

— Introduce a manifest file containing DAG owner email, code source, namespace, access restrictions, resources pools specification, etc.

— Reject DAGs which violate assertions declared in the manifest file. Shopify raises AirflowClusterPolicyViolation for this case.

Consistent distribution of load

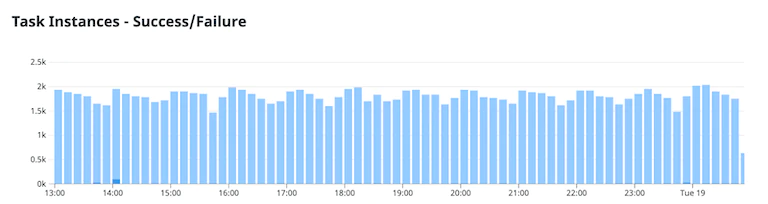

Problem: humans tend to schedule DAGs unevenly, e.g. at "round" times (like :00 minutes or :00:00 hours) or via unpredictable schedule_interval=timedelta(…). This creates large surges of traffic which can overload the Airflow scheduler.

Solution: use a deterministically randomized schedule interval. Determinism is achieved by hashing a constant seed such as dag_id.

Resources contention

Problem: there are a lot of possible points of resources contention within Airflow.

Solution: use built-in Airflow concurrency control features like:

— pools (useful for reducing disruptions caused by bursts in traffic)

— and priority weights (useful to ensure that latency-sensitive tasks are run prior to lower priority ones).

Different execution environments

Problem: different tasks may rely on different sets of dependencies (e.g. Python libraries) and requirements (e.g. hardware resources).

Solution: distribute tasks using Celery queues to differently configured workers.

---

Brought to you by @pydwh 💛