Neural network activation function: Gaussian surface

========================


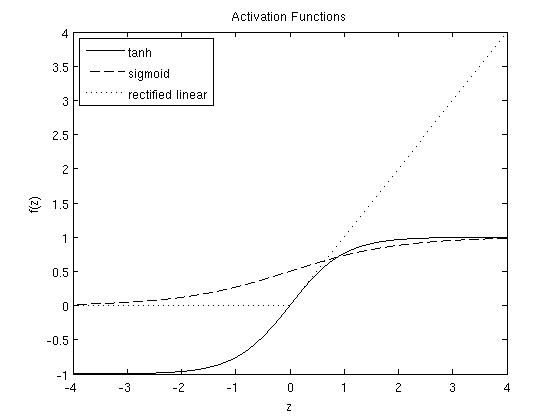

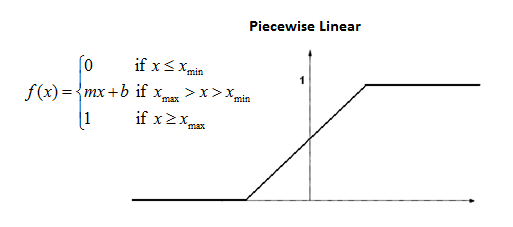

Gaussian basis functions can also serve as network activation functions. To start at the very beginning, with linear regression: a neuron computes a weighted sum of its inputs, just as linear regression does, and an activation function is then applied to that weighted sum. By definition, an activation function transforms the activation level of a unit (neuron) into an output signal. James McCaffrey explains what neural network activation functions are and why they're necessary, and explores three common activation functions. Typical implementations cover sigmoid, tanh, and ReLU. These transfer (activation) functions are chosen during the construction of, for example, three-layered neural networks. Because a neural network model is defined by the structure of its graph, choosing the activation function is part of that design. NeuPy, a Python library for artificial neural networks, implements feedforward networks of this kind.

If we only allow linear activation functions in a neural network, the whole network computes a linear function of its inputs, which is why a backpropagation network must use a nonlinear activation function. A common question is how to change the sigmoid activation function to a Gaussian one, and how to modify MATLAB code to achieve this. Gaussian bars were proposed as an activation function in 1990. A paper discussing the properties of activation functions in multilayer neural networks mentions very good results from Leung and Haykin (1993). The use of transfer functions in neural models has been studied further, and Montúfar and Morton have also contributed results in this area. Radial basis functions other than the Gaussian are used too, such as the thin plate spline $\phi(z) = z^2 \log z$. Related keywords from the speech-recognition literature include DNN, bottleneck features, hybrid and tandem systems, and ASR.
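
The weighted-sum-then-activation step described above can be sketched in Python. This is a minimal illustration, not McCaffrey's or NeuPy's code. The function names are hypothetical, and the Gaussian is assumed to take the common form $\exp(-z^2 / 2\sigma^2)$ with $\sigma = 1$ by default.

```python
import math

# Hypothetical helper names for illustration; not taken from any specific library.

def sigmoid(z: float) -> float:
    """Logistic sigmoid: squashes z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z: float) -> float:
    """Hyperbolic tangent: squashes z into (-1, 1)."""
    return math.tanh(z)

def relu(z: float) -> float:
    """Rectified linear unit: max(0, z)."""
    return max(0.0, z)

def gaussian(z: float, sigma: float = 1.0) -> float:
    """Gaussian (bell-shaped) activation exp(-z^2 / (2 sigma^2)); assumed form, peaks at 1 when z = 0."""
    return math.exp(-(z * z) / (2.0 * sigma * sigma))

def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, then the chosen activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

if __name__ == "__main__":
    x = [0.5, -1.0]
    w = [2.0, 1.0]
    b = 0.0  # weighted sum: 2*0.5 + 1*(-1) + 0 = 0
    for f in (sigmoid, tanh, relu, gaussian):
        print(f.__name__, neuron(x, w, b, f))
```

With these inputs the weighted sum is exactly 0, so the script prints 0.5 for the sigmoid, 0.0 for tanh and ReLU, and 1.0 for the Gaussian, its peak. Changing a sigmoid neuron into a Gaussian one only changes the function passed as `activation`.
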
Related reading includes *An Introduction to Neural Networks* by Vincent Cheung, "Neural networks: a replacement for Gaussian processes?", "Why aren't Gaussian activation functions used more?", "Different neural network activation functions and gradient descent", and *A Comparative Study of Gaussian Mixture…*. Some implementations model distance-based units with a public class such as `DistanceNeuron`, and one developer reports having implemented a bunch of activation functions for neural networks.
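
To validate hand-written activation functions for gradient descent, a common check compares each analytic derivative against a central finite difference. The sketch below assumes a unit-width Gaussian $\exp(-z^2/2)$, whose derivative is $-z\exp(-z^2/2)$. The helper names, step size, and tolerance are illustrative choices.

```python
import math

# Analytic derivatives for two activations (unit-width Gaussian is an assumption).
def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def gaussian(z):
    return math.exp(-z * z / 2.0)

def gaussian_prime(z):
    # d/dz exp(-z^2/2) = -z * exp(-z^2/2)
    return -z * gaussian(z)

def numeric_derivative(f, z, h=1e-5):
    """Central finite difference (f(z+h) - f(z-h)) / (2h); truncation error is O(h^2)."""
    return (f(z + h) - f(z - h)) / (2.0 * h)

def check_gradient(f, f_prime, points, tol=1e-6):
    """Return (largest analytic-vs-numeric gap, whether it is below tol)."""
    worst = max(abs(f_prime(z) - numeric_derivative(f, z)) for z in points)
    return worst, worst < tol

if __name__ == "__main__":
    pts = [-3.0, -1.0, -0.25, 0.0, 0.5, 2.0]
    for name, f, fp in [("sigmoid", sigmoid, sigmoid_prime),
                        ("gaussian", gaussian, gaussian_prime)]:
        worst, ok = check_gradient(f, fp, pts)
        print(f"{name}: max error {worst:.2e}, ok={ok}")
```

A wrong derivative, such as dropping the minus sign in `gaussian_prime`, shows up immediately as a large gap away from $z = 0$.
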

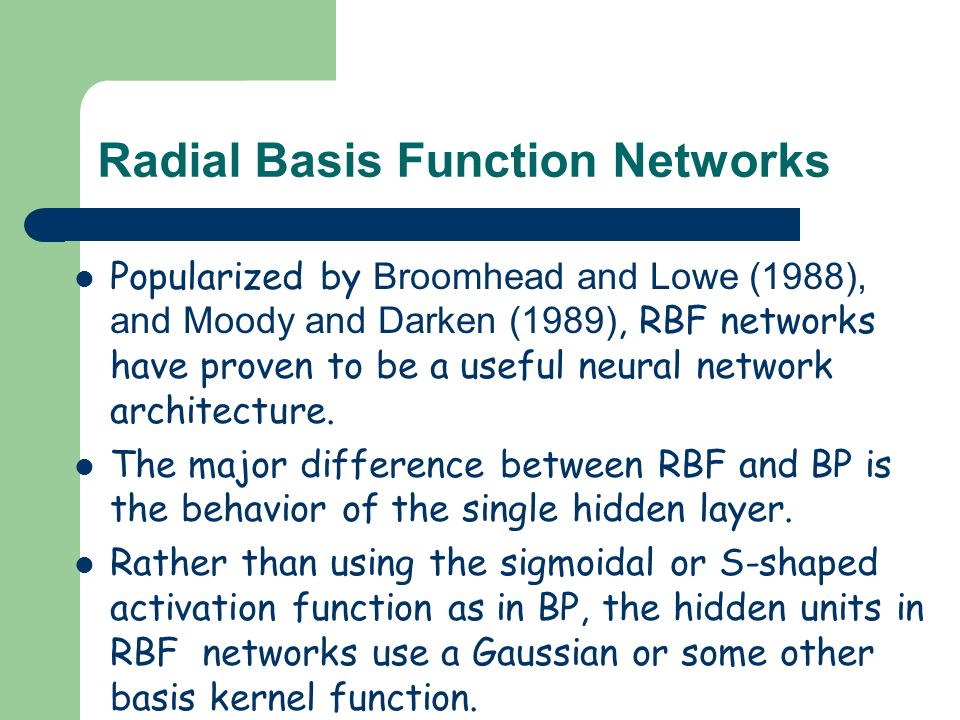

Types of activation functions include the unit step (threshold) function and radial basis functions (RBFs) such as the Gaussian or the multiquadric $\phi(z) = (z^2 + r^2)^{1/2}$. Different types of basis functions are used as the activation function in the hidden layer of an RBF network. When multiple layers use the identity activation function, the entire network is equivalent to a single linear layer. Overviews such as the SANN overview of activation functions compare these choices, and there are of course some differences between them. Related topics include Gaussian weight initialization, kernel methods for deep learning, the disadvantages of Gaussian processes (GP) with expected improvement (EI), and work published in *IEEE Transactions on Neural Networks*.
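
The claim that stacked identity-activation layers reduce to a single layer follows from $W_2(W_1 x + b_1) + b_2 = (W_2 W_1)x + (W_2 b_1 + b_2)$. The pure-Python sketch below checks this numerically. The matrix sizes and values are arbitrary assumptions.

```python
# Two layers with identity activation collapse into one linear layer.
# Matrices are plain lists of rows; sizes and values are illustrative.

def matmul(A, B):
    """Multiply matrix A (m x n) by matrix B (n x p)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def vadd(u, v):
    return [a + b for a, b in zip(u, v)]

def two_layer_identity(x, W1, b1, W2, b2):
    """y = W2 (W1 x + b1) + b2: identity activation after each layer."""
    h = vadd(matvec(W1, x), b1)      # hidden layer, activation = identity
    return vadd(matvec(W2, h), b2)

def collapsed(W1, b1, W2, b2):
    """Equivalent single layer: W = W2 W1, b = W2 b1 + b2."""
    return matmul(W2, W1), vadd(matvec(W2, b1), b2)

if __name__ == "__main__":
    W1 = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]   # 3 x 2
    b1 = [0.1, -0.2, 0.3]
    W2 = [[1.0, -1.0, 2.0]]                       # 1 x 3
    b2 = [0.5]
    W, b = collapsed(W1, b1, W2, b2)
    x = [0.7, -1.3]
    print(two_layer_identity(x, W1, b1, W2, b2), vadd(matvec(W, x), b))
```

Both printed values agree up to floating-point rounding, so the hidden layer adds no expressive power; inserting any nonlinearity between the layers breaks this equivalence.
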

Gaussian functions are continuous, bell-shaped curves; unlike the bipolar sigmoid activation function, they are not monotonic. A network with several hidden layers is also called a deep neural network. Other work treats Long Short-Term Memory units as an activation function, and *Stochastic Neural Networks with Monotonic Activation Functions* builds networks of stochastic neurons and trains the resulting model using contrastive divergence. Course material such as CS 5233 Artificial Intelligence covers the basics. One developer who implemented a bunch of activation functions for neural networks wanted validation that they work correctly, and notes that it should be very easy to add activation functions. The public classes `DistanceNetwork` and `DistanceLayer` are listed as well. See also *Sigmoid Functions and Their Usage in Artificial Neural Networks* and the question "Why aren't Gaussian activation functions used more often in neural networks?", tagged c#, neural-network, derivative, and activation-function.

One article presents a design principle for a neural network using Gaussian activation functions, referred to as a Gaussian Potential Function Network (GPFN). In the architecture of an RBF network the hidden units are Gaussian, yet an RBF network is used in much the same way as an MLP.
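
An RBF network differs from an MLP mainly in its hidden layer: each hidden unit measures the distance from the input to a center and passes it through a bell-shaped Gaussian, and the output layer takes a weighted sum. A minimal forward pass might look like this. The function names are hypothetical, and the centers, widths, and weights are made-up, untrained values.

```python
import math

def gaussian_rbf(x, center, width):
    """phi(x) = exp(-||x - c||^2 / (2 * width^2)); equals 1 at the center, decays with distance."""
    dist2 = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
    return math.exp(-dist2 / (2.0 * width ** 2))

def rbf_forward(x, centers, widths, weights, bias=0.0):
    """Hidden layer: one Gaussian unit per center. Output layer: weighted sum plus bias."""
    hidden = [gaussian_rbf(x, c, s) for c, s in zip(centers, widths)]
    return sum(w * h for w, h in zip(weights, hidden)) + bias

if __name__ == "__main__":
    centers = [[0.0, 0.0], [1.0, 1.0]]   # illustrative centers, not learned
    widths = [1.0, 0.5]
    weights = [2.0, -1.0]
    print(rbf_forward([0.0, 0.0], centers, widths, weights))
```

At the input (0, 0) the first unit fires fully and the second is almost silent, so the output is close to 2. An MLP unit would instead compute a dot product with its weights before the activation.
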

A radial basis function network (RBFN) is a particular type of neural network, which raises the question of what role the activation function plays in a neural network. In function approximation (see *Function Approximation Using Artificial Neural Networks*), studies compare activation choices directly: a Gauss-Sigmoid neural network has been proposed; a systematic investigation examined the suitability of employing various functions as the activation functions for neural networks; and another work used the Morlet and Gaussian wavelets as activation functions in the hidden nodes, with simulations of both neural network models in function approximation. In biologically inspired neural networks, the activation function is usually an abstraction representing the rate of action potential firing in the cell (see also *Understanding Activation Functions in Neural…*).
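
For function approximation, one simple RBF setup places a Gaussian center at every training input and solves the linear system $\Phi w = y$ for the output weights (exact interpolation, chosen here for brevity). The sketch assumes a target of $\sin x$ on seven points and a width of 0.5, both illustrative. Practical RBF networks usually use fewer centers than samples and fit the weights by least squares.

```python
import math

# Sketch: 1-D Gaussian RBF network with one center per training input (exact interpolation).

def gaussian(r, width):
    return math.exp(-(r * r) / (2.0 * width * width))

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (A is square)."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]   # augmented copy of [A | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):                 # back substitution
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def fit_rbf(xs, ys, width):
    """Output weights that make the network pass through every (x, y) sample."""
    Phi = [[gaussian(xi - cj, width) for cj in xs] for xi in xs]
    return solve(Phi, ys)

def predict(x, centers, weights, width):
    return sum(w * gaussian(x - c, width) for w, c in zip(weights, centers))

if __name__ == "__main__":
    xs = [i * 0.5 for i in range(7)]       # 0.0, 0.5, ..., 3.0
    ys = [math.sin(x) for x in xs]         # illustrative target
    w = fit_rbf(xs, ys, width=0.5)
    for x in (0.25, 1.25, 2.75):
        print(x, predict(x, xs, w, 0.5), math.sin(x))
```

The Gaussian interpolation matrix is nonsingular for distinct centers, so the solve succeeds; the printed pairs show how the fitted network behaves between the training points.
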
Lists of types of artificial neural networks include networks built on the Gaussian function, and some training tools use the Gauss-Newton optimization algorithm by default. One paper's keywords read: RBF network, activation function, Plane-Gaussian function (PGF); its introduction contrasts MLPs and RBFNs. A related question asks how to force a neural network to have Gaussian hidden activations, with one answer adding to David Warde-Farley's excellent answers. NeuPy supports many different types of neural networks, and some libraries provide a function that computes the negative log-likelihood of a Gaussian distribution.
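
The negative log-likelihood of samples $x_1, \dots, x_n$ under $\mathcal{N}(\mu, \sigma^2)$ is $\sum_i \left[\tfrac12 \log(2\pi\sigma^2) + \tfrac{(x_i - \mu)^2}{2\sigma^2}\right]$. A plain-Python sketch follows; the function name is hypothetical and not any particular library's API.

```python
import math

def gaussian_nll(xs, mu, sigma):
    """Negative log-likelihood of samples xs under N(mu, sigma^2), summed over samples.

    Per sample: 0.5 * log(2 * pi * sigma^2) + (x - mu)^2 / (2 * sigma^2).
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    var = sigma * sigma
    return sum(0.5 * math.log(2.0 * math.pi * var) + (x - mu) ** 2 / (2.0 * var)
               for x in xs)

if __name__ == "__main__":
    data = [1.0, 2.0, 4.0]
    for mu in (2.0, 7.0 / 3.0, 2.5):
        print(mu, gaussian_nll(data, mu, 1.0))
```

For fixed $\sigma$ the loss is smallest at the sample mean (7/3 here). When a network predicts both $\mu$ and $\sigma$, this quantity is a common training loss, and $\sigma$ is typically kept positive by predicting $\log\sigma$ or passing a raw output through a positive function.
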
