Neural network activation function for regression model

========================

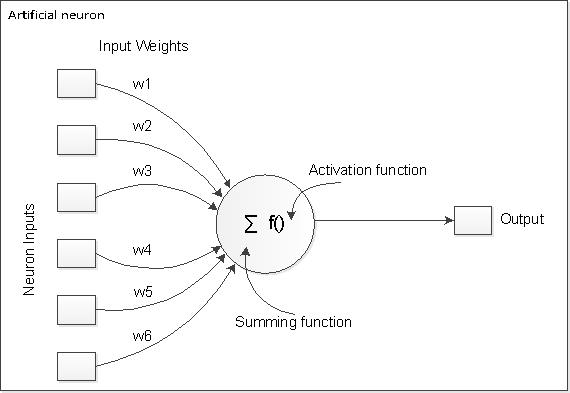

Liquid state machines (LSMs) are sparse neural networks, meaning not all nodes are connected, whose activation functions are replaced by thresholds. A neural network is called a mapping network if it is able to compute some functional relationship between its input and its output. Like linear regression, a linear activation function transforms the weighted sum of the inputs of the neuron into an output using a linear function. Backward propagation passes the propagation's output activations back through the neural network. Note that this article assumes you have basic knowledge of the artificial neuron. I was particularly curious about the activation functions available in Keras, a Python library for building neural networks.

What are activation functions? Activation functions, also known as transfer functions, are used to map input nodes to output nodes in a certain fashion. They are the decision-making units of neural networks. Neural networks work by computing weighted summations of input vectors that are then passed through nonlinear activation functions. Based on the connection strengths (weights), inhibition or excitation, and transfer functions, the activation value is passed from node to node. An activation function is just a thing (a node) that you add to the output end of any neural network.
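To make the weighted-sum description concrete, here is a minimal sketch of a single neuron in plain Python. The function names (`weighted_sum`, `linear`, `sigmoid`, `neuron`) and the example numbers are illustrative assumptions, not taken from Keras or from the original article.

```python
import math

def weighted_sum(inputs, weights, bias):
    """Pre-activation of a neuron: sum of w_i * x_i plus the bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias

def linear(z):
    """Linear (identity) activation: the output is the weighted sum itself."""
    return z

def sigmoid(z):
    """Logistic sigmoid: squashes any real value into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation):
    """Apply the chosen activation to the neuron's weighted sum."""
    return activation(weighted_sum(inputs, weights, bias))

# Hypothetical inputs and parameters, chosen only for illustration.
x = [1.0, 2.0]
w = [0.5, -0.25]
b = 0.1

regression_output = neuron(x, w, b, linear)   # unbounded real value
squashed_output = neuron(x, w, b, sigmoid)    # always between 0 and 1
print(regression_output, squashed_output)
```

For a regression model, the linear activation is the usual choice at the output node because the prediction is not confined to a fixed range.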

Introduction: when constructing artificial neural network (ANN) models, one of the primary considerations is choosing activation functions for the hidden and output layers that are differentiable. In Python, a sigmoid is commonly written with NumPy as a vectorized function, `sigmoid(x)`. One activation function is used when computing the values of the nodes in the middle hidden layer, and one function is used when computing the values of the nodes in the final output layer. We discussed feedforward neural networks and activation functions.
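The idea of one activation for the hidden layer and another for the output layer can be sketched as follows. This is a minimal example under stated assumptions: it uses the standard `math` module instead of NumPy, picks a sigmoid for the hidden layer and a linear output (a common regression setup), and the `forward` helper and all weights are hypothetical.

```python
import math

def sigmoid(z):
    """Logistic sigmoid, differentiable everywhere."""
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_derivative(z):
    """Derivative of the sigmoid: s(z) * (1 - s(z)), needed for gradient-based training."""
    s = sigmoid(z)
    return s * (1.0 - s)

def forward(x, hidden_weights, hidden_biases, output_weights, output_bias):
    """Forward pass: sigmoid hidden layer followed by a single linear output node."""
    hidden = [
        sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(hidden_weights, hidden_biases)
    ]
    # Linear output activation: the prediction is the weighted sum itself.
    output = sum(w * h for w, h in zip(output_weights, hidden)) + output_bias
    return hidden, output

# Hypothetical parameters for a network with 2 inputs, 2 hidden nodes, 1 output.
hidden, y_hat = forward(
    x=[1.0, -2.0],
    hidden_weights=[[0.3, 0.1], [-0.4, 0.2]],
    hidden_biases=[0.0, 0.1],
    output_weights=[1.5, -0.5],
    output_bias=0.2,
)
print(hidden, y_hat)
```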

There is also a practical example for the neural network. In the network, activation functions are chosen and the network parameters (weights and biases) are initialized. This activation function is usually the sigmoid function, which has the input-output mapping shown in Figure 4. A standard computer chip circuit can be seen as a digital network of activation functions that can be on or off depending on the input. Hidden-layer activation functions should not be linear, because a neural network with only linear activation functions is effectively one layer deep, regardless of how complex its architecture is. We saw that neural networks are universal function approximators.
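The claim that stacked linear layers are no more expressive than a single layer can be checked numerically. The sketch below uses small hypothetical weight matrices and omits biases for brevity; the helpers `matvec` and `matmul` are written here for illustration only.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

# Hypothetical weights: a 2 -> 3 hidden layer and a 3 -> 1 output layer,
# both with linear (identity) activations.
W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]
W2 = [[2.0, 0.0, 1.0]]
x = [4.0, 5.0]

two_layers = matvec(W2, matvec(W1, x))   # pass through both layers
one_layer = matvec(matmul(W2, W1), x)    # a single layer with weights W2 @ W1

print(two_layers, one_layer)  # the two results are identical
```

Because the product `W2 @ W1` is itself just one weight matrix, the two-layer linear network computes exactly what a one-layer network could. A nonlinear hidden activation is what breaks this equivalence.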

In the context of artificial neural networks, the rectifier is an activation function defined as the positive part of its argument, f(x) = max(0, x), where x is the input to a neuron. The activation function of the artificial neurons in ANNs implementing the backpropagation algorithm is applied to a weighted sum of the inputs. Neural networks are models of biological neural structures. Artificial neural networks are models that can improve themselves with experience. Sigmoid activation functions are differentiable functions that map the perceptron's potential to a range of values such as 0 to 1. The softmax function is a more generalized logistic activation function, which is used for multiclass classification.
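The rectifier and softmax definitions translate directly into code. The following is a minimal sketch in plain Python; the function names are illustrative, and subtracting the maximum score inside `softmax` is a standard numerical-stability step added here, not something the text above specifies.

```python
import math

def relu(x):
    """Rectifier: the positive part of its argument, max(0, x)."""
    return max(0.0, x)

def sigmoid(z):
    """Logistic sigmoid, included to compare against two-class softmax."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(scores):
    """Turn a list of real scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

print([relu(v) for v in (-3.0, 0.0, 2.5)])
probs = softmax([2.0, 1.0, 0.1])
print(probs, sum(probs))

# With two classes and scores [z, 0], softmax reduces to the logistic sigmoid.
z = 1.3
print(softmax([z, 0.0])[0], sigmoid(z))
```

The last comparison illustrates why softmax is described as a generalization of the logistic function.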


Müller, Neural Networks: Tricks of the Trade, 1998. Although backpropagation can be applied to networks with any number of layers