Linear activation function in artificial neural network examples

========================

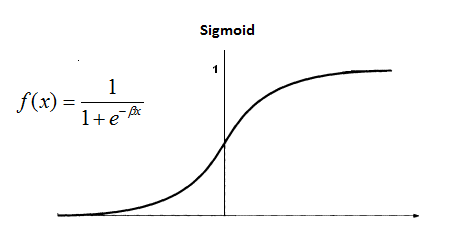

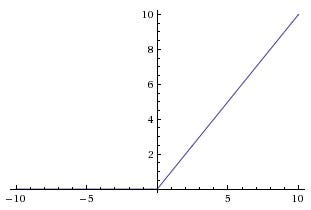

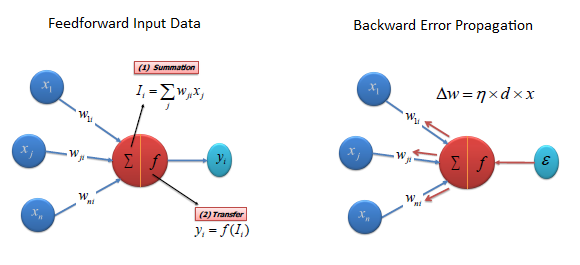

Applied deep learning: artificial neural networks. The proposed method uses a series representation to approximate the nonlinearity of activation functions and learns the coefficients of the nonlinear terms with AdaBoost. Swarm optimization for nonlinear channel equalization (Jan 2018): an artificial neural network trained by particle swarm optimization. The options "logistic" and "tanh" are possible, for the logistic function and the hyperbolic tangent.

If a multilayer perceptron has a linear activation function in all neurons, that is, a linear function that maps the weighted inputs to the output of each neuron, then any number of layers can be reduced to a single linear input-output map. Earlier, artificial neurons in feedforward networks were called perceptrons. How to implement a neural network, part 1. Related course topics: computing a neural network's output; vectorizing across multiple examples; explanation for vectorized implementation; activation functions; why you need nonlinear activation functions; derivatives of activation functions; gradient descent for neural networks.

All three approaches use a nonlinear kernel function to project the input data into a space where the learning problem can be solved. Elements of nonlinear statistics and neural networks. Nonlinear activation functions introduce nonlinear properties to our network. Estimation of pressuremeter modulus and limit pressure of clayey soils using various artificial neural networks. In artificial neural networks the activation function is also called the transfer function. We will cover three applications: linear regression, two-class classification using the perceptron algorithm, and multiclass classification. In these notes we choose the sigmoid function as the nonlinear activation function for artificial neurons. Hopfield uses the McCulloch-Pitts dynamical rule as a nonlinear activation function. A leakiness of 0 leads to the standard rectifier, a leakiness of 1 leads to a linear activation function, and any value in between gives a leaky rectifier. For the input layer and the output layer we used a linear activation function.

Nonlinear: when the activation function is nonlinear, a two-layer neural network can be proven to be a universal function approximator. Common neural network activation functions (Sep 2014). A gentle introduction to artificial neural networks. Activation functions in the operation of an artificial neural network. Understanding backpropagation. In a multilayer perceptron we generally use a sigmoid activation function in the hidden layer and a linear activation function in the output layer. What happens when a sigmoid function is used in the output layer too? Artificial neural networks: algorithms, tutorials and software. What does an artificial neuron do? It calculates the weighted sum of its inputs, adds a bias, and decides whether the particular neuron should fire.
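
The description of a neuron above (weighted sum, then a decision whether to fire) can be sketched in a few lines of plain Python. This is only an illustrative sketch: the function names, weights, inputs and bias are made up for the example and do not come from any particular library. It also hints at the output-layer question: a sigmoid output is always confined to the interval (0, 1), while a linear output passes the weighted sum through unchanged.

```python
import math

def weighted_sum(weights, inputs, bias):
    """Net input of one neuron: sum of w_i * x_i plus a bias term."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias

def step(z):
    """Binary threshold: the neuron fires (1.0) when the net input is non-negative."""
    return 1.0 if z >= 0 else 0.0

def sigmoid(z):
    """Logistic function: squashes any real net input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def linear(z):
    """Identity activation: the output equals the net input."""
    return z

# Hypothetical example values, chosen only for illustration.
weights = [0.5, -1.0]
inputs = [2.0, 0.5]
bias = 0.25

z = weighted_sum(weights, inputs, bias)  # 1.0 - 0.5 + 0.25 = 0.75
print("net input:", z)
print("step:", step(z))
print("sigmoid:", sigmoid(z))
print("linear:", linear(z))
```

With a linear output unit the network can produce any real value, which suits regression targets; a sigmoid output unit is a natural fit when the target is a probability or a 0/1 label.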

Artificial neurons are the main component of neural networks. Finally, a novel method is introduced to incorporate nonlinear activation functions in artificial neural network learning. Activation functions used in the neural network literature. The linear neurons have linear activation functions with slope one, whereas the nonlinear neuron has a nonlinear activation function f. The identity function is the simplest possible activation function; the resulting unit is called a linear associator. The output value is computed using the activation function.
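
To see why a network built only from linear (identity) units gains nothing from extra layers, the following sketch compares a two-layer linear network with a single layer whose weight matrix is the product of the two. The matrices and the input vector are arbitrary illustrative values, and biases are left out for brevity (with biases the combined map is still a single affine function).

```python
def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrix A by matrix B (both given as lists of rows)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

# Hypothetical weights: 2 inputs -> 3 hidden units -> 2 outputs.
W1 = [[1.0, 2.0], [0.0, -1.0], [3.0, 1.0]]
W2 = [[1.0, 0.0, 2.0], [-1.0, 1.0, 0.0]]
x = [1.0, -2.0]

# Two layers with identity activation: y = W2 (W1 x).
two_layer = matvec(W2, matvec(W1, x))

# One layer with the combined weight matrix: y = (W2 W1) x.
W_combined = matmul(W2, W1)
one_layer = matvec(W_combined, x)

print(two_layer)
print(one_layer)
```

Both computations give the same output for every input, so the hidden layer adds no representational power until a nonlinear activation is placed between the two matrices.
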
For our study, however, we restricted our experiments to artificial neural networks. These constants represent the activation functions available within the FANN library. The activation function is the nonlinear transformation that we apply to the input signal. ANNs can also be used with piecewise-linear activation functions. So what does an artificial neuron do? Common activation functions include ReLU, softmax and others; the activation can be a nonlinear function.
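
As a rough illustration of the piecewise-linear and softmax activations mentioned here, the sketch below defines ReLU, a leaky rectifier with an adjustable leakiness, and softmax in plain Python. The function names and the `leakiness` parameter are chosen for this example only and are not the API of FANN or any other library.

```python
import math

def relu(z):
    """Rectified linear unit: zero for negative inputs, identity otherwise."""
    return max(0.0, z)

def leaky_rectify(z, leakiness=0.01):
    """Piecewise-linear rectifier with slope `leakiness` for negative inputs.

    leakiness = 0 gives the standard rectifier, leakiness = 1 gives a
    purely linear (identity) activation.
    """
    return z if z >= 0 else leakiness * z

def softmax(zs):
    """Turn a list of scores into positive values that sum to 1."""
    m = max(zs)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

values = [-2.0, -0.5, 0.0, 1.5]
print([relu(v) for v in values])
print([leaky_rectify(v, leakiness=0.1) for v in values])
print([leaky_rectify(v, leakiness=1.0) for v in values])  # same as the input
print(softmax([1.0, 2.0, 3.0]))
```

ReLU and the leaky rectifier act on each unit independently, whereas softmax normalizes a whole vector of scores and is therefore typically used on an output layer for multiclass classification.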

Learn about step functions, linear combinations, sigmoid and sinusoid functions, and rectified linear units as part of activation functions in neural networks. The Minsky and Papert book [MP69] slowed down artificial neural network research. An activation function is a simple mapping of the summed weighted input to the output of the neuron. This instance is equipped with GPUs, vCPUs and 60 GiB of memory. The activation function can be a linear function, which represents straight lines or planes, or a nonlinear function, which represents curves. This activation function is linear and therefore has the same problems as the binary function. Keywords: ANN, FPGA, activation function. Such a network, using practically any nonlinear activation function, can approximate any continuous function. When dealing with a complex problem using a neural network, we may use an activation function to simulate the activated neuron.

Introduction to artificial NNs: NNs as continuous input-output mappings; continuous mappings, definition and some examples; building blocks: neurons, activation functions, layers. Neural networks give a way of defining a complex, nonlinear form of hypotheses over the input x. A single-layer neural network: the adaptive linear neuron (Adaline), using a linear (identity) activation function with the batch gradient descent method. Comparison of the abilities of artificial neural network transfer functions.
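
The adaptive linear neuron mentioned above can be sketched as follows: a single unit with identity activation, trained with batch gradient descent on the mean squared error. This is a minimal sketch rather than a reference implementation; the function name `train_adaline`, the learning rate, the number of epochs and the toy data (points on the line y = 2x + 1) are all assumptions made for the example.

```python
def train_adaline(X, y, lr=0.1, epochs=1000):
    """Fit one linear neuron with batch gradient descent on squared error."""
    n_samples = len(X)
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        # Identity activation: the output is the net input itself.
        outputs = [sum(wi * xi for wi, xi in zip(w, row)) + b for row in X]
        errors = [target - out for target, out in zip(y, outputs)]
        # Batch update: average the gradient over all samples before stepping.
        for j in range(n_features):
            grad_j = sum(e * row[j] for e, row in zip(errors, X)) / n_samples
            w[j] += lr * grad_j
        b += lr * sum(errors) / n_samples
    return w, b

# Toy data generated from y = 2x + 1 (hypothetical, for illustration only).
X = [[0.0], [1.0], [2.0], [3.0]]
y = [1.0, 3.0, 5.0, 7.0]

w, b = train_adaline(X, y)
print("weight:", w[0], "bias:", b)
```

Because the activation is the identity, the error surface is a convex quadratic and the weights converge toward the least-squares solution, provided the learning rate is small enough. For classification, the continuous output would typically be thresholded after training.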
