Derivative of sigmoid activation functions

========================

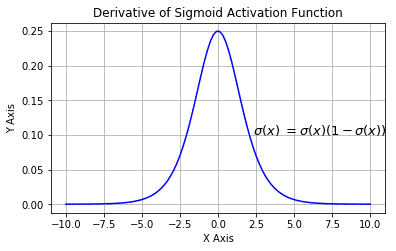

Here, … is called the activation. The sigmoid is not the only option: online-learning results are often reported for sigmoid, tanh, linear and Gaussian RBF activations, and the hyperbolic tangent function can be used as an alternative to the sigmoid. In the network discussed here, there are 200 sigmoid activation functions in the hidden layer and one sigmoid function in the output layer, whose output is then squashed into the range (0, 1).

The derivative of the activation function is required to calculate the weight updates during training, so the derivative of the sigmoid with respect to its input is needed later. Hardware designs have the same need: one such circuit generates the log-sigmoid and tan-sigmoid NAF (neuron activation function) outputs together with their derivatives. In MATLAB, `logsig('output',FP)` returns …

A sigmoid function is a mathematical function with a characteristic S-shaped curve; the logistic curve and the error function are two examples. It is bounded, differentiable, defined for all real input values, and has a non-negative derivative at each point. The sigmoid is useful because it is like a smoothed-out step function, and in this respect sigmoid functions are very similar to the input-output relationships of …

For the logistic sigmoid $\sigma(x) = \frac{1}{1 + e^{-x}}$, the derivative can be expressed through the function itself:

$$\sigma'(x) = \frac{e^{-x}}{(1 + e^{-x})^2} = \frac{1}{1 + e^{-x}} \cdot \frac{e^{-x}}{1 + e^{-x}} = \sigma(x)\,\bigl(1 - \sigma(x)\bigr)$$

As $|x|$ grows, this derivative converges exponentially toward 0, whereas the derivative of a linear activation is constant over the whole input range. Typical neuron activation functions are tanh, sigmoid and ReLU, and it pays to choose activation functions whose derivatives take a particularly simple form, because the well-known backpropagation derivative computation for multilayer perceptrons (MLPs) evaluates these derivatives for the activation functions in both the hidden and the output layers.

Let's go into detail, take the derivative of the sigmoid function, and plot it. Related material (including an August 2017 piece on the derivatives of activation functions) covers deriving the sigmoid derivative for neural networks and arctan activations for neural networks. Jason Schlessman's 2002 Lehigh University thesis (Lehigh Preserve, Theses and Dissertations), *Approximation of the Sigmoid Function and Its Derivative Using a Minimax Approach*, studies the same function, and "Secret Sauce behind the Beauty of Deep Learning" is a beginner's guide to activation functions.
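
As a sketch, the activations named above and their analytic derivatives can be written in plain Python and checked against central finite differences. The names `ACTIVATIONS`, `numeric_derivative` and `max_derivative_error` are illustrative only, and the Gaussian RBF is assumed to be $e^{-x^2}$ with the linear activation taken as the identity; other parameterizations exist.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^-x), computed without overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

# Each entry maps a name to (activation, analytic derivative).
# Assumption: "Gaussian RBF" means exp(-x^2) and "linear" means the identity.
ACTIVATIONS = {
    "sigmoid": (sigmoid, lambda x: sigmoid(x) * (1.0 - sigmoid(x))),
    "tanh": (math.tanh, lambda x: 1.0 - math.tanh(x) ** 2),
    "linear": (lambda x: x, lambda x: 1.0),
    "gaussian": (lambda x: math.exp(-x * x), lambda x: -2.0 * x * math.exp(-x * x)),
    "arctan": (math.atan, lambda x: 1.0 / (1.0 + x * x)),
}

def numeric_derivative(f, x: float, h: float = 1e-5) -> float:
    """Central finite-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

def max_derivative_error(points=(-3.0, -0.5, 0.0, 0.7, 2.5)) -> dict:
    """Largest |analytic - numeric| derivative gap per activation over the sample points."""
    return {
        name: max(abs(df(x) - numeric_derivative(f, x)) for x in points)
        for name, (f, df) in ACTIVATIONS.items()
    }

if __name__ == "__main__":
    for name, err in max_derivative_error().items():
        print(f"{name:8s} max error {err:.2e}")
```

ReLU is left out on purpose: it has no derivative at 0, so a finite-difference check there is not meaningful.
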
Bimodal derivative activation functions have also been proposed for sigmoidal feedforward networks. A perfect model would have a log loss of 0.
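
To make the log-loss remark concrete, here is a minimal binary log-loss (cross-entropy) function, assuming labels in {0, 1} and predicted probabilities such as a sigmoid output would produce. The name `log_loss` is illustrative and not a specific library's API.

```python
import math

def log_loss(labels, probs):
    """Mean binary log loss for labels in {0, 1} and predicted probabilities."""
    total = 0.0
    for y, p in zip(labels, probs):
        # Only the term for the true class contributes, so a perfect prediction
        # (p == 1 for y == 1, p == 0 for y == 0) adds -log(1) == 0.
        total += -math.log(p) if y == 1 else -math.log(1.0 - p)
    return total / len(labels)

print(log_loss([1, 0, 1], [1.0, 0.0, 1.0]))  # perfect model
print(log_loss([1, 0, 1], [0.9, 0.2, 0.6]))  # imperfect model, loss > 0
```

A confidently wrong prediction (for example `p == 0` when `y == 1`) makes the loss infinite, and `math.log` raises a `ValueError` in that case; practical code usually clips probabilities away from 0 and 1.
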

In an introductory neural-networks lesson, the output layer uses a sigmoid activation function because the target variable should be a probability between 0 and 1. More generally, activation functions are used to introduce nonlinearity into the otherwise linear output of each neuron, and several variants exist, from adaptive activation functions for deep networks to the bipolar sigmoid, which in its basic form is $2\sigma(x) - 1$ with outputs in $(-1, 1)$. Some setups, such as an Encog neural network structure, employ only a select few activation functions, for example the identity and the sigmoid. For a hands-on example, see "Build a Flexible Neural Network with Backpropagation in Python" (Samay Shamdasani, August 2017, updated October 2017).

When you backpropagate, the derivative of the output activation appears in the partial derivative of the error with respect to each weight. With a sigmoid output, that factor is small: the maximum of the sigmoid's derivative is 0.25, reached at $x = 0$. The techniques developed to address this include a better choice of cost function, known as the cross-entropy cost function.
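
The following sketch illustrates why the cross-entropy cost is often paired with a sigmoid output neuron, assuming a single neuron with output $a = \sigma(z)$ and target $y$. Under the quadratic cost $C = (a - y)^2 / 2$, the gradient with respect to $z$ is $(a - y)\,\sigma'(z)$; under the cross-entropy cost $C = -[y \ln a + (1 - y)\ln(1 - a)]$ it simplifies to $a - y$, so the $\sigma'(z)$ factor (at most 0.25) drops out. The function names are hypothetical.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def quadratic_grad(z: float, y: float) -> float:
    """dC/dz for C = (a - y)^2 / 2 with a = sigmoid(z); includes sigma'(z) = a(1 - a)."""
    a = sigmoid(z)
    return (a - y) * a * (1.0 - a)

def cross_entropy_grad(z: float, y: float) -> float:
    """dC/dz for C = -[y ln a + (1 - y) ln(1 - a)] with a = sigmoid(z); sigma'(z) cancels."""
    return sigmoid(z) - y

# A badly wrong, saturated neuron: target 0, but z = 8 pushes a close to 1.
z, y = 8.0, 0.0
print(f"quadratic:     {quadratic_grad(z, y):.6f}")
print(f"cross-entropy: {cross_entropy_grad(z, y):.6f}")
```

The saturated neuron barely moves under the quadratic cost, because $\sigma'(8)$ is tiny, but receives a gradient close to 1 under cross-entropy.
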
This can be done with a Theano flag. When $x$ is large in magnitude (very negative or very positive), the derivative of the sigmoid function is very small, which can slow down learning.
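
A short sketch of this saturation effect evaluates the derivatives of the sigmoid, tanh and bipolar sigmoid at a few inputs. The bipolar sigmoid is assumed here to be $2\sigma(x) - 1$, so its derivative is $2\sigma'(x)$. All three derivatives peak at $x = 0$ (0.25, 1 and 0.5 respectively) and become tiny for large $|x|$.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_prime(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)

def tanh_prime(x: float) -> float:
    return 1.0 - math.tanh(x) ** 2

def bipolar_sigmoid_prime(x: float) -> float:
    # Assumed definition: f(x) = 2*sigmoid(x) - 1, so f'(x) = 2*sigmoid'(x).
    return 2.0 * sigmoid_prime(x)

for x in (0.0, 2.0, 5.0, 10.0):
    print(f"x={x:5.1f}  sigmoid'={sigmoid_prime(x):.2e}  "
          f"tanh'={tanh_prime(x):.2e}  bipolar'={bipolar_sigmoid_prime(x):.2e}")
```

At the origin the tanh derivative is four times the sigmoid's, though both vanish as the input saturates.
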

In this setup, the activation functions of all neurons in the ANN are the same. Transfer functions calculate a layer's output from its net input. All of the problems mentioned above can be handled using a normalizable sigmoid activation. My understanding is that you need to calculate the derivative of the activation function. Thanks a lot, jpmuc: inspired by your answer, I calculated and plotted the derivative of the tanh function and of the standard sigmoid function separately. The sigmoid function is also called the sigmoidal curve (von Seggern 2007, p. …). Four bimodal-derivative sigmoidal adaptive activation functions have been used as the hidden-layer activation in single-hidden-layer sigmoidal feedforward artificial neural networks. This is because the derivative of the sigmoid activation function in